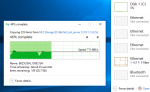

iperf says 9.3 Gb/s each way.

Ordering another 16GB of memory today.

How do QNAP and Synology boxes get away with so little memory on their 10GbE products? Can they actually reach particularly high throughput?

It's a matter of design. FreeNAS uses ZFS, which was built from the ground up on the assumption that memory is cheap, because it was intended to run on big Sun Solaris servers. That lets ZFS do some interesting and amazing things, including going MUCH faster than the underlying hardware would otherwise allow, thanks to features like the ARC (Adaptive Replacement Cache, ZFS's in-RAM read cache). To get that, however, you have to throw lots of resources at it. If you under-resource it, it performs rather poorly.
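To put rough numbers on why RAM buys speed, here's a back-of-the-envelope sketch (a generic cache model, not ZFS code): if some fraction of reads is served from RAM, the average cost per read is a weighted mix of RAM and disk latency. The 0.01 ms and 10 ms figures are assumptions for illustration, not measurements.

```python
def effective_read_time_ms(hit_ratio, ram_ms, disk_ms):
    """Average time per random read when `hit_ratio` of reads are served from RAM."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return hit_ratio * ram_ms + (1.0 - hit_ratio) * disk_ms


# Assumed, illustrative latencies: a RAM hit vs. a seek on a spinning disk.
RAM_MS = 0.01
DISK_MS = 10.0

for hit in (0.0, 0.5, 0.9, 0.99):
    t = effective_read_time_ms(hit, RAM_MS, DISK_MS)
    print(f"hit ratio {hit:.2f}: {t:.3f} ms/read, ~{1000 / t:.0f} reads/s")
```

The disk term dominates until the hit ratio gets very high, which is why a bigger cache (more RAM) pays off so sharply, and why a starved one hurts.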

Those smaller NAS boxes don't have the complexity of ZFS. A typical hard drive might read files at 200 MBytes/sec, and a two-disk mirror might read at 400 MBytes/sec, since reads can be split across both disks while every write must go to both. So with a simple filesystem like EXT3, there's an easy, low-resource way for a SoC-based NAS to perform ~400 MBytes/sec reads and ~200 MBytes/sec writes for sequential access. The catch is that you will *never* go faster than the underlying hard drives allow. Things like disk seeks bring that number down; it is possible to bring a SoC NAS to its knees on platter-based HDDs with just a few hundred IOPS worth of traffic.
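The mirror arithmetic can be written out as an idealized sketch. The function names are hypothetical, and the 150 IOPS / 4 KB figures in the seek-bound case are assumptions chosen only to show how far random I/O drags throughput below the sequential numbers.

```python
def mirror_throughput_mb_s(disk_mb_s, members):
    """Idealized sequential (read, write) MB/s for an N-way mirror.

    Reads can be spread across every member; each write must land on all
    members, so writes run at single-disk speed.
    """
    if members < 1:
        raise ValueError("a mirror needs at least one member")
    return disk_mb_s * members, disk_mb_s


def seek_bound_mb_s(iops, io_size_kb):
    """Throughput when every I/O pays for a seek: IOPS x request size (KB -> MB)."""
    return iops * io_size_kb / 1000


read, write = mirror_throughput_mb_s(200, 2)
print(f"2-way mirror: ~{read} MB/s read, ~{write} MB/s write")
# Assumed: ~150 random IOPS from one spinning disk, 4 KB per request.
print(f"seek-bound random I/O: ~{seek_bound_mb_s(150, 4):.1f} MB/s")
```

Real mirrors rarely hit the idealized read figure for a single stream, but the shape holds: sequential numbers look fine, and seek-heavy traffic collapses to a tiny fraction of them.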

With ZFS, the system might be sustaining a hell of a lot more traffic than that, and it doesn't necessarily need to be sequential. If data can be held in ARC/L2ARC, fetching it requires no seeks. When writing to the pool, even "random" updates tend to be aggregated and written to contiguous space, so a ZFS filer can pull off some amazing stunts with traffic that would melt even a high-end traditional array like an EqualLogic.
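Here's a toy model of why aggregating writes helps on spinning disks. It is not ZFS's actual allocator (ZFS batches writes into transaction groups and uses copy-on-write, but its real space allocation is far more involved); the function names, the batch size, and the placement of each batch are all assumptions for illustration. It counts how many head repositionings 10,000 scattered block updates cost when rewritten in place versus buffered in RAM and written out contiguously.

```python
import random


def count_seeks(physical_addrs):
    """Count head repositionings: every write not directly after the previous one."""
    seeks, prev = 0, None
    for addr in physical_addrs:
        if prev is None or addr != prev + 1:
            seeks += 1
        prev = addr
    return seeks


def in_place_writes(updates):
    """Update-in-place: logical block N is always rewritten at physical address N."""
    return list(updates)


def cow_writes(updates, batch_size, base=2_000_000):
    """Toy copy-on-write allocator: buffer `batch_size` updates in RAM, then
    write the batch to one contiguous free region. Returns the physical
    addresses in write order and the logical->physical block map."""
    written, block_map = [], {}
    for i in range(0, len(updates), batch_size):
        # Assumption: each batch lands in its own free region, spaced apart
        # from the previous one, so every batch costs exactly one seek.
        addr = base + 2 * i
        for logical in updates[i:i + batch_size]:
            block_map[logical] = addr  # newest copy wins; the old block becomes free
            written.append(addr)
            addr += 1
    return written, block_map


rng = random.Random(1)
updates = [rng.randrange(1_000_000) for _ in range(10_000)]  # scattered "random" updates
cow, block_map = cow_writes(updates, batch_size=1_000)
print("update-in-place seeks:", count_seeks(in_place_writes(updates)))
print("copy-on-write seeks:  ", count_seeks(cow))
```

The bigger the batch you can hold in RAM, the fewer seeks per update. The price is that logically adjacent blocks can end up scattered on disk, which can make later sequential reads slower: one more example of trading one thing for another.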

ZFS isn't magic. It just trades off one thing for another.