I don't understand why the read speed is slow for this pool.

TrueNAS is running as a VM with 4 cores and 32 GB of memory, and an Intel 10G NIC assigned to it via PCIe passthrough.

All 4 disks in the pool below are Samsung PM981 NVMe SSDs, installed on an x16 Gen3 adapter and assigned to TrueNAS via PCIe passthrough.

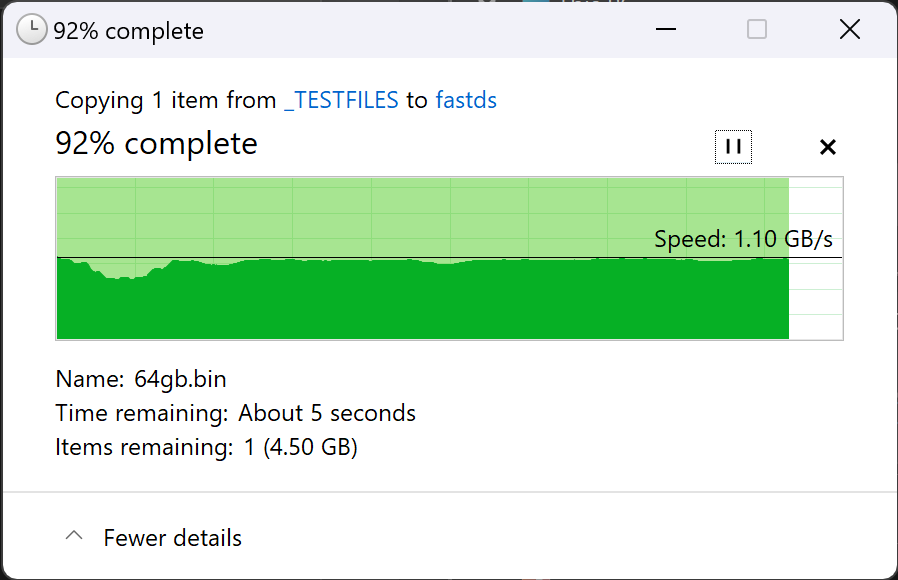
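To put the hardware in perspective, here is a rough calculation of the link ceilings involved. It is a sketch under assumptions that are not stated above: the x16 adapter bifurcates into x4 per drive, and protocol overhead (TCP/IP, SMB, NVMe) is ignored, so the numbers are upper bounds rather than expected throughput. PCIe 3.0 signals at 8 GT/s per lane with 128b/130b encoding.

```python
# Back-of-envelope link ceilings for the setup described above.
# Assumptions (not from the post): the x16 adapter bifurcates to x4 per drive,
# and protocol overhead (TCP/IP, SMB, NVMe) is ignored, so these are upper bounds.

GEN3_GT_PER_LANE = 8.0      # PCIe 3.0 signaling rate, GT/s per lane
GEN3_ENCODING = 128 / 130   # PCIe 3.0 uses 128b/130b line encoding


def pcie_gen3_gbytes_per_s(lanes: int) -> float:
    """Usable PCIe 3.0 bandwidth in GB/s (decimal) for the given lane count."""
    gbits = GEN3_GT_PER_LANE * GEN3_ENCODING * lanes
    return gbits / 8


def ethernet_gbytes_per_s(gbit: float) -> float:
    """Raw Ethernet line rate converted from Gbit/s to GB/s."""
    return gbit / 8


per_drive = pcie_gen3_gbytes_per_s(4)
adapter_total = pcie_gen3_gbytes_per_s(16)
nic = ethernet_gbytes_per_s(10)
observed_read = 0.4  # ~400 MB/s, as reported in the post

print(f"PCIe 3.0 x4 per drive : {per_drive:.2f} GB/s")      # 3.94 GB/s
print(f"PCIe 3.0 x16 adapter  : {adapter_total:.2f} GB/s")  # 15.75 GB/s
print(f"10GbE line rate       : {nic:.2f} GB/s")            # 1.25 GB/s
print(f"observed read / NIC   : {observed_read / nic:.0%}")  # 32%
```

On those assumptions, each drive's link (~3.9 GB/s) and the 10GbE line rate (1.25 GB/s) are both well above ~400 MB/s, which is only about a third of what the NIC could carry.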

This is the write from my Windows PC to TrueNAS. They are on the same VLAN and connected to the same switch. The file (64gb.bin) is 64 GB. I have dirty data max set to ~10 GB, and each sync always flushes ~2 GB in about 1 second. No write throttling or delay is happening, so, I think, that is why the write speed is stable.

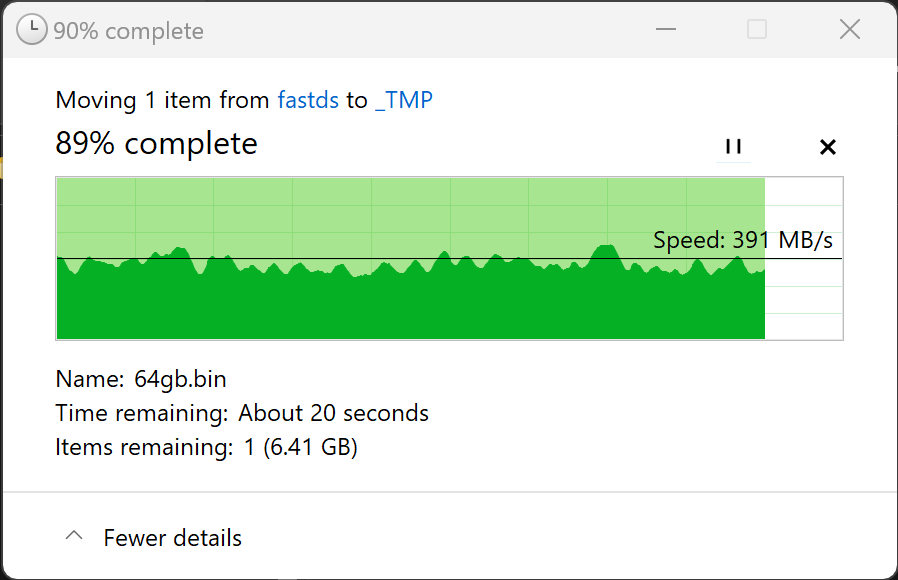
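The write-side reasoning can be checked with simple arithmetic. This is a simplified steady-state model, not how ZFS actually schedules transaction groups: it assumes the client sends at most the 10GbE line rate and the pool keeps draining at the observed ~2 GB per second. If ingest never exceeds the drain rate, dirty data cannot accumulate toward the cap. (OpenZFS starts delaying writes before the cap is reached, at `zfs_delay_min_dirty_percent`, 60% of `zfs_dirty_data_max` by default, but the conclusion is the same as long as the pool keeps up.)

```python
import math

# Simplified steady-state model using the numbers from the post.
# Assumption: ingest is capped at the 10GbE line rate (no protocol overhead),
# and the pool drains continuously at the observed sync rate.

DIRTY_DATA_MAX_GB = 10.0   # "dirty data max ~10GB"
SYNC_RATE_GB_S = 2.0       # "syncs ~2GB in ~1 seconds"
INGEST_RATE_GB_S = 1.25    # 10 Gbit/s line rate


def seconds_until_full(dirty_max: float, ingest: float, drain: float) -> float:
    """Time until dirty data reaches dirty_max, or infinity if the drain keeps up."""
    net_growth = ingest - drain
    if net_growth <= 0:
        return math.inf
    return dirty_max / net_growth


t = seconds_until_full(DIRTY_DATA_MAX_GB, INGEST_RATE_GB_S, SYNC_RATE_GB_S)
print(f"net dirty growth: {INGEST_RATE_GB_S - SYNC_RATE_GB_S:+.2f} GB/s")
if math.isinf(t):
    print("dirty data limit never reached")
else:
    print(f"limit reached after {t:.1f} s")
```

With these inputs the dirty data shrinks by about 0.75 GB/s, so the limit is never approached.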

This is the read. I also tried copying right after a reboot, and it is the same.

I also tried this with a 16 GB file, which I believe fits entirely in ARC. On a second copy arcstat shows 0 misses, yet the read performance is still the same, ~400 MB/s.
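One way to tell whether the ~400 MB/s ceiling comes from the pool/ARC or from the network/SMB path is to read the same file locally on TrueNAS and compare. This is a minimal sketch: it reads a file sequentially in 1 MiB chunks and reports MB/s. The script name `readtest.py` and the path `/mnt/fast/64gb.bin` are assumptions (TrueNAS normally mounts pools under `/mnt`), so adjust them to wherever the file actually lives. Python's own overhead means it may understate the true rate; treat the result as a lower bound, not an exact figure.

```python
import sys
import time

CHUNK = 1024 * 1024  # 1 MiB per read call


def sequential_read_rate(path: str, chunk_size: int = CHUNK) -> tuple[int, float]:
    """Read the whole file sequentially; return (bytes_read, MB/s)."""
    view = memoryview(bytearray(chunk_size))  # reuse one buffer for every read
    total = 0
    start = time.perf_counter()
    with open(path, "rb", buffering=0) as f:  # unbuffered: no extra copy layer
        while True:
            n = f.readinto(view)
            if not n:  # 0 bytes means end of file
                break
            total += n
    elapsed = time.perf_counter() - start
    rate = total / elapsed / 1e6 if elapsed > 0 else float("inf")
    return total, rate


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage (hypothetical path): python3 readtest.py /mnt/fast/64gb.bin
    size, mbps = sequential_read_rate(sys.argv[1])
    print(f"read {size / 1e9:.1f} GB at {mbps:.0f} MB/s")
```

Running it twice on the 16 GB file should make the second pass come from ARC. If the local rate is far above ~400 MB/s, the bottleneck is more likely on the network/SMB side than in the pool.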

```
root@tnas[~]# zpool status fast
  pool: fast
 state: ONLINE
config:

        NAME                                            STATE     READ WRITE CKSUM
        fast                                            ONLINE       0     0     0
          mirror-0                                      ONLINE       0     0     0
            gptid/db594e70-a209-11ed-8822-649d99b180d2  ONLINE       0     0     0
            gptid/db58ad97-a209-11ed-8822-649d99b180d2  ONLINE       0     0     0
          mirror-1                                      ONLINE       0     0     0
            gptid/db5a6840-a209-11ed-8822-649d99b180d2  ONLINE       0     0     0
            gptid/db56fd03-a209-11ed-8822-649d99b180d2  ONLINE       0     0     0

errors: No known data errors
```
