The units have many identical components:

8x 7200rpm HGST UltraStar HD (tested & 'known to be good')

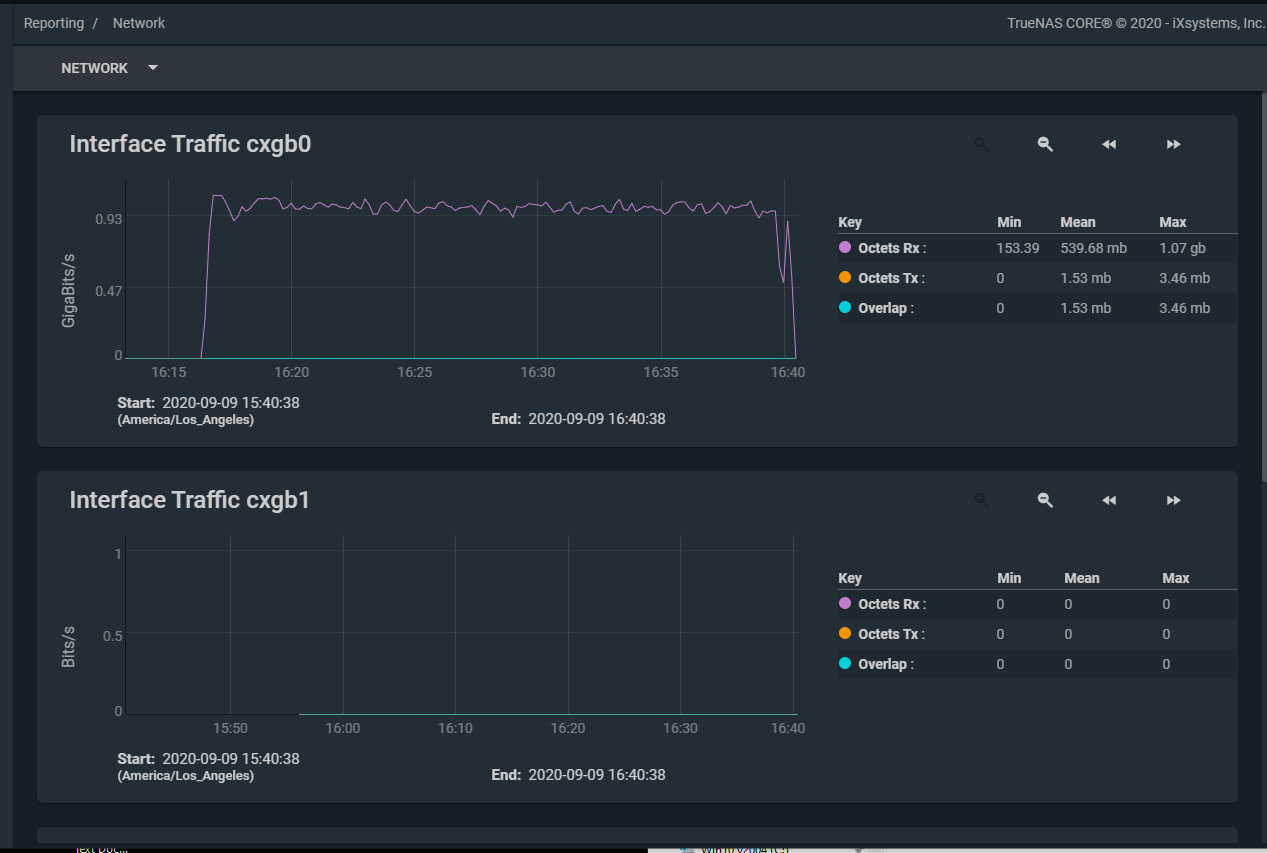

The same exact model Chelsio 10GbE SFP+ NIC.

The same RAIDz2 array configuration.

There's no extra hardware 'accelerating' the Dell T320's performance (no L2ARC, SLOG, etc.)

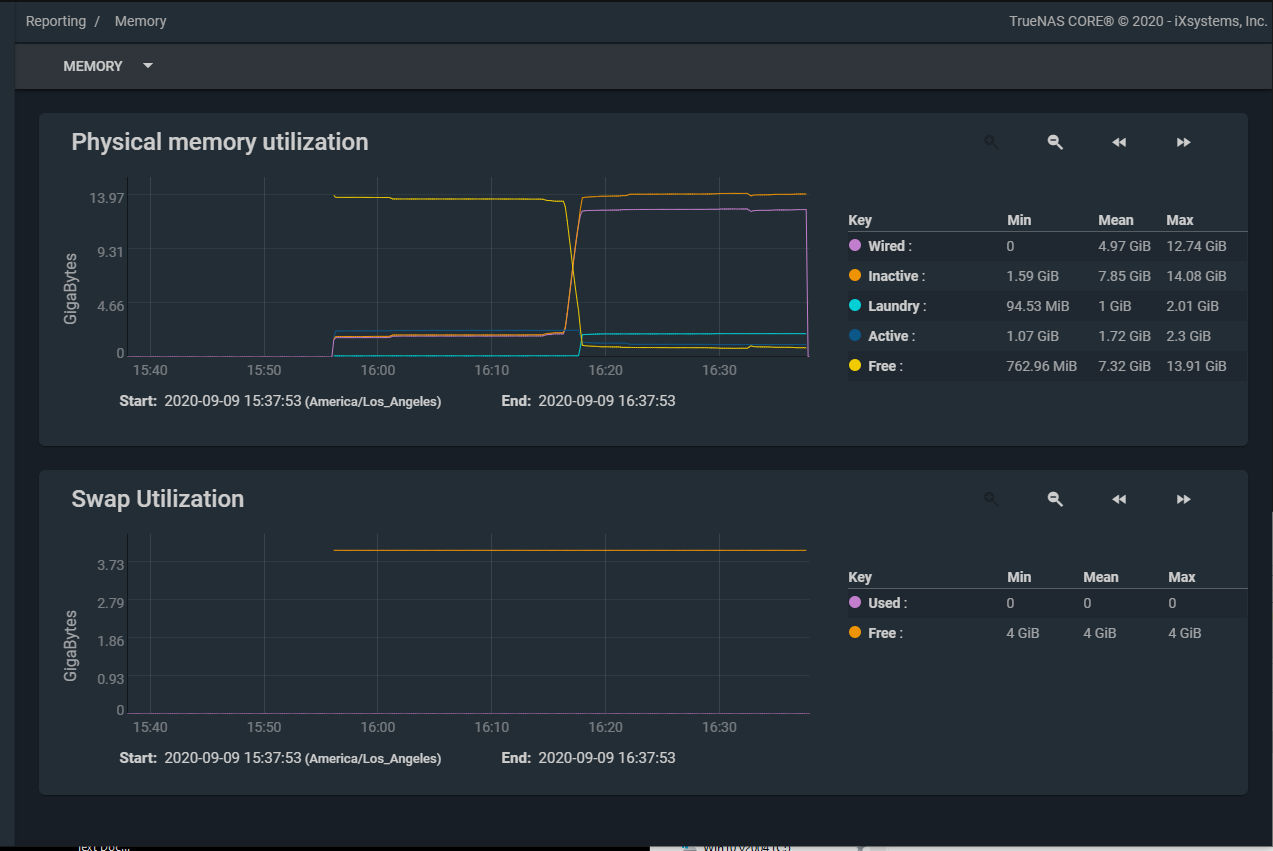

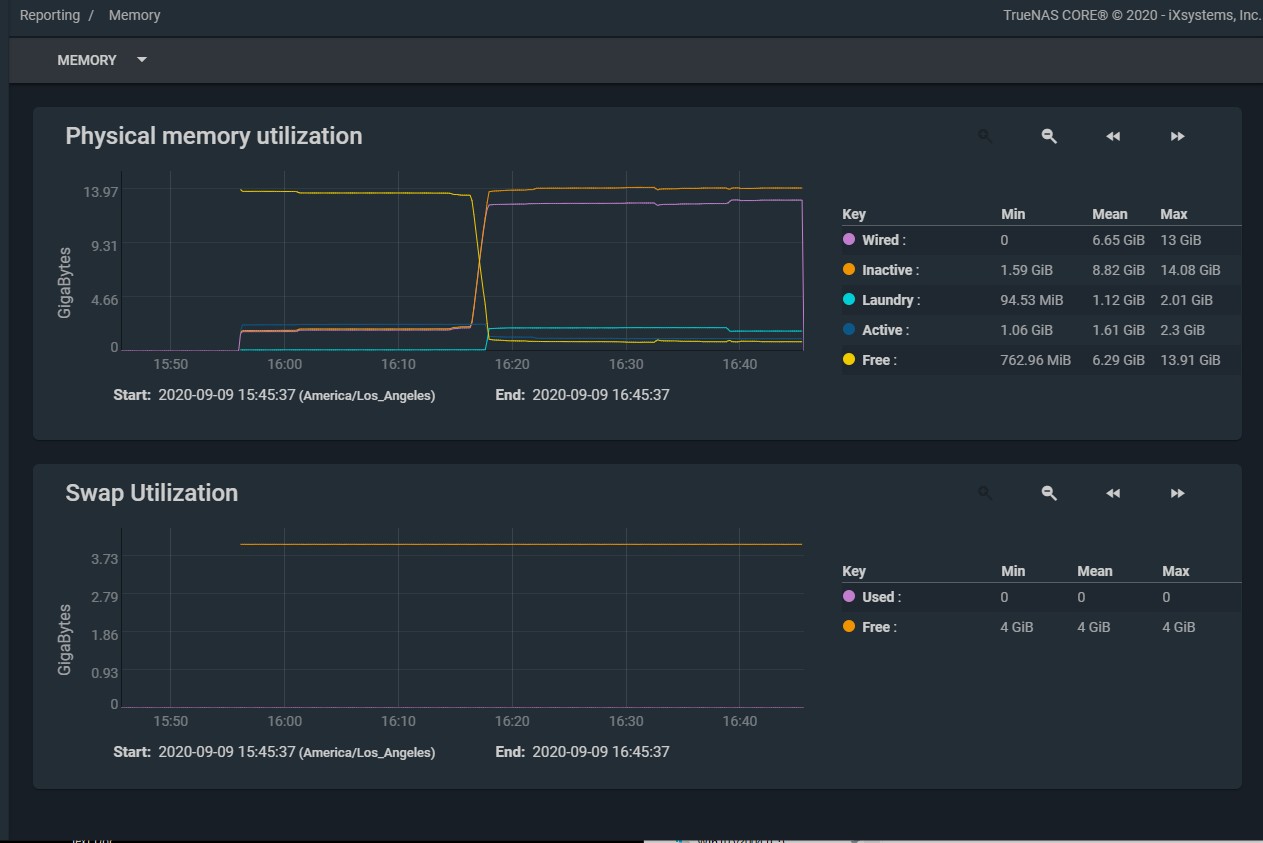

| Write Perf. | Computer | CPU | Cores @ Clock | RAM | NIC | HDDs | HBA / Pool (same config) | Source Data |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 350 - 650 MB/s | Dell T320 | 1x E5-2403 | 4c @ 1.80 GHz | 32GB DDR3 | Chelsio 10GbE SFP+ | 8x HGST HUS 7.2K | HP SAS H220 in RAIDz2 | Same Data |
| 120 - 200 MB/s | MacPro 3,1 | 2x X5355 | 8c @ 2.66 GHz | 16GB DDR2 | Chelsio 10GbE SFP+ | 8x HGST HUS 7.2K | HP SAS H220 in RAIDz2 | Same Data |

Given the specs & performance numbers above ... what explains the Mac Pro's slow write speeds?

Components which are identical:

UltraStar HDs, HP H220 HBA, Chelsio SFP+ NIC, RAIDz2, identical source data from identical SSD.

Variables:

| System | RAM (type -- amount) | PCIe Gen | CPU Model |
| --- | --- | --- | --- |
| Mac Pro 3,1 | DDR2 -- 16GB | PCIe 2.0 (HBA = x16) | X5355 |
| Dell T320 | DDR3 -- 32GB | PCIe 3.0 | E5-2403 |

Does it make sense to you guys that the Mac Pro should be this much slower?

Or could there be another cause? Would increasing the RAM potentially make a difference? (It seems irrelevant to me.)

Has a DDR2 machine never exceeded 200MB/s in FreeNAS?

Has an X5355 never exceeded 200MB/s in FreeNAS? If clock speed is the "all important" aspect of the CPU ... sure, it's 2 generations older ... but it's also clocked faster.

Does RAM actually even affect the write speed (especially on an empty array)?

Synthetic benchmarking of the Mac Pro with these same 8 HGST UltraStars (under OS X) yielded ~700MB/s write // ~800MB/s read.
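In case it helps narrow things down: a rough sketch of how a local sequential write could be timed directly on the pool, which would take the NIC and the client machine out of the picture. This is only an illustration, not the tool that produced the OS X figures above. The function name, block size, and any path passed to it are my own placeholders.

```python
import os
import time


def time_sequential_write(path, total_bytes, block_size=1024 * 1024):
    """Write total_bytes to path in block_size chunks; return throughput in MB/s."""
    # Random data, so that compression on the dataset (if enabled)
    # cannot shrink the writes and inflate the result.
    block = os.urandom(block_size)
    written = 0
    start = time.perf_counter()
    with open(path, "wb") as f:
        while written < total_bytes:
            chunk = block[: min(block_size, total_bytes - written)]
            f.write(chunk)
            written += len(chunk)
        f.flush()
        os.fsync(f.fileno())  # count the time needed to push data out of OS buffers
    elapsed = time.perf_counter() - start
    return written / elapsed / 1e6  # bytes/s -> MB/s (decimal megabytes)
```

Calling it with a file on the pool and a size well above the installed RAM (for example `time_sequential_write("/mnt/<pool>/testfile.bin", 64 * 1024**3)`, where the path is hypothetical) should give a number that is less flattered by caching. If the local figure on the Mac Pro is also stuck around 200MB/s, the network side is probably not the bottleneck.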

Thanks...
