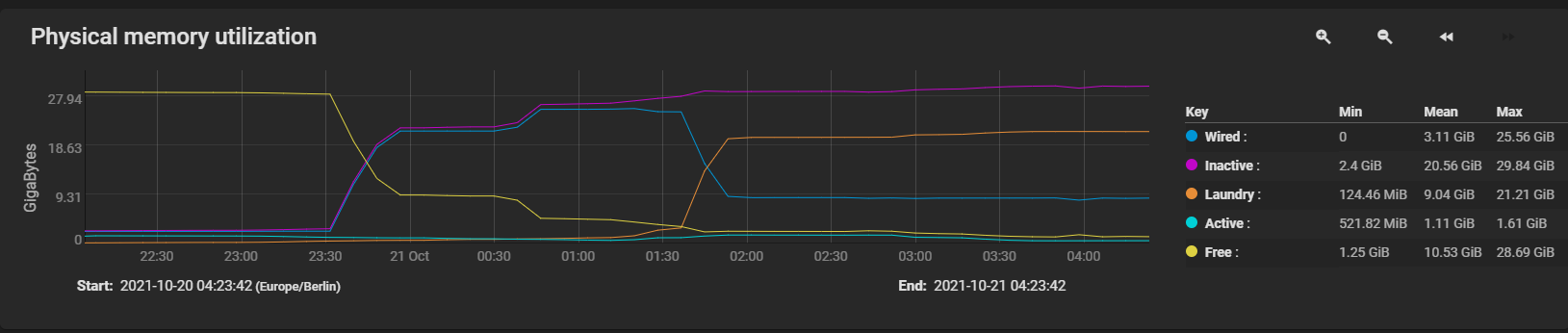

For months I've been seeing services use way too much RAM. Sometimes usage looks normal, then it climbs so high that the ARC is forced to shrink to its minimum, and after some hours it drops back to normal values.

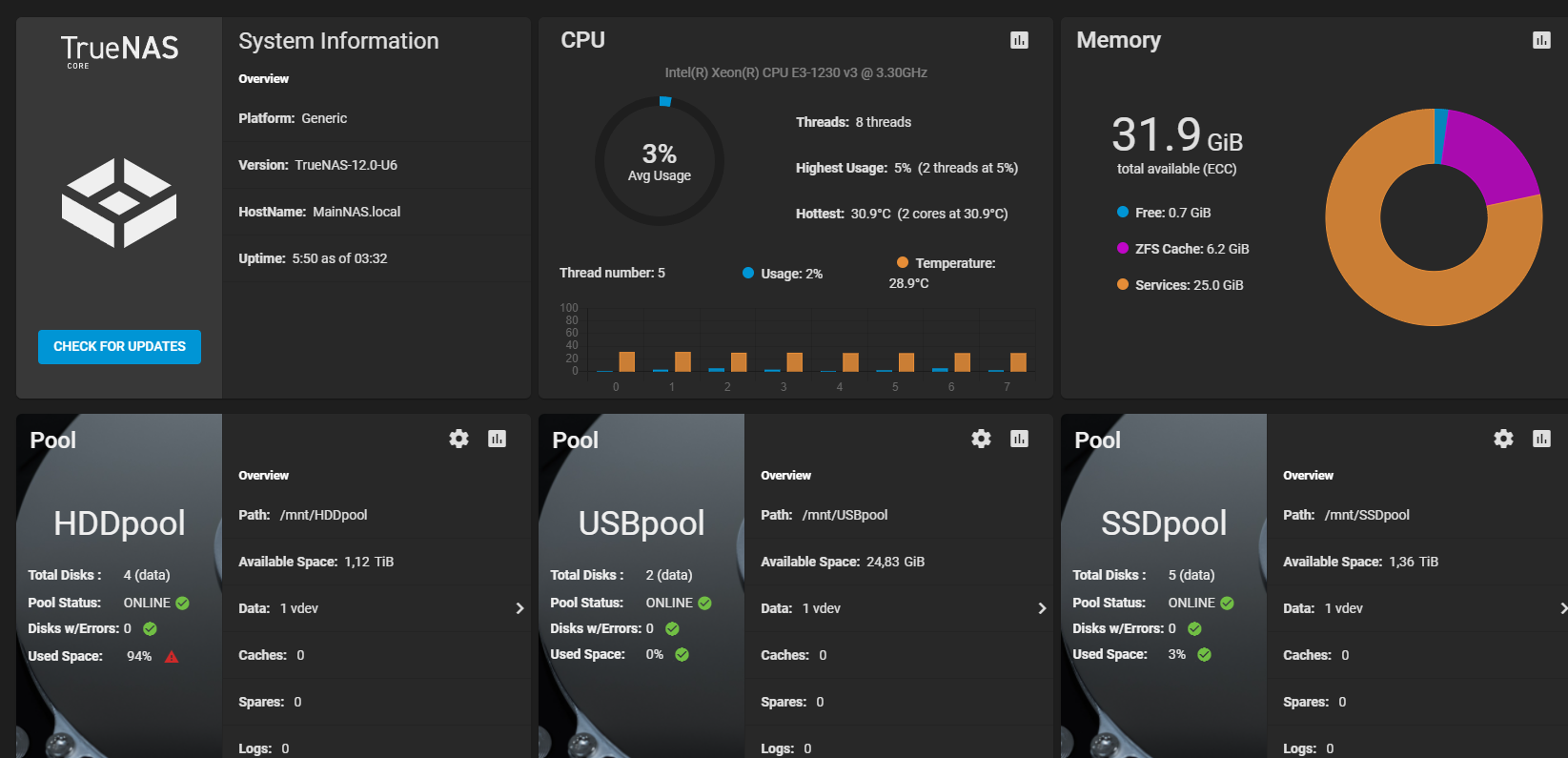

It looks like this:

I tried leaving non-critical services stopped to see whether one of them is leaking memory, but the problem is still there. Right now I'm down to running only SSH, SMB, SMART, UPS and SNMP. No VMs, jails or plugins are running. I would normally start 3 VMs using 3 + 1 + 0.5 GB of RAM, but bhyve won't let me start them because I'm out of memory...

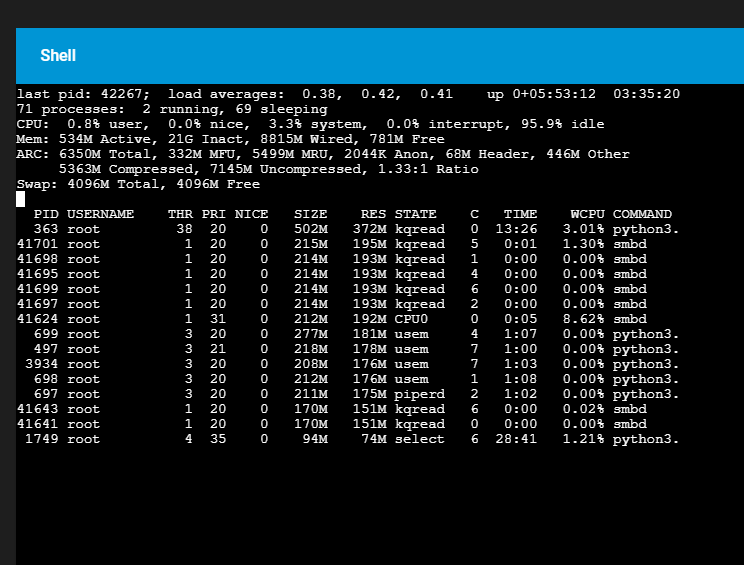

I don't know how to find out what is causing this. Processes shown by top don't use all that RAM:

This time most of the RAM is "Inact", but I have also seen nearly all of the RAM shown as "Wired".

Any ideas?

Edit:

Also, for about a week now I've been getting the "Getting started" popup every time I log in. Shouldn't that only show up on the first visit after installing TrueNAS?
