Emerson Heiderich · Cadet · Joined: Nov 17, 2021 · Messages: 2

Hello guys!

First, I apologize for my English; I'm using Google Translate...

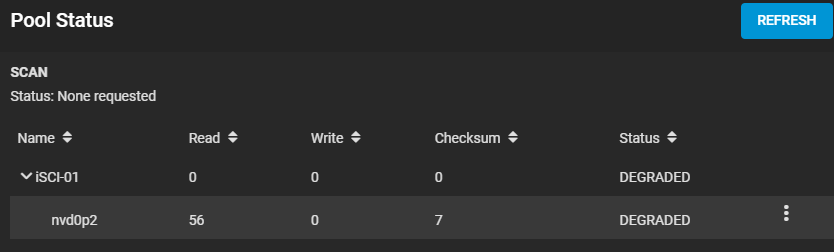

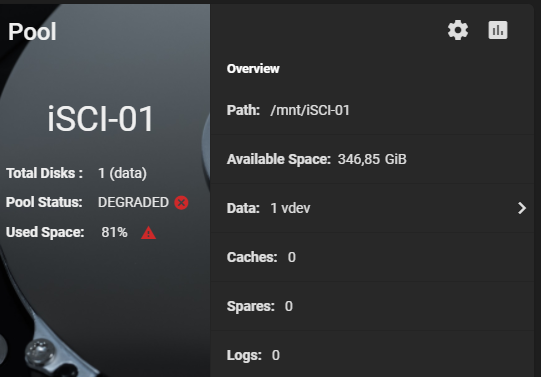

Well, let's get to the point. Unfortunately, within 30 days, two of my NVMe disks have become degraded and are showing read errors in S.M.A.R.T.

I'm trying to use this 2TB XPG SX8200 Pro disk in an iSCSI pool to serve as storage for my XCP-ng hypervisor. The first disk I installed in the server worked for 24 hours; after that, TrueNAS started reporting read errors, and they increased exponentially until the disk became degraded.

I sent it in for RMA and it was replaced.

After receiving the replacement disk (same model), I installed it in the server, created a new pool, and redid all the settings. I migrated the VMs from my virtualization server to the new storage, and it worked perfectly for 16 days. Performance was always surprisingly fast and I didn't notice any problems during those 16 days, but unfortunately the read errors have now returned in the S.M.A.R.T. report and the disk is showing as DEGRADED.

I have another XPG SX8200 Pro 2TB NVMe disk in a file storage pool that is shared via SMB. According to S.M.A.R.T., this disk has already logged almost 200 TB of reads without a single read error.
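For comparing read totals like the 200 TB above, it may help to know how S.M.A.R.T. counts them. The NVMe specification defines "Data Units Read/Written" in thousands of 512-byte units (rounded up), so each reported unit is roughly 512,000 bytes. A minimal sketch of that conversion, using the counter from the failing disk's report; the function names are hypothetical:

```python
# NVMe SMART/Health log (page 0x02): "Data Units Read/Written" are reported
# in thousands of 512-byte units, so one unit = 512,000 bytes (approximate,
# since the spec says the counter is rounded up).
BYTES_PER_DATA_UNIT = 1000 * 512


def data_units_to_bytes(units: int) -> int:
    """Convert a raw Data Units Read/Written counter to bytes."""
    return units * BYTES_PER_DATA_UNIT


def data_units_to_tb(units: int) -> float:
    """Convert a raw counter to decimal terabytes (10**12 bytes)."""
    return data_units_to_bytes(units) / 10**12


if __name__ == "__main__":
    # "Data Units Read: 1,431,131 [732 GB]" from the smartctl report of /dev/nvme0.
    read_units = 1_431_131
    print(f"{data_units_to_bytes(read_units):,} bytes")  # 732,739,072,000 bytes
    print(f"{data_units_to_tb(read_units):.3f} TB")       # 0.733 TB
    # Approximate counter value corresponding to 200 TB of reads.
    print(f"{200 * 10**12 // BYTES_PER_DATA_UNIT:,} units for 200 TB")  # 390,625,000 units for 200 TB
```

This matches the "[732 GB]" that smartctl prints next to the raw counter.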

Could some configuration setting, or the way I'm using the disk, be causing this problem? This worries me a lot!

Apologies again for my English. I'm not a TrueNAS expert, so if I've left out any information, let me know and I'll reply promptly with whatever is needed.

Thanks in advance!

NOTE: My server's hardware details are listed in my signature.

Code:

```text
Warning: settings changed through the CLI are not written to the configuration database and will be reset on reboot.
root@srv-truenas[~]# smartctl -a /dev/nvme0
smartctl 7.2 2020-12-30 r5155 [FreeBSD 12.2-RELEASE-p10 amd64] (local build)
Copyright (C) 2002-20, Bruce Allen, Christian Franke, www.smartmontools.org

=== START OF INFORMATION SECTION ===
Model Number:                       ADATA SX8200PNP
Serial Number:                      2L232L215DHA
Firmware Version:                   32B3T8EA
PCI Vendor/Subsystem ID:            0x1cc1
IEEE OUI Identifier:                0x000000
Controller ID:                      1
NVMe Version:                       1.3
Number of Namespaces:               1
Namespace 1 Size/Capacity:          2,048,408,248,320 [2.04 TB]
Namespace 1 Utilization:            483,562,520,576 [483 GB]
Namespace 1 Formatted LBA Size:     512
Local Time is:                      Wed Nov 17 11:21:55 2021 -03
Firmware Updates (0x14):            2 Slots, no Reset required
Optional Admin Commands (0x0017):   Security Format Frmw_DL Self_Test
Optional NVM Commands (0x005f):     Comp Wr_Unc DS_Mngmt Wr_Zero Sav/Sel_Feat Timestmp
Log Page Attributes (0x0f):         S/H_per_NS Cmd_Eff_Lg Ext_Get_Lg Telmtry_Lg
Maximum Data Transfer Size:         64 Pages
Warning Comp. Temp. Threshold:      75 Celsius
Critical Comp. Temp. Threshold:     80 Celsius

Supported Power States
St Op     Max   Active     Idle   RL RT WL WT  Ent_Lat  Ex_Lat
 0 +     9.00W       -        -    0  0  0  0        0       0
 1 +     4.60W       -        -    1  1  1  1        0       0
 2 +     3.80W       -        -    2  2  2  2        0       0
 3 -   0.0450W       -        -    3  3  3  3     2000    2000
 4 -   0.0040W       -        -    4  4  4  4    15000   15000

Supported LBA Sizes (NSID 0x1)
Id Fmt  Data  Metadt  Rel_Perf
 0 +     512       0         0

=== START OF SMART DATA SECTION ===
SMART overall-health self-assessment test result: PASSED

SMART/Health Information (NVMe Log 0x02)
Critical Warning:                   0x00
Temperature:                        28 Celsius
Available Spare:                    100%
Available Spare Threshold:          10%
Percentage Used:                    0%
Data Units Read:                    1,431,131 [732 GB]
Data Units Written:                 2,264,670 [1.15 TB]
Host Read Commands:                 30,428,610
Host Write Commands:                36,614,674
Controller Busy Time:               372
Power Cycles:                       8
Power On Hours:                     386
Unsafe Shutdowns:                   2
Media and Data Integrity Errors:    15
Error Information Log Entries:      15
Warning Comp. Temperature Time:     0
Critical Comp. Temperature Time:    0

Error Information (NVMe Log 0x01, 16 of 256 entries)
Num   ErrCount  SQId   CmdId  Status  PELoc          LBA  NSID    VS
  0         15     3  0x007a  0x4502      -     75715128     1     -
  1         14     4  0x0079  0x4502      -    308649200     1     -
  2         13     1  0x007e  0x4502      -    206409088     1     -
  3         12     1  0x0079  0x4502      -    206408560     1     -
  4         11     2  0x007f  0x4502      -    206408592     1     -
  5         10     2  0x0079  0x4502      -    206408560     1     -
  6          9     2  0x007f  0x4502      -    206618592     1     -
  7          8     3  0x007b  0x4502      -     71897672     1     -
  8          7     2  0x007f  0x4502      -    308210496     1     -
```
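Every entry in the error log above has the same Status value, 0x4502. As a hedged sketch, assuming smartctl prints the raw 16-bit Status Field of the NVMe Error Information log entry (phase tag in bit 0, Status Code in bits 8:1, Status Code Type in bits 11:9, More in bit 14, Do Not Retry in bit 15, per the NVMe specification), the value can be decoded as below. The helper names (`decode_status`, `describe`, `lba_to_byte_offset`) are hypothetical, and the lookup tables cover only a handful of codes:

```python
from typing import NamedTuple

# Partial lookup tables; not a complete list of NVMe status codes.
SCT_NAMES = {
    0: "Generic Command Status",
    1: "Command Specific Status",
    2: "Media and Data Integrity Errors",
}
MEDIA_SC_NAMES = {  # meanings valid only when SCT == 2
    0x80: "Write Fault",
    0x81: "Unrecovered Read Error",
}


class NvmeStatus(NamedTuple):
    sct: int    # Status Code Type
    sc: int     # Status Code
    more: bool  # More: extra info available in the Error Information log
    dnr: bool   # Do Not Retry


def decode_status(raw: int) -> NvmeStatus:
    """Split a raw 16-bit status value (phase tag in bit 0) into its fields."""
    return NvmeStatus(
        sct=(raw >> 9) & 0x7,
        sc=(raw >> 1) & 0xFF,
        more=bool(raw & (1 << 14)),
        dnr=bool(raw & (1 << 15)),
    )


def describe(raw: int) -> str:
    """Human-readable SCT / SC pair for a raw status value."""
    st = decode_status(raw)
    sct_name = SCT_NAMES.get(st.sct, f"SCT {st.sct:#x}")
    sc_name = f"SC {st.sc:#04x}"
    if st.sct == 2:  # SC meanings depend on the status code type
        sc_name = MEDIA_SC_NAMES.get(st.sc, sc_name)
    return f"{sct_name} / {sc_name}"


def lba_to_byte_offset(lba: int, lba_size: int = 512) -> int:
    """Byte offset on the namespace; 512 matches "Formatted LBA Size: 512"."""
    return lba * lba_size


if __name__ == "__main__":
    print(describe(0x4502))  # Media and Data Integrity Errors / Unrecovered Read Error
    # A few LBAs from the error log rows, as byte offsets on the namespace.
    for lba in (75715128, 308649200, 206409088, 206408560):
        print(f"LBA {lba:>10} -> byte offset {lba_to_byte_offset(lba):,}")
```

Under that assumption, 0x4502 is Status Code Type 2 with Status Code 0x81, an unrecovered read error reported by the drive itself, which lines up with the "Media and Data Integrity Errors: 15" counter in the health log.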