altano (Dabbler, joined Jun 6, 2021, 12 messages)

I have this error during replication:

Full log:

The snapshot

It seems like the recursive replication of

How can I work around this bad assumption, short of deleting all snapshots that predate the new dataset?

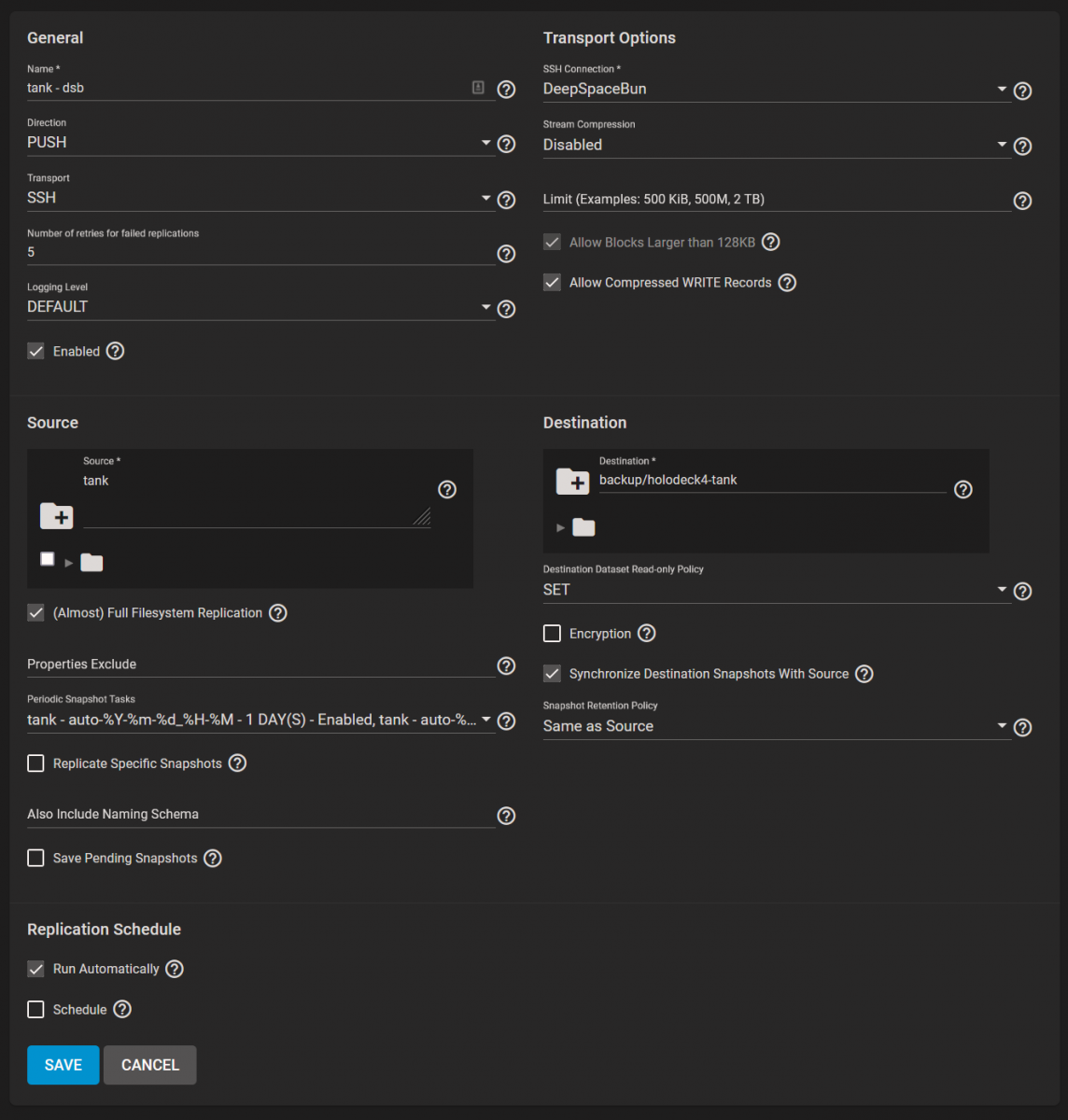

This is my replication config:

cannot send tank@auto-2021-08-08_00-00 recursively: snapshot tank/root/seafile@auto-2021-08-08_00-00 does not exist

Full log:

Code:

[2021/08/18 14:00:00] INFO [Thread-79] [zettarepl.paramiko.replication_task__task_1] Connected (version 2.0, client OpenSSH_8.4-hpn14v15)

[2021/08/18 14:00:00] INFO [Thread-79] [zettarepl.paramiko.replication_task__task_1] Authentication (publickey) successful!

[2021/08/18 14:00:01] INFO [replication_task__task_1] [zettarepl.replication.run] For replication task 'task_1': doing push from 'tank' to 'backup/holodeck4-tank' of snapshot='auto-2021-08-08_00-00' incremental_base='auto-2021-08-01_00-00' receive_resume_token=None encryption=False

[2021/08/18 14:00:02] WARNING [replication_task__task_1] [zettarepl.replication.run] For task 'task_1' at attempt 1 recoverable replication error RecoverableReplicationError("cannot send tank@auto-2021-08-08_00-00 recursively: snapshot tank/root/seafile@auto-2021-08-08_00-00 does not exist\nwarning: cannot send 'tank@auto-2021-08-08_00-00': backup failed\ncannot receive: failed to read from stream")

The snapshot tank/root/seafile@auto-2021-08-08_00-00 does NOT exist because the seafile dataset was created on 8/11, after the 8/8 date for which the system expects to find a snapshot.

It seems like the recursive replication of tank@auto-2021-08-08_00-00 is blindly expecting all CURRENTLY EXISTING datasets to have snapshots going back in time to my first top-level pool snapshot, which isn't a correct assumption (replication would never work whenever there are new datasets).

How can I work around this bad assumption, short of deleting all snapshots that predate the new dataset?
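To see which datasets would trip the recursive send for a given snapshot name, a rough check like the one below might help. It is only a sketch: the function name is made up for illustration, and the dataset and snapshot lists are hand-written stand-ins for what `zfs list -H -o name -r tank` and `zfs list -H -o name -t snapshot -r tank` would print. The `auto-2021-08-15_00-00` snapshot on seafile is an assumption, not something taken from the log.

```python
def datasets_missing_snapshot(datasets, snapshots, root, snap_name):
    """Return datasets at or below `root` that have no `<dataset>@<snap_name>`."""
    existing = set(snapshots)
    # Keep only the root dataset and its descendants.
    in_tree = [d for d in datasets if d == root or d.startswith(root + "/")]
    return sorted(d for d in in_tree if f"{d}@{snap_name}" not in existing)


# Illustrative listings (assumed, not copied from the real pool).
datasets = ["tank", "tank/root", "tank/root/seafile"]
snapshots = [
    "tank@auto-2021-08-01_00-00",
    "tank@auto-2021-08-08_00-00",
    "tank/root@auto-2021-08-01_00-00",
    "tank/root@auto-2021-08-08_00-00",
    "tank/root/seafile@auto-2021-08-15_00-00",  # hypothetical later snapshot
]

missing = datasets_missing_snapshot(
    datasets, snapshots, "tank", "auto-2021-08-08_00-00"
)
print(missing)  # ['tank/root/seafile']
```

With these inputs, only the dataset created after 8/8 is reported, which matches the dataset named in the error.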

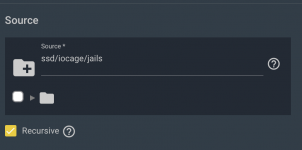

This is my replication config: