pchangover

Cadet

- Joined: Nov 29, 2022
- Messages: 5

My TrueNAS box is set up on an old IBM Cloud Object Storage Slicestor 3448 with a Xeon E5-2603 v3, 128 GB ECC RAM, dual SAS9300-8i controllers, and a pool made of a single 12-drive RAIDZ2 vdev. Currently there are 10 Easystore WD white-label 12 TB drives, one 18 TB white-label drive that I had to use to replace a bad 12 TB drive, and the single 20 TB WD Red Pro drive I just installed.

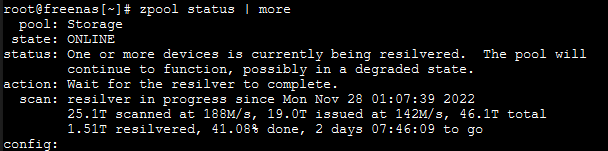

When I put the new drive in on Monday morning, I didn't expect the replacement to take this long, and apparently I have 2 more days to go! My plan to upgrade each drive individually simply won't work if every swap takes this long. Any ideas what's going on? Part of me feels like I should go ahead and build my replacement NAS now, put the 20 TB drives in there, and just move the data over, but this all feels broken to me.
