hadmanysons

Cadet

- Joined: Jan 15, 2019
- Messages: 6

I'm not sure what's going on, but some places report the old size of the pool and some the new. I started by creating this thread on Reddit and got no help there: https://old.reddit.com/r/freenas/comments/af92uq/autoexpand_kind_of_not_working_after_replacing/

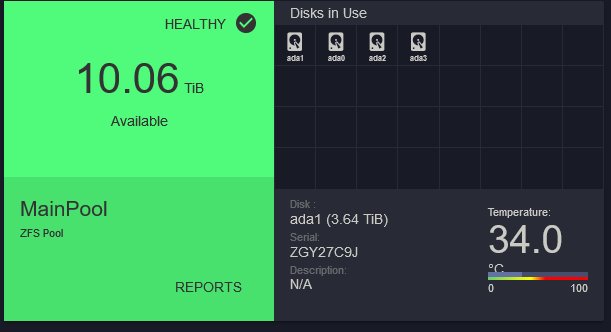

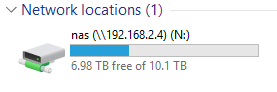

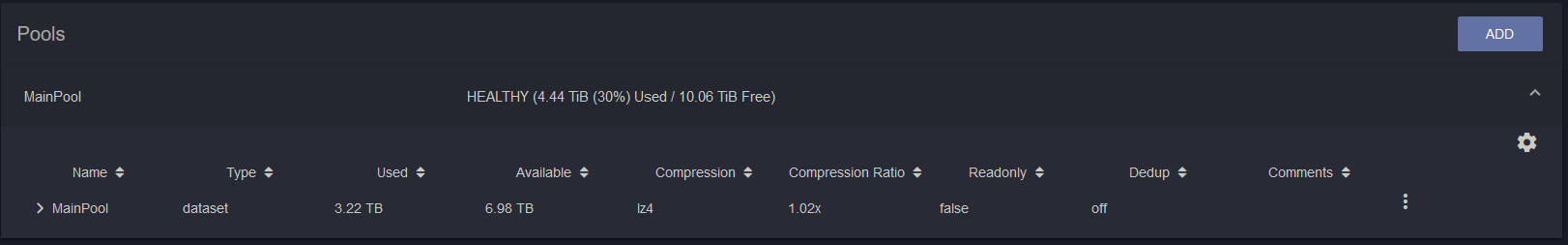

Basically, the dashboard shows the correct amount that should be free now, 10TB, but if I go into Storage->Pools, it only shows 7TB free (the old drives' value). Additionally, my Windows share (Samba) only shows 7TB as well.

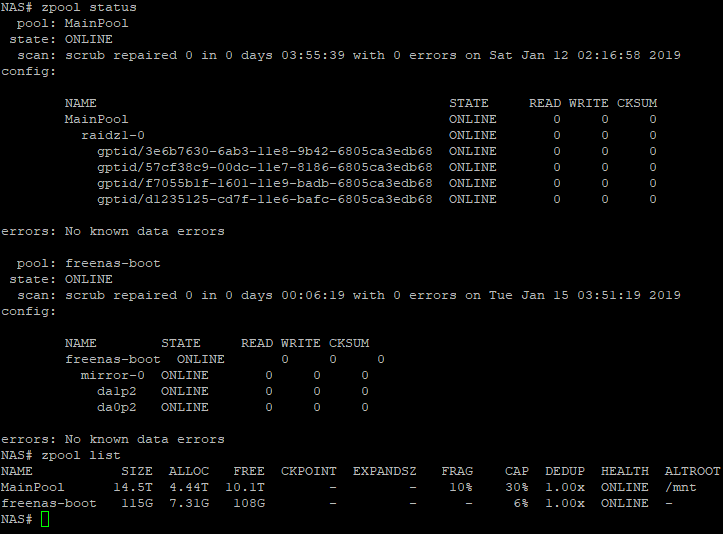


Furthermore, "zpool list" shows 10TB free, for a total of 14.5TB available. Output of "geom label list" shows that all 4 drives are reporting as 3.6T. I'm kind of at a loss here.

Code:

    NAS# geom label list
    Geom name: ada0p2
    Providers:
    1. Name: gptid/57cf38c9-00dc-11e7-8186-6805ca3edb68
       Mediasize: 3998639460352 (3.6T)
       Sectorsize: 512
       Stripesize: 4096
       Stripeoffset: 0
       Mode: r1w1e1
       secoffset: 0
       offset: 0
       seclength: 7809842696
       length: 3998639460352
       index: 0
    Consumers:
    1. Name: ada0p2
       Mediasize: 3998639460352 (3.6T)
       Sectorsize: 512
       Stripesize: 4096
       Stripeoffset: 0
       Mode: r1w1e2

    Geom name: ada1p2
    Providers:
    1. Name: gptid/3e6b7630-6ab3-11e8-9b42-6805ca3edb68
       Mediasize: 3998639460352 (3.6T)
       Sectorsize: 512
       Stripesize: 4096
       Stripeoffset: 0
       Mode: r1w1e1
       secoffset: 0
       offset: 0
       seclength: 7809842696
       length: 3998639460352
       index: 0
    Consumers:
    1. Name: ada1p2
       Mediasize: 3998639460352 (3.6T)
       Sectorsize: 512
       Stripesize: 4096
       Stripeoffset: 0
       Mode: r1w1e2

    Geom name: ada2p2
    Providers:
    1. Name: gptid/f7055b1f-1601-11e9-badb-6805ca3edb68
       Mediasize: 3998639460352 (3.6T)
       Sectorsize: 512
       Stripesize: 4096
       Stripeoffset: 0
       Mode: r1w1e1
       secoffset: 0
       offset: 0
       seclength: 7809842696
       length: 3998639460352
       index: 0
    Consumers:
    1. Name: ada2p2
       Mediasize: 3998639460352 (3.6T)
       Sectorsize: 512
       Stripesize: 4096
       Stripeoffset: 0
       Mode: r1w1e2

    Geom name: ada3p2
    Providers:
    1. Name: gptid/d1235125-cd7f-11e6-bafc-6805ca3edb68
       Mediasize: 3998639460352 (3.6T)
       Sectorsize: 512
       Stripesize: 4096
       Stripeoffset: 0
       Mode: r1w1e1
       secoffset: 0
       offset: 0
       seclength: 7809842696
       length: 3998639460352
       index: 0
    Consumers:
    1. Name: ada3p2
       Mediasize: 3998639460352 (3.6T)
       Sectorsize: 512
       Stripesize: 4096
       Stripeoffset: 0
       Mode: r1w1e2

    Geom name: da0p1
    Providers:
    1. Name: gptid/8ec19678-d3ee-11e6-b47a-6805ca3edb68
       Mediasize: 524288 (512K)
       Sectorsize: 512
       Stripesize: 0
       Stripeoffset: 17408
       Mode: r0w0e0
       secoffset: 0
       offset: 0
       seclength: 1024
       length: 524288
       index: 0
    Consumers:
    1. Name: da0p1
       Mediasize: 524288 (512K)
       Sectorsize: 512
       Stripesize: 0
       Stripeoffset: 17408
       Mode: r0w0e0

    Geom name: da1p1
    Providers:
    1. Name: gptid/b65ae93d-fde8-11e5-8719-0800274b59b4
       Mediasize: 524288 (512K)
       Sectorsize: 512
       Stripesize: 0
       Stripeoffset: 17408
       Mode: r0w0e0
       secoffset: 0
       offset: 0
       seclength: 1024
       length: 524288
       index: 0
    Consumers:
    1. Name: da1p1
       Mediasize: 524288 (512K)
       Sectorsize: 512
       Stripesize: 0
       Stripeoffset: 17408
       Mode: r0w0e0
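As a sanity check on the figures above, here is a minimal Python sketch that converts the `Mediasize` reported by "geom label list" into binary terabytes and multiplies it by the four data partitions. The constant and function names are illustrative only. It assumes the sizes shown by "zpool list" are in binary units and count raw capacity across all four partitions, which this thread has not confirmed for this pool's layout.

```python
# Values copied from the `geom label list` output for ada0p2 through ada3p2.
MEDIASIZE_BYTES = 3998639460352   # "Mediasize" / "length" field
SECLENGTH = 7809842696            # "seclength" field (sectors)
SECTORSIZE = 512                  # "Sectorsize" field (bytes)
DRIVE_COUNT = 4                   # ada0p2, ada1p2, ada2p2, ada3p2

TIB = 1024 ** 4                   # bytes in one binary terabyte (TiB)


def to_tib(num_bytes: int) -> float:
    """Convert a byte count to TiB."""
    return num_bytes / TIB


# The sector count times the sector size should reproduce Mediasize exactly.
assert SECLENGTH * SECTORSIZE == MEDIASIZE_BYTES

per_drive_tib = to_tib(MEDIASIZE_BYTES)
raw_total_tib = to_tib(MEDIASIZE_BYTES * DRIVE_COUNT)

print(f"per partition: {per_drive_tib:.2f} TiB")
print(f"raw total for {DRIVE_COUNT} partitions: {raw_total_tib:.2f} TiB")
```

Under those assumptions, each partition comes out at roughly 3.6 and the four together at roughly 14.5, which lines up with the 3.6T per drive and the 14.5TB total quoted above. That suggests the partitions themselves are at the new size, but it does not by itself explain why Storage->Pools and the Samba share still report the old figure.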

I've gone through about 10 of the seemingly relevant results that pop up when I search the forum for "autoexpand", and nothing has worked so far.

I verified autoexpand was on before replacing the last drive.

Things I've tried:

- Restarted the box
- Set autoexpand off and then on, and restarted the box
- Ran "zpool online -e MainDrive <guid>" on all four hard drives and restarted

Is this a GUI/Samba bug, or did my pool not actually autoexpand?

Thanks in advance. I've tried to post everything that I thought could be relevant to the issue. I've been using FreeNAS for about 3 years, although this is my first time asking about it on this forum. And yes, I've RTFM on everything that I thought would be relevant; my apologies in advance if I missed something.