Hi,

I recently built a TrueNAS system purely to serve NFS mounts to a Proxmox cluster.

During my testing, I noticed that when I perform storage migrations from Ceph inside Proxmox to the NFS mount (a write-intensive operation), TrueNAS starts to report port flapping. What makes this interesting is that the separate physical uplinks go to different storage networks that are not connected to each other, which is why I suspect the issue is specific to the TrueNAS system itself. The issue occurs on both the Intel 1GbE uplinks and the Mellanox 40GbE uplinks.

I see the Python core dump issues are being tracked via:

The disktemp.py Python errors show up around the same time as the flapping under heavy writes.
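To see how closely the two events line up, here is a small Python sketch that computes the offset between the disktemp.py traceback and each flapping burst. The timestamps are copied by hand from the log excerpt in this post; syslog lines carry no year, so 2021 is assumed from the collectd ISO timestamp.

```python
from datetime import datetime

# Timestamps copied by hand from the log excerpt in this post.
# Assumption: year 2021, taken from the collectd ISO timestamp.
FMT = "%Y-%m-%d %H:%M:%S"
FLAP_BURSTS = [
    datetime.strptime("2021-03-16 08:10:53", FMT),  # first "possible flapping" burst
    datetime.strptime("2021-03-16 08:15:46", FMT),  # second burst
]
TRACEBACK_AT = datetime.strptime("2021-03-16 08:12:41", FMT)  # disktemp.py socket.timeout


def offsets_seconds(event, bursts):
    """Signed offset in seconds from each burst start to the event
    (positive means the event came after the burst)."""
    return [int((event - b).total_seconds()) for b in bursts]


if __name__ == "__main__":
    for burst, delta in zip(FLAP_BURSTS, offsets_seconds(TRACEBACK_AT, FLAP_BURSTS)):
        print(f"burst at {burst:%H:%M:%S}: traceback {delta:+d} s")
```

With these timestamps the offsets are +108 s and -185 s, so in this excerpt the traceback falls roughly two minutes after the first burst and three minutes before the second, rather than exactly on either one.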

System Specs:

Version: TrueNAS-12.0-U2.1

Chassis: Dell PowerEdge R730xd + Dell MD1400 JBOD

CPU: E5-2660 v3

Memory: 256 GB ECC DDR4

Controller:

- PERC H800 for JBOD

- PERC H710 for OS

- Part No. P31H2 for NVMe Read/Write Cache

Network:

- 2x Mellanox ConnectX-3 Pro dual-port 40GbE (trunked, with jumbo frames)

- 1x Intel LOM i350 + x510
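The log lines in this post report interfaces that "stopped DISTRIBUTING", which suggests the laggs are running LACP. As general background (not specific to this system), DISTRIBUTING is one of the eight flags in the LACP actor/partner state octet defined in IEEE 802.1AX. The Python sketch below decodes such an octet into flag names, which may help when comparing against the LACP state reported on the switch side. The example values are hypothetical, and each switch vendor displays this state in its own format.

```python
from typing import List

# LACP actor/partner state octet, bit 0 (LSB) to bit 7, per IEEE 802.1AX.
LACP_STATE_BITS = [
    (0x01, "LACP_ACTIVITY"),    # 1 = active LACP, 0 = passive
    (0x02, "LACP_TIMEOUT"),     # 1 = short (fast) timeout, 0 = long timeout
    (0x04, "AGGREGATION"),      # 1 = link may be aggregated, 0 = individual
    (0x08, "SYNCHRONIZATION"),  # 1 = link is attached to the correct aggregator
    (0x10, "COLLECTING"),       # 1 = receiving frames on this link is enabled
    (0x20, "DISTRIBUTING"),     # 1 = sending frames on this link is enabled
    (0x40, "DEFAULTED"),        # 1 = using default partner info (no LACPDUs received)
    (0x80, "EXPIRED"),          # 1 = receive state machine is in EXPIRED
]


def decode_lacp_state(state: int) -> List[str]:
    """Return the names of the flags set in an 8-bit LACP state value."""
    if not 0 <= state <= 0xFF:
        raise ValueError(f"LACP state must fit in one octet, got {state!r}")
    return [name for bit, name in LACP_STATE_BITS if state & bit]


if __name__ == "__main__":
    # Hypothetical example values, not taken from this system.
    print(decode_lacp_state(0x3D))  # aggregated, collecting and distributing, long timeout
    print(decode_lacp_state(0x0D))  # in sync but neither collecting nor distributing
```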

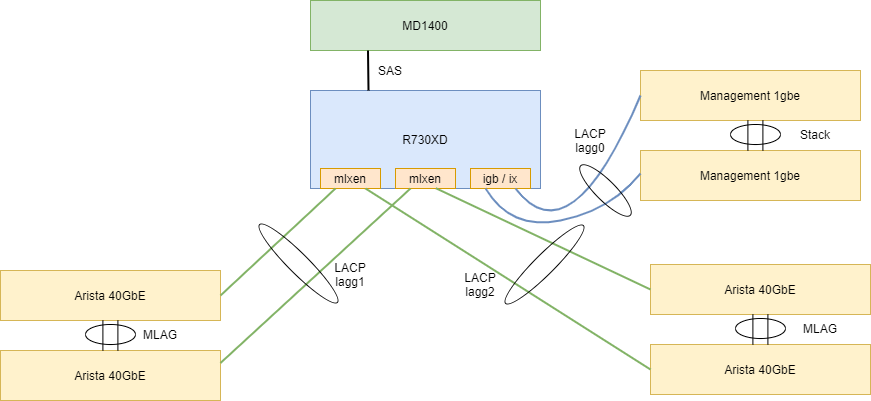

Physical Topology:

I found some previous threads describing similar cases on older versions of FreeNAS, but they are not quite the same as the issue I have observed. Has anyone else come across this, or can anyone offer some advice?

If there is any information I can collect to help narrow down the issue, I would be happy to provide it.

Thank you very much.


Here are the relevant log entries:

    Mar 16 08:10:53 srvstor1 igb0: Interface stopped DISTRIBUTING, possible flapping
    Mar 16 08:10:53 srvstor1 mlxen1: Interface stopped DISTRIBUTING, possible flapping
    Mar 16 08:10:53 srvstor1 mlxen3: Interface stopped DISTRIBUTING, possible flapping
    Mar 16 08:10:54 srvstor1 mlxen2: Interface stopped DISTRIBUTING, possible flapping
    Mar 16 08:10:54 srvstor1 kernel: lagg1: link state changed to DOWN
    Mar 16 08:10:54 srvstor1 kernel: vlan202: link state changed to DOWN
    Mar 16 08:10:55 srvstor1 mlxen0: Interface stopped DISTRIBUTING, possible flapping
    Mar 16 08:10:55 srvstor1 kernel: lagg2: link state changed to DOWN
    Mar 16 08:10:55 srvstor1 kernel: vlan207: link state changed to DOWN
    Mar 16 08:11:09 srvstor1 kernel: lagg2: link state changed to UP
    Mar 16 08:11:09 srvstor1 kernel: lagg1: link state changed to UP
    Mar 16 08:11:09 srvstor1 kernel: vlan207: link state changed to UP
    Mar 16 08:11:09 srvstor1 kernel: vlan202: link state changed to UP
    Mar 16 08:12:41 srvstor1 1 2021-03-16T08:12:41.884359+09:00 srvstor1.mujin.co.jp collectd 4837 - - Traceback (most recent call last):
      File "/usr/local/lib/collectd_pyplugins/disktemp.py", line 62, in read
        with Client() as c:
      File "/usr/local/lib/python3.8/site-packages/middlewared/client/client.py", line 281, in __init__
        self._ws.connect()
      File "/usr/local/lib/python3.8/site-packages/middlewared/client/client.py", line 124, in connect
        rv = super(WSClient, self).connect()
      File "/usr/local/lib/python3.8/site-packages/ws4py/client/__init__.py", line 223, in connect
        bytes = self.sock.recv(128)
    socket.timeout: timed out
    Mar 16 08:15:46 srvstor1 igb0: Interface stopped DISTRIBUTING, possible flapping
    Mar 16 08:15:46 srvstor1 mlxen1: Interface stopped DISTRIBUTING, possible flapping
    Mar 16 08:15:46 srvstor1 mlxen0: Interface stopped DISTRIBUTING, possible flapping
    Mar 16 08:15:46 srvstor1 mlxen3: Interface stopped DISTRIBUTING, possible flapping
    Mar 16 08:15:46 srvstor1 kernel: lagg2: link state changed to DOWN
    Mar 16 08:15:46 srvstor1 mlxen2: Interface stopped DISTRIBUTING, possible flapping
    Mar 16 08:15:46 srvstor1 kernel: vlan207: link state changed to DOWN
    Mar 16 08:15:46 srvstor1 kernel: lagg1: link state changed to DOWN
    Mar 16 08:15:46 srvstor1 kernel: vlan202: link state changed to DOWN
    Mar 16 08:15:47 srvstor1 kernel: lagg1: link state changed to UP
    Mar 16 08:15:47 srvstor1 kernel: vlan202: link state changed to UP
    Mar 16 08:15:47 srvstor1 kernel: lagg2: link state changed to UP
    Mar 16 08:15:47 srvstor1 kernel: vlan207: link state changed to UP
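To quantify how long each lagg and VLAN stayed down, here is a small Python sketch that pairs the kernel "link state changed to DOWN/UP" messages from the log above and computes the outage per interface. It is a hypothetical helper, not a TrueNAS tool; it assumes the year is 2021 (syslog lines omit it) and only sees one-second resolution, so durations are accurate to about ±1 s.

```python
import re
from datetime import datetime

# Kernel link-state lines copied from the log excerpt above. The first
# "possible flapping" line is kept to show that non-kernel lines are skipped.
LOG = """\
Mar 16 08:10:53 srvstor1 igb0: Interface stopped DISTRIBUTING, possible flapping
Mar 16 08:10:54 srvstor1 kernel: lagg1: link state changed to DOWN
Mar 16 08:10:54 srvstor1 kernel: vlan202: link state changed to DOWN
Mar 16 08:10:55 srvstor1 kernel: lagg2: link state changed to DOWN
Mar 16 08:10:55 srvstor1 kernel: vlan207: link state changed to DOWN
Mar 16 08:11:09 srvstor1 kernel: lagg2: link state changed to UP
Mar 16 08:11:09 srvstor1 kernel: lagg1: link state changed to UP
Mar 16 08:11:09 srvstor1 kernel: vlan207: link state changed to UP
Mar 16 08:11:09 srvstor1 kernel: vlan202: link state changed to UP
Mar 16 08:15:46 srvstor1 kernel: lagg2: link state changed to DOWN
Mar 16 08:15:46 srvstor1 kernel: vlan207: link state changed to DOWN
Mar 16 08:15:46 srvstor1 kernel: lagg1: link state changed to DOWN
Mar 16 08:15:46 srvstor1 kernel: vlan202: link state changed to DOWN
Mar 16 08:15:47 srvstor1 kernel: lagg1: link state changed to UP
Mar 16 08:15:47 srvstor1 kernel: vlan202: link state changed to UP
Mar 16 08:15:47 srvstor1 kernel: lagg2: link state changed to UP
Mar 16 08:15:47 srvstor1 kernel: vlan207: link state changed to UP
"""

# syslog timestamp, host name, "kernel:", interface name, new link state
LINK_RE = re.compile(
    r"^(?P<ts>\w{3} +\d+ \d\d:\d\d:\d\d) \S+ kernel: "
    r"(?P<iface>\S+): link state changed to (?P<state>UP|DOWN)$"
)


def outages(lines, year=2021):
    """Pair each DOWN with the next UP on the same interface.

    Returns (interface, down_time, seconds_down) tuples in the order the
    UP messages appear. The year is an assumption passed in by the caller.
    """
    down_since = {}
    result = []
    for line in lines:
        m = LINK_RE.match(line.strip())
        if m is None:
            continue  # not a kernel link-state message
        ts = datetime.strptime(f"{year} {m['ts']}", "%Y %b %d %H:%M:%S")
        iface, state = m["iface"], m["state"]
        if state == "DOWN":
            down_since[iface] = ts
        elif iface in down_since:
            start = down_since.pop(iface)
            result.append((iface, start, int((ts - start).total_seconds())))
    return result


if __name__ == "__main__":
    for iface, start, secs in outages(LOG.splitlines()):
        print(f"{iface}: down at {start:%H:%M:%S} for {secs} s")
```

On this excerpt, the first event kept lagg1, lagg2 and their VLANs down for about 14 to 15 s, while the second lasted about 1 s.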
