exwhywhyzee

Dabbler

- Joined: Sep 13, 2021
- Messages: 30

I honestly don't know what has happened. I rebooted my TrueNAS VM after migrating it from one datastore to another.

I noticed that my Win2k22 file server wasn't connecting to the iSCSI share, and when I checked to see what was going on, I found the console flooded with these messages:

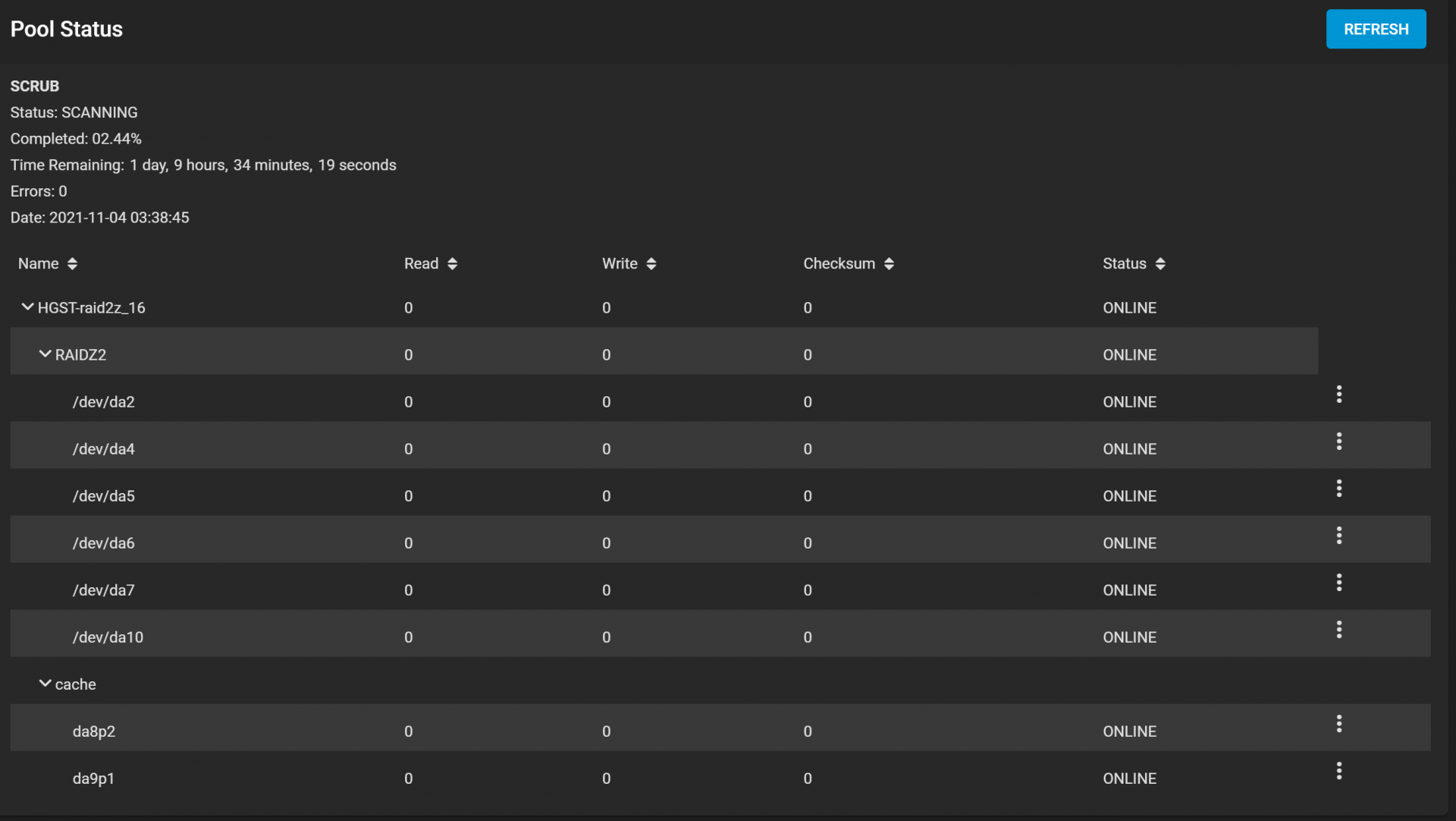

I also noticed something really peculiar: the pool is showing 100% used space, which makes absolutely no sense to me. The pool is 14TB, and only about 1.5TB-2TB ought to be used. I have no idea where that 13.5-14TB came from.

I see two CRITICAL error messages:

CRITICAL

Failed to check for alert HasUpdate: Traceback (most recent call last):
  File "/usr/local/lib/python3.9/site-packages/middlewared/plugins/alert.py", line 740, in __run_source
    alerts = (await alert_source.check()) or []
  File "/usr/local/lib/python3.9/site-packages/middlewared/alert/base.py", line 211, in check
    return await self.middleware.run_in_thread(self.check_sync)
  File "/usr/local/lib/python3.9/site-packages/middlewared/utils/run_in_thread.py", line 10, in run_in_thread
    return await self.loop.run_in_executor(self.run_in_thread_executor, functools.partial(method, *args, **kwargs))
  File "/usr/local/lib/python3.9/concurrent/futures/thread.py", line 52, in run
    result = self.fn(*self.args, **self.kwargs)
  File "/usr/local/lib/python3.9/site-packages/middlewared/alert/source/update.py", line 67, in check_sync
    path = self.middleware.call_sync("update.get_update_location")
  File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1272, in call_sync
    return self.run_coroutine(methodobj(*prepared_call.args))
  File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1312, in run_coroutine
    return fut.result()
  File "/usr/local/lib/python3.9/concurrent/futures/_base.py", line 438, in result
    return self.__get_result()
  File "/usr/local/lib/python3.9/concurrent/futures/_base.py", line 390, in __get_result
    raise self._exception
  File "/usr/local/lib/python3.9/site-packages/middlewared/plugins/update.py", line 412, in get_update_location
    os.chmod(path, 0o755)
OSError: [Errno 28] No space left on device: '/var/db/system/update'
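For reference, Errno 28 in that traceback is `ENOSPC`, so the update check appears to be failing only because the filesystem behind `/var/db/system/update` has no free space left. Below is a minimal Python sketch of how one might confirm that from a shell on the box. The helper names `free_space_report` and `is_out_of_space` are made up for illustration and are not part of middlewared. Note that on ZFS the figures from `shutil.disk_usage` describe the dataset the path lives on, which may not match the pool-level usage shown in the UI.

```python
import errno
import shutil


def free_space_report(path):
    """Return (total, used, free, percent_used) for the filesystem holding path.

    Hypothetical helper for illustration only; sizes are in bytes.
    """
    usage = shutil.disk_usage(path)
    percent_used = 100.0 * usage.used / usage.total if usage.total else 0.0
    return usage.total, usage.used, usage.free, percent_used


def is_out_of_space(exc):
    """True if exc is the 'No space left on device' OSError (Errno 28)."""
    return isinstance(exc, OSError) and exc.errno == errno.ENOSPC


if __name__ == "__main__":
    # "." is a placeholder; on the NAS one would pass the path from the traceback.
    total, used, free, pct = free_space_report(".")
    print(f"total={total} used={used} free={free} used%={pct:.1f}")
```

If the free figure for that path comes back at or near zero, the HasUpdate alert is most likely just a side effect of the full pool rather than a separate problem.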

2021-11-04 03:35:02 (America/Los_Angeles)

CRITICAL

Space usage for pool "HGST-raid2z_16" is 96%. Optimal pool performance requires used space remain below 80%.

2021-11-02 00:45:57 (America/Los_Angeles)