Morikaen (Dabbler; joined Nov 21, 2021; 23 messages)

Hi everyone, and thanks for reading another "pool offline" thread and trying to help.

I've been using TrueNAS for almost a year, and this is the first situation I don't know how to handle.

One of my pools went offline without any warning, and I don't know how to fix it.

Here is my system information:

Platform: Generic

Version: TrueNAS-12.0-U6.1

Installed on a generic AMD PC

I have 3 pools, and this one went offline:

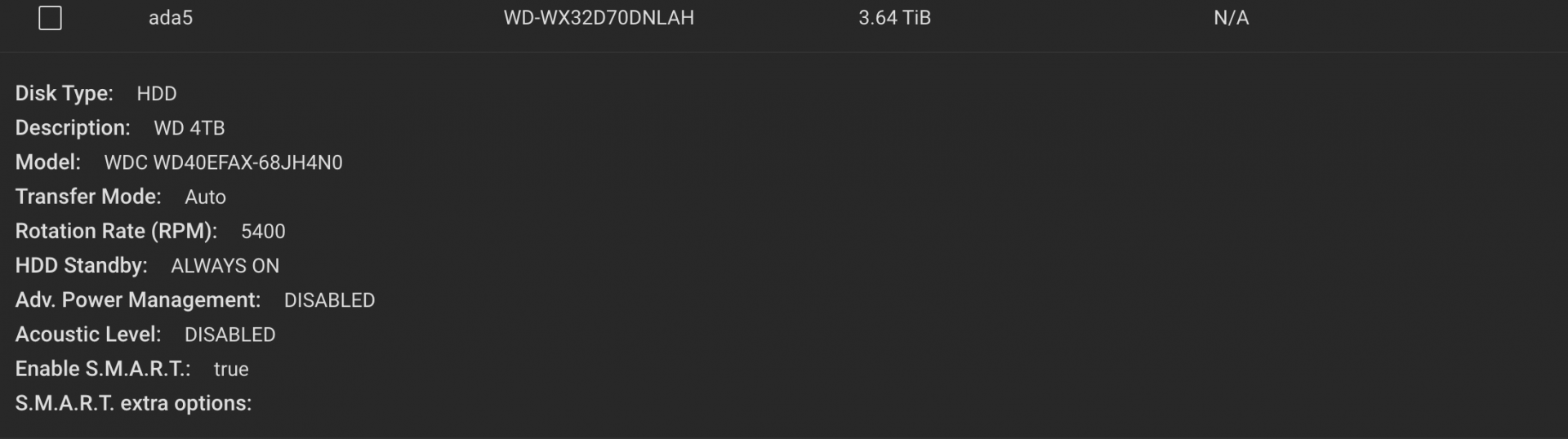

This pool uses this Western Digital disk, whose label strangely doesn't exist any more.

A couple of CLI outputs:

Code:

root@truenas[~]# zpool status -v

pool: SSDPool

state: ONLINE

scan: scrub repaired 0B in 00:01:22 with 0 errors on Sun Nov 21 00:01:22 2021

config:

NAME STATE READ WRITE CKSUM

SSDPool ONLINE 0 0 0

gptid/4fb2d2ec-447c-11eb-86ab-f46d04a297df ONLINE 0 0 0

errors: No known data errors

pool: StripePool

state: ONLINE

scan: scrub repaired 0B in 00:00:03 with 0 errors on Sun Nov 21 00:00:03 2021

config:

NAME STATE READ WRITE CKSUM

StripePool ONLINE 0 0 0

gptid/a88992bb-49d4-11eb-bfc3-f46d04a297df ONLINE 0 0 0

gptid/ac2729d1-49d4-11eb-bfc3-f46d04a297df ONLINE 0 0 0

gptid/ae780bfd-49d4-11eb-bfc3-f46d04a297df ONLINE 0 0 0

errors: No known data errors

pool: boot-pool

state: ONLINE

scan: scrub repaired 0B in 00

As you can see, there is no trace of the missing TrasientPool.

Code:

root@truenas[~]# camcontrol devlist

<ST3500630AS 3.AAK> at scbus0 target 0 lun 0 (pass0,ada0)

<ST9250827AS 3.AAA> at scbus3 target 2 lun 0 (pass1,ada1)

<HTS541080G9SA00 MB4IC60R> at scbus3 target 3 lun 0 (pass2,ada2)

<Port Multiplier 5755197b 000e> at scbus3 target 15 lun 0 (pass3,pmp0)

<KINGSTON SV300S37A120G 521ABBF0> at scbus7 target 0 lun 0 (pass4,ada3)

<SanDisk SDSSDA120G Z32080RL> at scbus8 target 0 lun 0 (pass5,ada4)

<WDC WD40EFAX-68JH4N0 82.00A82> at scbus7 target 0 lun 0 (pass4,ada3)

Any help is greatly appreciated.

Thanks a lot.