Hi all, I'm having an issue I would greatly appreciate help with. First things first:

HARDWARE SETUP:

- Motherboard make and model

Asrock Rack ROMED8-2T

- CPU make and model

AMD EPYC 7282

- RAM quantity

64GB RAM

- Hard drives, quantity, model numbers, and RAID configuration, including boot drives

7x Seagate_IronWolf_ZA2000NM10002-2ZG103 (2TB each). We'll come back to the RAID configuration; I'm not 100% sure what it was.

- Hard disk controllers

LSI 9300-8i

- Network cards

Intel Corporation Ethernet Controller 10G X550T

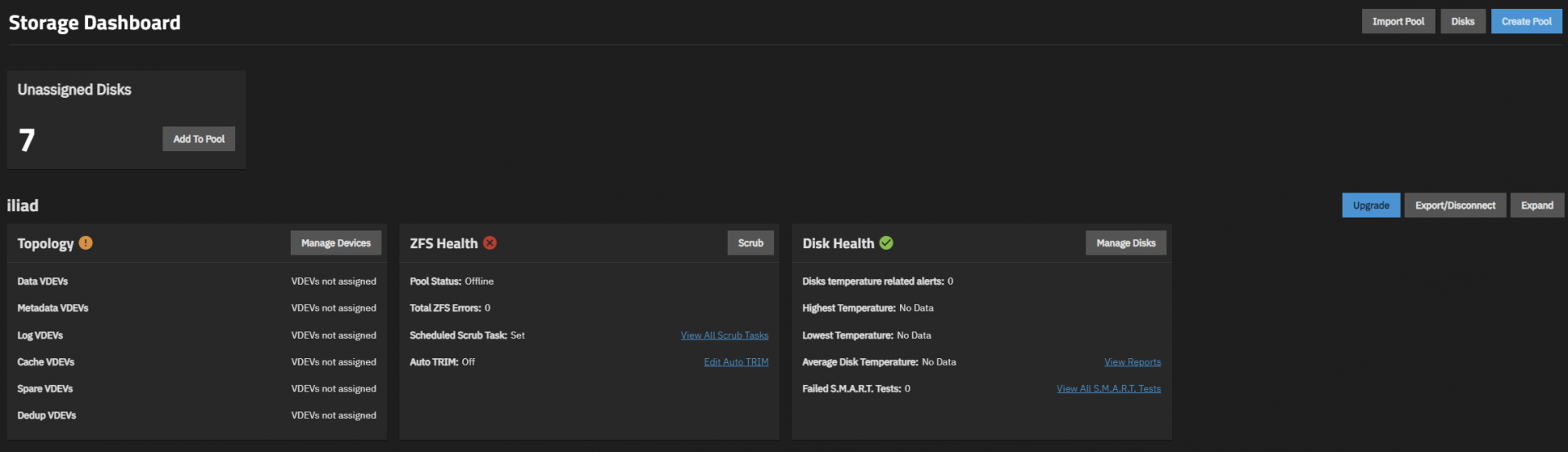

I've been running this config for about 10 months. For the last few months the pool has repeatedly shown as degraded, but I've never lost any data. I have a couple of spares in there, there haven't been any SMART failures, and each scrub brings the pool back to full health, so I've been ignoring it until I could spin up another pool for some testing. I suspect it's related to write errors from a sketchy Portainer instance I'm running, but I can't confirm that. Of course, I never got the chance to test: today I rebooted the machine and found that the pool was not remounted on boot. This is what I'm seeing:

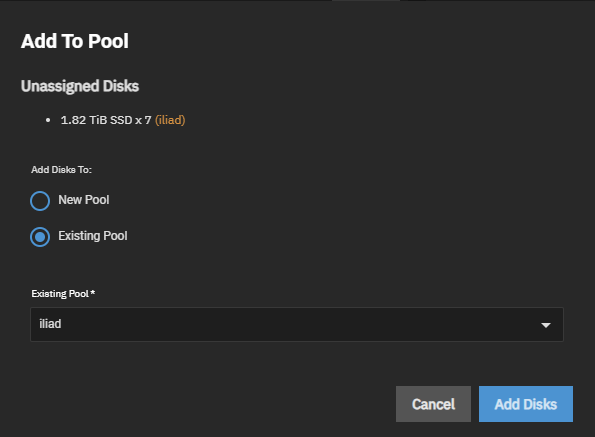

The system knows the disks belong to this pool, but I cannot import it: it's not available in the dropdown in the GUI, and via zpool import I'm getting:

Code:

    # zpool import
       pool: iliad
         id: 7288961483482980890
      state: ONLINE
     action: The pool can be imported using its name or numeric identifier.
     config:

        iliad                                       ONLINE
          raidz1-0                                  ONLINE
            aede0578-5130-4402-8629-70d8a5452253    ONLINE
            508f62dc-9ce6-4016-a2de-33db254537f4    ONLINE
            spare-2                                 ONLINE
              58c92f01-fef9-4b0e-8d21-2eb5677cf696  ONLINE
              d368cb6b-b3a6-4fe9-bbec-9cab8d6a9661  ONLINE
            80dfaa92-bf60-466a-8fd0-5377b96102e5    ONLINE
            spare-4                                 ONLINE
              913800a2-da16-4c57-9c47-73b5a2f94754  ONLINE
              9aa0a10d-bdc4-41f4-9b21-edbdf4df2cb7  ONLINE
        spares
          d368cb6b-b3a6-4fe9-bbec-9cab8d6a9661
          9aa0a10d-bdc4-41f4-9b21-edbdf4df2cb7

    # zpool import iliad
    cannot import 'iliad': I/O error
            Destroy and re-create the pool from
            a backup source.

I've seen other posts where one or more of the drives show as FAULTED, or the pool itself does, but that's not the case here. I've also tried some more forceful import commands from those posts (zpool import -f -F -R /mnt <pool>), using both the pool name and the ID, but I get the same response. Any help would be much appreciated. My #1 hope at this point is just to get the data off these drives. And to anyone out there suggesting I don't know what I'm doing: you're definitely mostly right. I'm still fairly new to TrueNAS and ZFS in general.
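To spell out the forceful attempts mentioned above, here is a rough sketch of the two variants (by name and by numeric ID). The pool name, ID, and /mnt altroot are taken from the output earlier in this post; the variable names, the import_cmd helper, and the RUN switch are illustrative additions, not something copied from a shell history. By default the script only prints the commands; it runs them only when RUN=1 is set.

```shell
#!/bin/sh
# Sketch of the forced import attempts described in the post.
# Dry-run by default: prints each command instead of executing it.

POOL_NAME="iliad"
POOL_ID="7288961483482980890"
ALTROOT="/mnt"   # alternate root passed to -R

# Hypothetical helper: prints the import command for a pool name or ID.
#   -f  force the import even if the pool looks in use elsewhere
#   -F  recovery mode: try discarding the last few transactions
#   -R  import under an alternate root
import_cmd() {
    printf 'zpool import -f -F -R %s %s\n' "$ALTROOT" "$1"
}

for target in "$POOL_NAME" "$POOL_ID"; do
    if [ "${RUN:-0}" = "1" ]; then
        # Really attempt the import (needs root and the zpool utility).
        zpool import -f -F -R "$ALTROOT" "$target"
    else
        import_cmd "$target"
    fi
done
```

In my case both variants end with the same "cannot import 'iliad': I/O error" message shown above.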