Dear friends of this community.

My truenas system is built on:

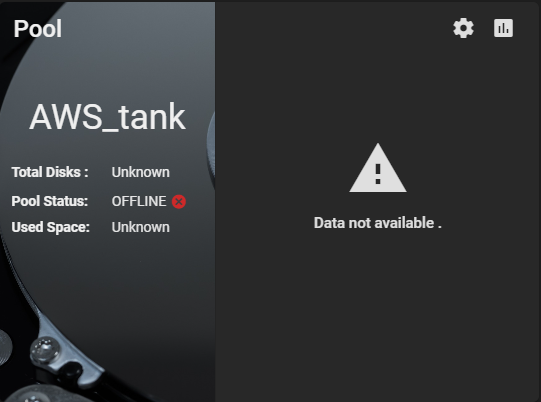

The pool "AWS_tank" is OFFLINE right now and Data is not available.

I replaced the damaged disk for a new one (same brand, model and capacity) and tried some workarounds from other posts with no success.

The output of

The output of

This "gptid/ec9f349f-0e69-11ec-836c-503eaa1fd636 UNAVAIL cannot open" is the damaged disk. It is dead, I tried it on other linux system and is unreadable.

A new one disk is attached now.

Also, I tried

I don't have a pervious data backup.

What can I do in order to recover the pool and data ?

Thanks in advance for your time and help.

Tei

My TrueNAS system is built on:

- Motherboard: Gigabyte GA-H110M-H
- CPU: Intel(R) Core(TM) i5-6400 CPU @ 2.70GHz
- Memory: 2 x 16 GB DDR4 3200
- Available memory: 31.8 GiB (as shown in the Dashboard)
- Storage: 3 x 2 TB HDDs in a raidz1 array
- Only one pool: "AWS_tank"
- Version: TrueNAS-12.0-U8

The pool "AWS_tank" is currently OFFLINE and its data is not available.

I replaced the damaged disk with a new one (same brand, model and capacity) and tried several workarounds from other posts, without success.

The output of the `zpool status` command is:

```
root@truenas[~]# zpool status
  pool: boot-pool
 state: ONLINE
  scan: resilvered 2.71M in 00:00:05 with 0 errors on Wed Nov 30 11:31:51 2022
config:

        NAME        STATE     READ WRITE CKSUM
        boot-pool   ONLINE       0     0     0
          mirror-0  ONLINE       0     0     0
            da1p2   ONLINE       0     0     0
            da0p2   ONLINE       0     0     0

errors: No known data errors
```

The output of the `zpool import` command is:

```
root@truenas[~]# zpool import
   pool: AWS_tank
     id: 16198973132710883895
  state: FAULTED
 status: One or more devices are missing from the system.
 action: The pool cannot be imported. Attach the missing
        devices and try again.
        The pool may be active on another system, but can be imported using
        the '-f' flag.
    see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-3C
 config:

        AWS_tank                                        FAULTED  corrupted data
          raidz1-0                                      DEGRADED
            gptid/ec7f6f5e-0e69-11ec-836c-503eaa1fd636  ONLINE
            gptid/ec9f349f-0e69-11ec-836c-503eaa1fd636  UNAVAIL  cannot open
            gptid/eca8fd77-0e69-11ec-836c-503eaa1fd636  ONLINE
```

The device `gptid/ec9f349f-0e69-11ec-836c-503eaa1fd636` (UNAVAIL, cannot open) is the damaged disk. It is dead: I tried it on another Linux system and it is unreadable.

A new disk is now attached.

I also tried the `zpool import -f AWS_tank` command, with the following response:

```
root@truenas[~]# zpool import -f AWS_tank
cannot import 'AWS_tank': I/O error
        Destroy and re-create the pool from
        a backup source.
```

I don't have a previous backup of the data.

What can I do to recover the pool and its data?

Thanks in advance for your time and help.

Tei