Motherboard: SuperMicro X9DRi-LN4F+

CPU: 2x Intel Xeon E5-2650 v2 @ 2.60GHz

RAM: ~196GB

Drives:

960GB Toshiba TR150 SSD

2x 256GB Samsung NVMe

12x 6TB HGST HUS726060AL5210 SAS HDD

SAS Controllers:

SAS2004 SAS9211-4i IT mode

SAS2008 SAS9211-8i IT mode

TrueNAS SCALE 23.10.1 hosted in Proxmox with passthrough of the SAS Controllers

Extra information: the 12 SAS drives were configured as a single RAIDZ3 pool, with one of the 256GB Samsung NVMe drives as a cache drive

This system started on TrueNAS CORE and went through several updates, ending with 13-U6. Not too long ago I migrated it to TrueNAS SCALE, starting with 22 and updating to 23.01.1. I recently updated to 23.10.1 as well, and the system kept working until the other night.

I was deploying some services to other VMs in Proxmox and ran into an issue. I had implemented HTTPS redirection, which broke an internal endpoint that can't be reached over HTTPS, so I took the services down. I expected that to remove the HTTPS redirection, but it did not. A VM on the same Proxmox node as the TrueNAS VM supplies DHCP, DNS and a reverse proxy, so I thought something might be wrong with that VM and restarted it. That didn't work, so I restarted the entire Proxmox node. That didn't work either, so I went a different route and created a file to use in the meantime in place of the endpoint that I could no longer reach.

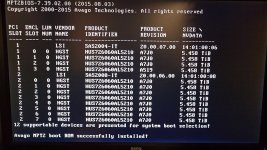

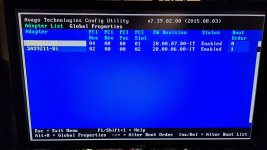

Now we get to the part where I noticed an issue with TrueNAS. I got things working with the file, but one of the tasks in the process I was working on was to mount shares from TrueNAS. When the shares were being mounted, I got errors along the lines of the network location not existing. I was very confused, because I haven't had issues with my TrueNAS shares in years. I started investigating and ended up in TrueNAS, which reported that my pool was offline. I restarted TrueNAS, but that didn't help, so I started digging deeper. I restarted the entire server and noticed a message saying that the SAS configuration had changed and that reconfiguration was recommended. I went into the SAS configuration utility and, sure enough, one of the controllers didn't have its boot order specified. I set that and booted back into TrueNAS, but it made no difference.

At this point I really started digging, beginning with making sure the passthrough was set up properly in Proxmox. It does seem to be set up properly. I read that these SAS controllers need to be in IT mode, then realized that they already are and that TrueNAS can see the controllers. I then looked into the disks and noticed that they show up in the BIOS and in the SAS configuration utility, but not in TrueNAS. I also looked into the pool further from the CLI. While I can see the pool in the Dashboard of the TrueNAS GUI, I cannot see it in the CLI: not in status, not in import, and it even appears that the files in /mnt do not exist.
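The checks described above can be repeated from a shell inside the TrueNAS SCALE VM. The following is only a rough sketch, not the exact commands used in this troubleshooting session. It assumes the standard Linux tools `lspci`, `lsblk` and `zpool` are on the PATH. The `count_disks` helper and the `HUS726060` model prefix it matches against are hypothetical illustrations.

```python
import shutil
import subprocess

# Commands that roughly mirror the manual checks described above.
CHECKS = {
    # Are the SAS HBAs visible in the VM, and which kernel driver is bound?
    "controllers": ["lspci", "-nnk"],
    # Which whole disks does the kernel see (no partitions)?
    "disks": ["lsblk", "-d", "-o", "NAME,SIZE,MODEL"],
    # Pools that ZFS could import but has not imported.
    "importable_pools": ["zpool", "import"],
    # Pools that are currently imported.
    "imported_pools": ["zpool", "status"],
}


def run_check(cmd):
    """Run one command and return its combined output, or None if the tool is missing."""
    if shutil.which(cmd[0]) is None:
        return None
    result = subprocess.run(cmd, capture_output=True, text=True, check=False)
    return result.stdout + result.stderr


def count_disks(lsblk_output, model_prefix):
    """Count lsblk rows (header skipped) whose MODEL column starts with model_prefix."""
    count = 0
    for line in lsblk_output.splitlines()[1:]:
        fields = line.split(None, 2)  # NAME, SIZE, then the rest of the line as MODEL
        if len(fields) == 3 and fields[2].strip().startswith(model_prefix):
            count += 1
    return count


if __name__ == "__main__":
    for name, cmd in CHECKS.items():
        output = run_check(cmd)
        print(f"== {name}: {' '.join(cmd)}")
        print(output if output is not None else "(tool not found)")
    disks = run_check(CHECKS["disks"])
    if disks is not None:
        # Assumption: the SAS drives report a model string starting with "HUS726060".
        print("SAS data disks seen:", count_disks(disks, "HUS726060"), "of 12 expected")
```

If the controllers are listed but the disk count is zero, the problem sits between the HBA and the guest kernel rather than in ZFS, which would also explain why neither `zpool status` nor `zpool import` shows the pool.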

When I first started troubleshooting, I thought that maybe the drives had failed. It seems crazy, though, that all 12 SAS drives in the pool would fail at the same time, and everything was working fine before I restarted the whole Proxmox node. I'm still going to check each drive individually, just in case, but since I can see them in the BIOS and in the SAS configuration utility, I don't think the drives have failed.

I've searched and searched and found several threads that seemed similar enough to my issue, but nothing has gotten me any closer to a solution. I've tried everything that I can think of, but I'm at a loss as to why I can see the drives in the BIOS and the SAS configuration utility while TrueNAS just refuses to see them, or why TrueNAS won't even show the pool in the CLI.

I have attached photos of anything that I thought could potentially be helpful.

Attachments

VideoCapture_20240118-185409.jpg (677.7 KB)

20240118_185811.jpg (413 KB)

20240118_185640.jpg (502 KB)

20240118_185612.jpg (304.2 KB)

20240118_185549.jpg (421.8 KB)

20240118_185502.jpg (483.5 KB)

20240118_185020.jpg (300.1 KB)

20240118_185004.jpg (266.1 KB)

20240118_184951.jpg (250.6 KB)

20240118_184826.jpg (397.8 KB)

20240118_184548.jpg (344.4 KB)

20240118_184526.jpg (475.9 KB)

20240118_184420.jpg (465.5 KB)

20240118_183712.jpg (459.3 KB)

20240118_183659.jpg (367 KB)

20240118_183650.jpg (444.8 KB)

20240118_183252.jpg (552.2 KB)

20240119_122318.jpg (451.2 KB)

20240119_122247.jpg (288.5 KB)