Hi everyone,

I've wanted for a while now to see how well FreeNAS performs in back-to-back tests on physical vs. virtual hosts on the same hardware, and I finally have all the parts together to do some initial testing. My vision is a single box that can do it all for my homelab and for testing: ESXi as the hypervisor, with FreeNAS virtualized and serving a nested datastore for the rest of my ESXi VMs.

I wanted to share my results in case anyone else is curious, since I haven't seen anything like this benchmarked before. This is not a production system and the results are far from scientific, but they should give you a little insight into the performance of this type of configuration.

Pertinent hardware specs:

- FreeNAS 9.10.1

- SuperStorage Server 6048R-E1CR36L chassis

- X10DRH-iT motherboard

- Dual Xeon E5-2620 v4 CPUs

- 128 GB RAM

- LSI 3008 HBA

- 14x Seagate IronWolf 10TB 7,200 RPM drives in a single pool that consists of 7 mirrored vdevs striped together (the RAID 10 equivalent).

- 2x 80 GB Intel SSD DC S3510, overprovisioned to 10 GB, for SLOG

- 1x 256 GB Samsung 850 Pro SSD for cache

- 2x onboard Intel X540 NICs (MTU 9000)

- Netgear XS708E 10GbE switch with VLANs set up for the storage network to isolate its traffic.
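As a rough sanity check on the 10 GB SLOG size in the list above, here is a minimal sketch of the usual rule-of-thumb arithmetic: the log device only needs to hold the sync writes that can arrive before ZFS flushes them to the pool. The 5-second transaction group interval and the "two transaction groups" headroom are assumptions on my part (common defaults and guidance, not values read from this system), and protocol overhead is ignored.

```python
# Rough SLOG sizing check. The constants are assumptions for illustration,
# not measurements from this system.

LINK_GBIT_PER_S = 10   # one 10GbE link on the storage network
TXG_TIMEOUT_S = 5      # assumed ZFS transaction group interval (common default)
TXGS_IN_FLIGHT = 2     # rule-of-thumb headroom: about two transaction groups
SLOG_SIZE_GB = 10      # overprovisioned size from the hardware list


def max_ingest_gb_per_s(link_gbit_per_s):
    """Upper bound on the incoming data rate in GB/s, ignoring protocol overhead."""
    return link_gbit_per_s / 8


def slog_needed_gb(link_gbit_per_s, txg_timeout_s, txgs):
    """Space needed to hold the sync writes that can arrive before they are flushed."""
    return max_ingest_gb_per_s(link_gbit_per_s) * txg_timeout_s * txgs


if __name__ == "__main__":
    needed = slog_needed_gb(LINK_GBIT_PER_S, TXG_TIMEOUT_S, TXGS_IN_FLIGHT)
    print("Worst-case SLOG space needed: {:.1f} GB".format(needed))
    print("Configured SLOG size:         {} GB".format(SLOG_SIZE_GB))
```

Under these assumptions the worst case at full 10GbE line rate is a little more than 10 GB, but at the sync write rates actually seen in these tests the 10 GB partition has plenty of room, so capacity is unlikely to be the limiting factor.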

FreeNAS was installed on a USB device in the SuperStorage Server 6048R-E1CR36L chassis with the X10DRH-iT motherboard, and the onboard Intel X540 10GbE NICs were plugged into the Netgear XS708E 10GbE switch. On the ESXi host side, I used a Supermicro X10SDV-TLN4F based 1U server, which has an Intel Xeon D-1540 SoC with integrated Intel X552 10GbE NICs, also plugged into the Netgear XS708E. The screenshots below are from a Windows Server 2012 R2 VM on iSCSI and NFS datastores.

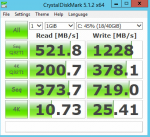

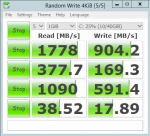

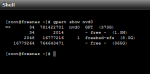

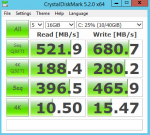

Physical FreeNAS, iSCSI VM, sync=disabled

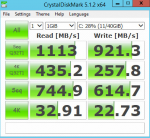

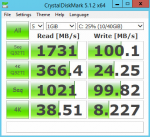

Physical FreeNAS, NFS VM, sync=disabled

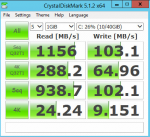

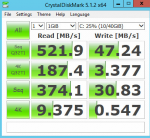

I forgot to screenshot the results for the physical host with sync=always, but the writes were the same as you'll see below with the FreeNAS VM: around 100 MB/s.

VM host setup:

The general process for the VM setup was as follows:

A FreeNAS VM was created on a SATA DOM datastore that is physically in the host. The LSI 3008 HBA was then set to passthrough mode and assigned to the FreeNAS VM so the VM could have full access to the disks. VMXNET 3 NICs were used for the VM, and the MTU was set to 9000 both in ESXi networking and in FreeNAS. Either an iSCSI zvol target or an NFS share was set up in the FreeNAS VM and presented back to the ESXi host, where I created a nested datastore. I then created a Windows Server 2012 R2 VM on that nested datastore. These are the disk performance results from the various instances of the Windows Server 2012 R2 VMs that were on the aforementioned datastores. The Intel E1000E NIC was used for the Windows Server 2012 R2 VMs, and the MTU was set to 9014 bytes.
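The two MTU figures above (9000 in ESXi and FreeNAS, 9014 in Windows) are, as far as I understand it, the same setting expressed two ways: the Intel driver's jumbo packet value counts the 14-byte Ethernet header. The sketch below shows that arithmetic, plus the largest ping payload that should pass unfragmented if jumbo frames work end to end. The target address is a placeholder, and the header sizes assume untagged Ethernet and IPv4 without options.

```python
# Header sizes for untagged Ethernet and IPv4 without options.
ETHERNET_HEADER_BYTES = 14  # destination MAC + source MAC + EtherType
IPV4_HEADER_BYTES = 20
ICMP_HEADER_BYTES = 8


def driver_jumbo_value(mtu):
    """Frame size as shown by drivers that include the Ethernet header."""
    return mtu + ETHERNET_HEADER_BYTES


def max_ping_payload(mtu):
    """Largest ICMP payload that fits in one packet of the given MTU."""
    return mtu - IPV4_HEADER_BYTES - ICMP_HEADER_BYTES


if __name__ == "__main__":
    mtu = 9000
    target = "192.0.2.10"  # placeholder address, not from this setup
    payload = max_ping_payload(mtu)
    print("Windows jumbo packet value:", driver_jumbo_value(mtu))
    # Both commands set "don't fragment" so an MTU mismatch shows up as a failure.
    print("ESXi check:    vmkping -d -s {} {}".format(payload, target))
    print("Windows check: ping -f -l {} {}".format(payload, target))
```

If either ping fails at that payload size but works with a small one, something along the path (vSwitch, VMkernel port, physical switch, or FreeNAS interface) is probably still at MTU 1500.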

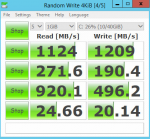

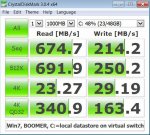

VM FreeNAS, NFS VM, sync=disabled

Questions:

- Based on the info I provided, is there any glaring reason why my write performance takes such a hit with sync=always?

- Which do you choose for your ESXi datastore, iSCSI or NFS, and why? Right now I'm leaning towards NFS based on these results and the hassle I see in general with iSCSI tuning on FreeNAS.

- What other disadvantages do you see with virtualizing FreeNAS in this configuration, considering you can pass through an HBA and this is a non-production environment?

I'm still not totally sold one way or the other on virtualizing FreeNAS, but the power savings from running fewer servers are tempting. It's also tempting to use sync=disabled for the ESXi datastores, but I know that's not smart, so I need to get that figured out. Additionally, virtualizing FreeNAS is pretty annoying when you reboot or have a power outage: before you can power on the VMs in the nested datastore hosted by the FreeNAS VM, you have to either SSH into the ESXi host to rescan the storage adapters (which I realize you can automate, but that doesn't work if you're using FreeNAS volume encryption) or do the rescan manually from the GUI. It's also of course a huge negative that this type of setup takes down all of your nested VMs when something goes wrong and the system goes down for whatever reason.
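For the rescan automation mentioned above, one possible approach is to wait until the FreeNAS VM's storage service answers on the network and only then trigger the rescan. This is a minimal sketch, not something I have running: it assumes it is executed somewhere the `esxcli` command is available, the function names are made up for illustration, and it does not solve the encrypted-volume case, since a locked volume still has to be unlocked by hand first.

```python
import socket
import subprocess
import time

# Rescans all storage adapters on the ESXi host.
RESCAN_CMD = ["esxcli", "storage", "core", "adapter", "rescan", "--all"]


def wait_for_port(host, port, timeout_s=600.0, interval_s=5.0):
    """Return True once a TCP connection to host:port succeeds, False on timeout."""
    deadline = time.monotonic() + timeout_s
    while time.monotonic() < deadline:
        try:
            with socket.create_connection((host, port), timeout=interval_s):
                return True
        except OSError:
            # Service not up yet (refused, unreachable, or timed out); retry.
            time.sleep(interval_s)
    return False


def rescan_when_ready(host, port, run=subprocess.run, **wait_kwargs):
    """Wait for the storage service, then issue the rescan. Returns True if issued."""
    if not wait_for_port(host, port, **wait_kwargs):
        return False
    run(RESCAN_CMD, check=True)
    return True


# Example call (placeholder address; 3260 is the standard iSCSI port,
# 2049 would be the one to use for NFS):
#   rescan_when_ready("192.0.2.10", 3260)
```

Powering on the nested VMs would be a separate step after the rescan succeeds. The `run` parameter exists only so the command can be swapped out for testing.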

Furthermore, my results show that sync=always is a huge performance killer, even with Intel DC S3510 SSDs as a SLOG. I am not sure if I'm doing something wrong, but the performance loss is just too great. I don't really care if sync=disabled winds up destroying my VMs in the ESXi datastore, as Veeam is easy enough to use, but I would be upset if I lost the data on the rest of my FreeNAS volume, so that's concerning. I just can't imagine that the Intel DC S3510 is too slow to use as a proper SLOG and will only put out 100 MB/s. I wish I had an NVMe drive to test SLOG performance with...
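One way to reason about where a sync write ceiling might come from is a simple model: each sync write has to be committed to the log device before it is acknowledged, so throughput is bounded both by per-write latency and by the log device's sustained write rate. The sketch below is only that model. Every input in the example is a placeholder rather than a measurement, and the 110 MB/s device figure in particular is my assumption for the 80 GB S3510 and should be checked against Intel's datasheet.

```python
def sync_write_ceiling_mb_s(write_kib, latency_ms, queue_depth, device_mb_s):
    """Estimate a sync write throughput ceiling in MB/s.

    Latency-bound rate = outstanding writes * write size / per-write latency,
    capped by the log device's sustained write rate.
    """
    bytes_per_s = queue_depth * write_kib * 1024 / (latency_ms / 1000.0)
    latency_bound_mb_s = bytes_per_s / 1e6
    return min(latency_bound_mb_s, device_mb_s)


if __name__ == "__main__":
    # Placeholder scenarios: (write size KiB, latency ms, queue depth).
    scenarios = [(4, 0.1, 1), (64, 0.5, 1), (64, 0.5, 8)]
    assumed_device_mb_s = 110  # assumption, verify against the datasheet
    for write_kib, latency_ms, qd in scenarios:
        ceiling = sync_write_ceiling_mb_s(write_kib, latency_ms, qd, assumed_device_mb_s)
        print("{:>3} KiB, {} ms, QD{}: about {:.0f} MB/s".format(
            write_kib, latency_ms, qd, ceiling))
```

If the device cap is what binds for large writes, a faster log device would be the thing to test; if small writes at low queue depth are the problem, latency matters more than the rated sequential speed.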

At any rate, I will keep testing and see if I can get the performance to an acceptable level whilst still using sync=always. My results make me feel like virtualizing FreeNAS will be a viable option for my non-production environment, and I don't really see any other downsides as long as you can pass through a proper HBA to the VM, but again I'm not sure which way I'll go just yet as I want to do more testing. I just wanted to share a few of my initial tests and start a post to get some discussion going. I'll report back once I come to a conclusion on how I'm going to set things up or if I have any other interesting data to share. I would welcome any questions, opinions, or feedback you have on this configuration, and I'd like to hear about your similar setups. I'd also really like to figure out why things are so slow with sync=always. Thanks for any feedback.
