- Joined

- Nov 22, 2017

- Messages

- 310

@morganL suggested that I make a separate thread for this:

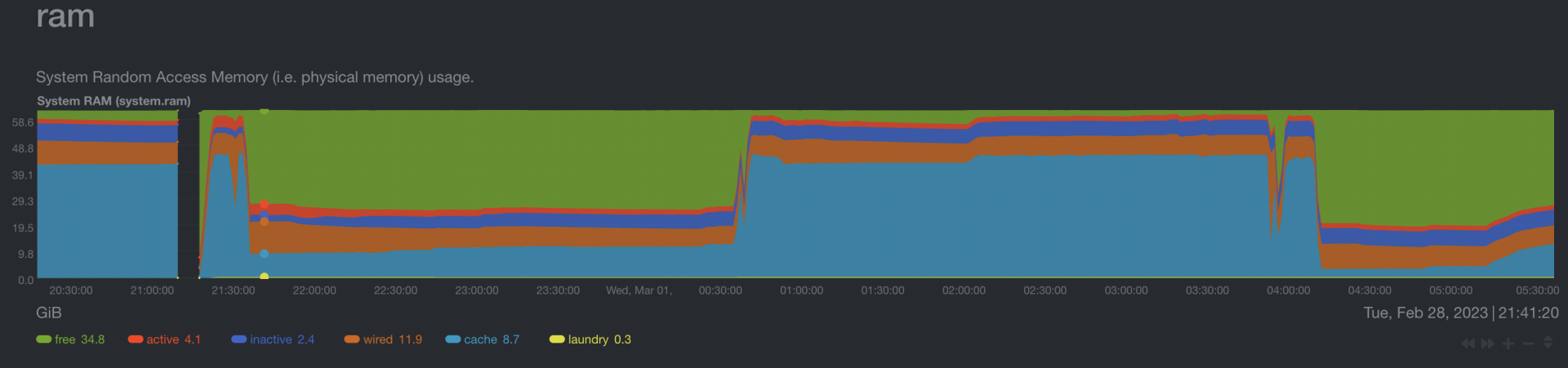

Here is an illustration of what happens with the ZFS ARC in U4 for me (the gap is the reboot for the upgrade). Is anyone else seeing anything like this?

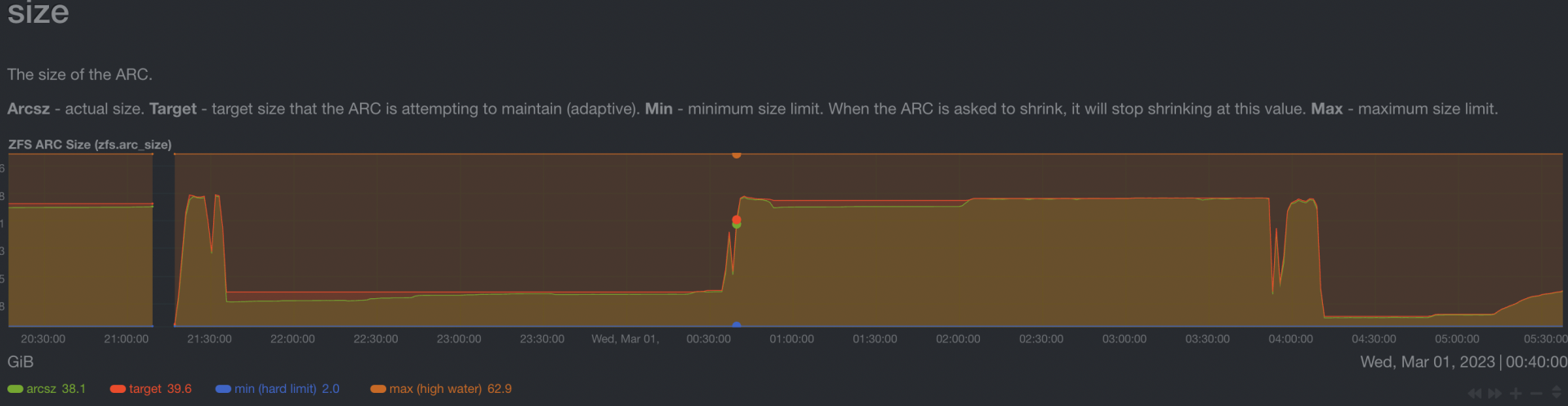

You can also see that, although there is no memory pressure, the ARC target drops during periods of light activity:

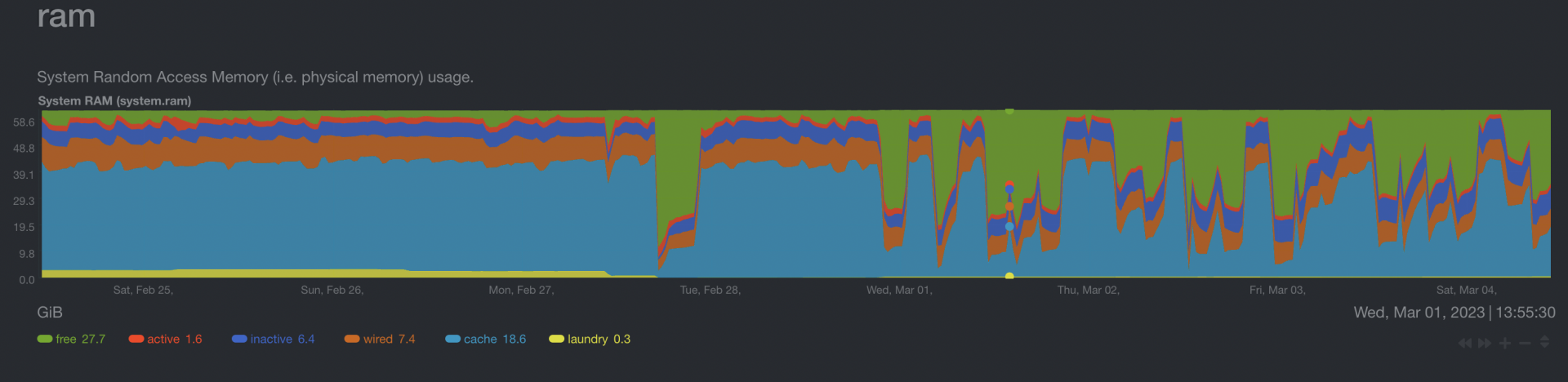

And here is a graph of the last 8 days showing it bouncing around:
