Please be gentle: I'm new to the TrueNAS concept and am just dipping my toe into the world of ZFS. Long story short, I was originally going to go with unRAID, but the lack of TRIM and some other limitations make TrueNAS a better fit.

This is going to be one of those builds that is overkill. I get it. I'm one of those with a bit more cents than sense.

I have no set IOPS objectives, other than the ability to max out a 25Gb SFP28 interface when reading. I'm currently leaning towards a 6-drive (or possibly 8-drive) RAID-Z1 configuration to start with. The current hardware layout (subject to change, but unlikely):

Dell PowerEdge R7525

2x AMD EPYC 7313 (16C, 3.0GHz)

16x Micron 64GB DDR4-3200 RDIMM

Mellanox ConnectX-5 SFP28 OCP 3.0

2x 1400W PSU

Dell R7525 BOSS-S2 2x240GB RAID 1 (TrueNAS boot drive)

6x (or 8x) Intel D5-P5316 U.2 30.72TB NVMe Gen4
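For a rough sense of what the 25Gb read target asks of each drive, here is a back-of-the-envelope sketch. It assumes the link runs at its nominal 25 Gbit/s with no protocol overhead, and that a sequential read is spread evenly over the non-parity share of a RAID-Z1 vdev; both are simplifications, and the function names are just illustrative.

```python
def line_rate_gbytes_per_s(link_gbits_per_s: float) -> float:
    """Convert a nominal link speed in Gbit/s to GByte/s (8 bits per byte)."""
    return link_gbits_per_s / 8.0


def per_drive_share(link_gbits_per_s: float, n_drives: int, parity: int = 1) -> float:
    """Approximate GByte/s each drive must deliver to fill the link.

    Assumption: reads are spread evenly over (n_drives - parity) drives'
    worth of data, which is only a rough model of RAID-Z behaviour.
    """
    data_drives = n_drives - parity
    if data_drives <= 0:
        raise ValueError("need more drives than parity devices")
    return line_rate_gbytes_per_s(link_gbits_per_s) / data_drives


if __name__ == "__main__":
    for n in (6, 8):
        share = per_drive_share(25, n)
        print(f"{n} drives, RAID-Z1: about {share:.3f} GB/s per drive")
```

Under those assumptions the link itself tops out at 3.125 GB/s, so the per-drive requirement is well under 1 GB/s in either layout.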

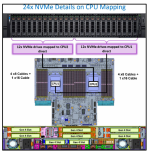

According to this documentation: https://infohub.delltechnologies.co...eredge-r7525-populated-with-24-nvme-drives-4/

The R7525 is the only 15th Generation Dell that doesn't oversubscribe the NVMe drives in a 24x drive configuration with PCIe switches. As such, the first 12 drives map to CPU1, and the last 12 map to CPU2.

The root of my question is this: the current drive configuration plan (either 6x or 8x) will fit entirely within one CPU or the other. Is it better to keep these drives all on one CPU, or to split them between the two CPUs?

Let's say I did 6 drives. Should I do 3 drives on CPU1 and 3 drives on CPU2, or all 6 drives on 1 CPU?

All other things being equal, the goal of splitting the drives or not would be to extract the maximum amount of performance from the hardware. I'm sure other configuration comes into play (L2ARC, ZIL, etc.) but I want to make sure I'm putting the drives in the best "hardware" configuration I can from the very beginning.
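To confirm which CPU each drive actually lands on once the box is built, something like the following sketch could be run on a Linux-based install (for example TrueNAS SCALE). It assumes the kernel exposes NVMe controllers under `/sys/class/nvme` with a `device/numa_node` attribute; the function name `nvme_numa_map` is just illustrative, and the node numbers may not line up one-to-one with Dell's "CPU1"/"CPU2" labels.

```python
from pathlib import Path


def nvme_numa_map(sys_root: str = "/sys/class/nvme") -> dict:
    """Return {controller_name: numa_node} for each NVMe controller found.

    Assumption: each controller directory has a device/numa_node file.
    A value of -1 means the kernel has no NUMA information for that device;
    None means the attribute could not be read at all.
    """
    result = {}
    root = Path(sys_root)
    if not root.is_dir():
        return result
    for ctrl in sorted(root.iterdir()):
        node_file = ctrl / "device" / "numa_node"
        try:
            result[ctrl.name] = int(node_file.read_text().strip())
        except (OSError, ValueError):
            result[ctrl.name] = None
    return result


if __name__ == "__main__":
    for name, node in nvme_numa_map().items():
        print(f"{name}: NUMA node {node}")
```

Comparing that output against the bay numbers would show whether a 3+3 split really spans both sockets or whether all six drives ended up behind one.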
