ChrisReeve

Good morning

I have the following server:

MB: Supermicro X9SRL-F

CPU: E5-2650 v2 (8C/16T)

RAM: 64GB 1600MHz DDR3

HBAs: 2x LSI 9211-8i flashed to IT-mode

Drives: 10x10TB WD Red (white label, shucked from WD external drives) in one RAIDZ2 pool

NIC: Intel X540-T2 2x10Gb RJ45

Other relevant HW:

Networking:

Switch: Netgear SX10

Desktop specs:

CPU: 9600K

RAM: 16GB 3200MHz

SSD: Intel 660p 1TB

NIC: Asus XC-C100C 1x10Gb RJ45

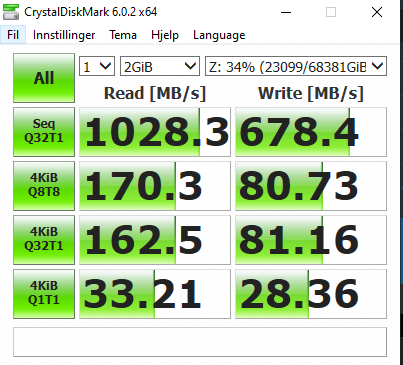

Performance is decent. Crystal Disk Mark reports the following:

This is obviously boosted by ARC, as 678 MB/s sequential write is more than the theoretical maximum write speed for 10 drives in RAIDZ2. Read speeds are as expected, more or less saturating my 10Gb connection, minus overhead.
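For reference, here is a rough sketch of what "minus overhead" works out to on a 10Gb link. It assumes a standard 1500-byte MTU, IPv4, and TCP without options, and it ignores SMB protocol overhead entirely, so treat the result as an upper bound rather than a target. The function name and constants are only for illustration.

```python
# Rough upper bound on TCP payload throughput over 10GbE.
# Assumptions (not measured on this setup): IPv4, no TCP options, no SMB overhead.
LINK_BITS_PER_S = 10e9   # 10Gb/s line rate
IP_TCP_HEADERS = 40      # 20 B IPv4 header + 20 B TCP header, carried inside the MTU
WIRE_OVERHEAD = 38       # preamble/SFD 8 + Ethernet header 14 + FCS 4 + inter-frame gap 12


def payload_ceiling_mb_s(mtu=1500):
    """Return the best-case payload rate in MB/s (1 MB = 10**6 bytes)."""
    payload = mtu - IP_TCP_HEADERS   # bytes of file data per frame
    on_wire = mtu + WIRE_OVERHEAD    # bytes of link time consumed per frame
    return LINK_BITS_PER_S / 8 / 1e6 * payload / on_wire


ceiling = payload_ceiling_mb_s()
print(f"ceiling at MTU 1500: {ceiling:.0f} MB/s")

# Compare the copy speeds mentioned in this post against that ceiling.
for observed in (400, 600, 800, 900):
    print(f"{observed} MB/s is {observed / ceiling:.0%} of the ceiling")
```

With these assumptions the ceiling comes out at about 1187 MB/s, so the 400-600 MB/s I see on cold files is roughly a third to a half of what the link could carry.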

However, copying large files from the server to my Intel 660p 1TB drive only sustains 400-600 MB/s, where I would expect closer to 1 GB/s. The 660p is about 40% full and should be able to hold more than 1 GB/s for at least the first several GB. Read speeds also suffer when I choose "cold" files, by which I mean files that have not been accessed in several months and so are obviously not cached. If I transfer a file to my server and then immediately copy it back to my SSD, speeds are closer to 800-900 MB/s.

Are there any obvious reasons why I don't see sustained sequential read speeds closer to 1 GB/s? Is there any optimization I might be able to do?
