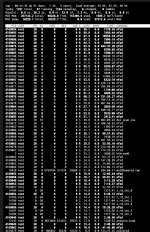

BSD ps does not show threads by default.

If you want to see all the threads associated with BSD processes, you need to add -H to the ps command line, for example:

Code:

root@nas1[~]# ps -waugx | grep nfs

root 3343 1.6 0.0 12760 2784 - S 12Oct23 422:14.56 nfsd: server (nfsd)

root 3342 0.0 0.0 100824 2076 - Is 12Oct23 0:00.15 nfsd: master (nfsd)

root 76724 0.0 0.0 4652 2288 1 R+ 23:20 0:00.00 grep nfs

vs

Code:

root@nas1[~]# ps -Hwaugx | grep nfs

root 3343 1.1 0.0 12760 2784 - S 12Oct23 0:30.07 nfsd: server (nfsd)

root 3342 0.0 0.0 100824 2076 - Is 12Oct23 0:00.15 nfsd: master (nfsd)

root 3343 0.0 0.0 12760 2784 - S 12Oct23 6:38.63 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - I 12Oct23 0:04.72 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - I 12Oct23 0:04.54 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - I 12Oct23 1:49.93 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - I 12Oct23 0:05.10 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - I 12Oct23 0:04.65 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - I 12Oct23 0:05.09 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - I 12Oct23 0:04.63 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - I 12Oct23 0:12.65 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - I 12Oct23 0:04.86 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - I 12Oct23 1:31.74 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - I 12Oct23 0:04.90 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - S 12Oct23 18:17.53 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - S 12Oct23 12:54.66 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - S 12Oct23 10:35.28 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - S 12Oct23 13:06.45 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - S 12Oct23 12:14.18 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - S 12Oct23 10:36.20 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - S 12Oct23 13:24.18 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - S 12Oct23 10:39.58 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - S 12Oct23 10:40.61 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - S 12Oct23 13:13.40 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - S 12Oct23 13:45.21 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - S 12Oct23 10:36.60 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - I 12Oct23 16:54.84 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - I 12Oct23 17:56.05 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - I 12Oct23 19:24.95 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - I 12Oct23 18:11.45 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - I 12Oct23 16:53.18 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - I 12Oct23 17:56.60 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - I 12Oct23 16:57.40 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - I 12Oct23 18:00.49 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - I 12Oct23 19:08.29 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - I 12Oct23 18:42.69 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - I 12Oct23 21:23.96 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - I 12Oct23 17:12.69 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - I 12Oct23 0:15.89 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - I 12Oct23 3:46.71 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - I 12Oct23 8:42.35 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - I 12Oct23 0:25.93 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - I 12Oct23 5:04.62 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - I 12Oct23 1:42.81 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - I 12Oct23 0:31.84 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - S 12Oct23 1:54.21 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - I 12Oct23 4:15.71 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - I 12Oct23 7:49.70 nfsd: server (nfsd)

root 3343 0.0 0.0 12760 2784 - I 12Oct23 7:37.03 nfsd: server (nfsd)
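If you just want a thread count per process rather than the full listing, the -H output can be piped through a small filter. This is only a sketch: count_threads_by_pid is a made-up helper name, and it assumes the PID is the second column, as in the listings above.

```shell
# Hypothetical helper: count rows per PID in `ps -H` style output read from stdin.
# Assumes the PID is the second whitespace-separated column (as in `ps -Hwaugx`).
# The numeric check on column 2 skips the "USER PID ..." header line if present.
count_threads_by_pid() {
    awk '$2 ~ /^[0-9]+$/ { n[$2]++ } END { for (pid in n) print pid, n[pid] }' | sort -n
}

# Usage on the NAS (the [n] keeps grep from matching its own process):
#   ps -Hwaugx | grep '[n]fsd' | count_threads_by_pid
```

Each output line is a PID followed by the number of rows ps printed for it, which with -H means one row per thread.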

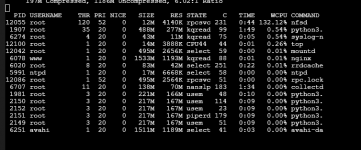

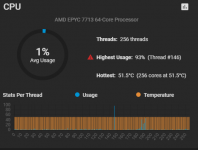

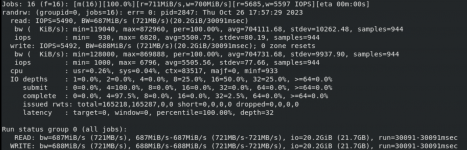

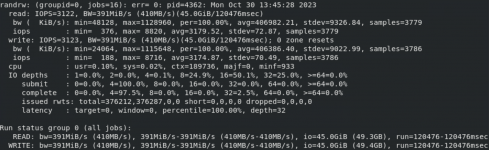

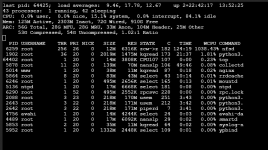

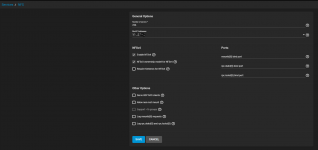

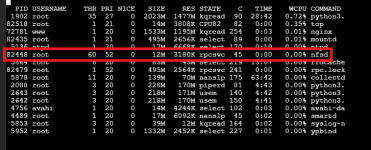

NFS tuning is a bit of an art. Some of BSD's defaults are somewhat constrained, but despite this, the TrueNAS defaults (aside from total threads/servers) should be pretty sane for most applications. If you plan to really push NFS on BSD, you will want to look not only at the number of threads/worker processes, but also at the buffer sizes on the NFS mount, how the underlying filesystem handles access times and sync writes, and a number of other system and ZFS tunables.

Those tunables may have different defaults on Linux, so comparing raw performance between OSes running with their default tuning is not always apples to apples.

I had to tune some buffer sizes and ZFS timers to get decent performance for my applications. We can now fill a 25Gb/s link, but there is an upper limit to what one thread can do on a single CPU core.
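As a starting point for looking at a few of the settings mentioned above, something like the following can be run on the server. Treat it as a rough sketch and not a tuning recipe: show_nfs_tunables is a made-up helper, the dataset name is whatever you pass in, and the sysctl names are the FreeBSD ones for nfsd thread limits, so they will not exist on Linux.

```shell
# Hypothetical helper: print a few of the settings discussed above.
# Each tool is probed first, so the function is harmless on hosts without it.
show_nfs_tunables() {
    dataset="$1"   # ZFS dataset backing the export, e.g. tank/share (placeholder)

    # nfsd thread limits (FreeBSD sysctl names)
    if command -v sysctl >/dev/null 2>&1; then
        sysctl vfs.nfsd.minthreads vfs.nfsd.maxthreads 2>/dev/null ||
            echo "vfs.nfsd sysctls not found (nfsd not loaded, or not FreeBSD)"
    fi

    # Access-time updates and sync-write policy on the backing dataset
    if command -v zfs >/dev/null 2>&1 && [ -n "$dataset" ]; then
        zfs get atime,sync "$dataset" 2>/dev/null ||
            echo "could not read ZFS properties for $dataset"
    fi

    return 0
}

# Usage:
#   show_nfs_tunables tank/share
```

This only reads values and changes nothing. The buffer sizes are a client-side matter (the rsize and wsize mount options), so check those on the machine that mounts the share.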

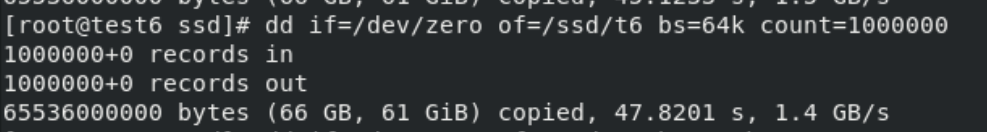

My advice is to pick the platform stack you're going to use, study your actual workload (workloads rarely resemble dd), learn the tunables, and then start tinkering with the relevant NFS and ZFS settings until you get the performance you need.

And remember, don't drag race the bus. :)

https://sc-wifi.com/2014/07/08/drag-racing-the-bus/