I have a TrueNAS Mini XL that is about 2 years old. Last night I upgraded from the latest FreeNAS 11 (sorry, I don't remember the exact version) to TrueNAS 12.0-U1. Before the upgrade I had several NFS shares, all served over NFS version 4 and all working; I was even using one of them for Kubernetes persistent volumes. After the upgrade, none of my shares will mount, either through Kubernetes or through autofs on my Ubuntu 20.04 clients (these are my only clients). So I started testing manually, but the mount just hangs with the following output:

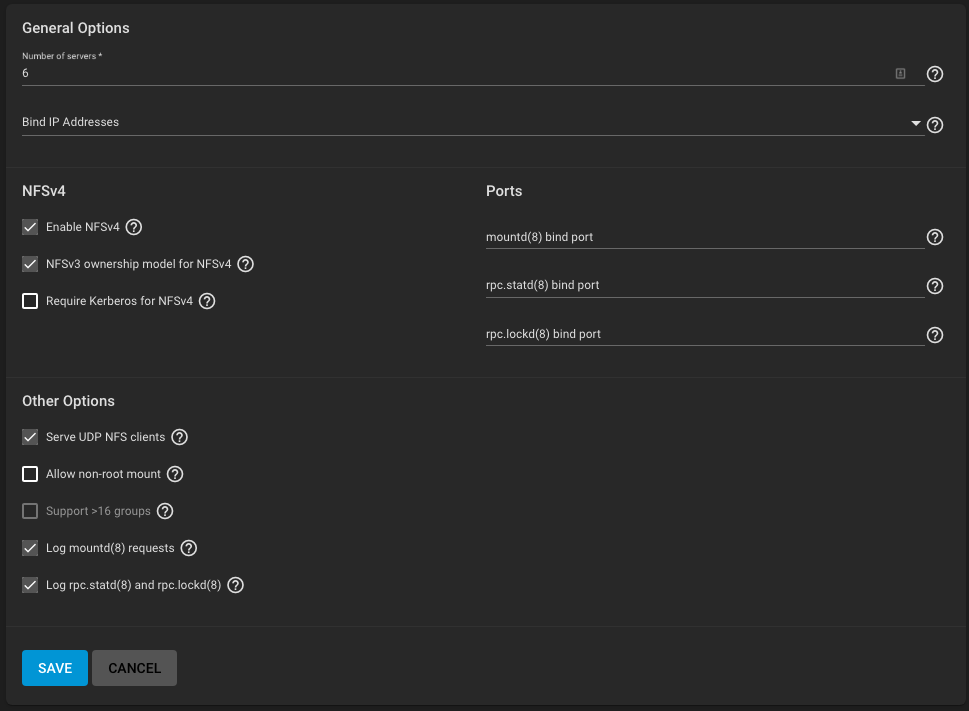


    # mount -v 10.12.22.60:/mnt/data/k8s/ /mnt
    mount.nfs: timeout set for Sun Jan 3 13:22:21 2021
    mount.nfs: trying text-based options 'vers=4.2,addr=10.12.22.60,clientaddr=10.12.22.39'
    mount.nfs: mount(2): Protocol not supported
    mount.nfs: trying text-based options 'vers=4.1,addr=10.12.22.60,clientaddr=10.12.22.39'
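The output above shows the client falling back from 4.2 to 4.1 and then stalling. A rough sketch of how each version could be tried on its own, with a time limit so one hung attempt does not block the rest. The function name `probe_nfs_versions` and the `MOUNT_CMD` override are made up for illustration, the 15-second limit is arbitrary, and the real run needs root:

```shell
#!/bin/sh
# Try each NFS version separately against one export.
# MOUNT_CMD is a hypothetical override for dry runs (e.g. MOUNT_CMD=true);
# when it is unset, the real mount(8) is used and must be run as root.
probe_nfs_versions() {
    server=$1
    export_path=$2
    mnt=$3
    for v in 4.2 4.1 4.0 3; do
        # timeout(1) from coreutils gives up on an attempt after 15 seconds.
        if timeout 15 ${MOUNT_CMD:-mount} -t nfs -o "vers=$v" \
                "$server:$export_path" "$mnt" >/dev/null 2>&1; then
            echo "vers=$v: ok"
            # Only unmount when a real mount was performed.
            [ -z "${MOUNT_CMD:-}" ] && umount "$mnt"
        else
            # Exit status 124 means timeout(1) had to stop the attempt.
            echo "vers=$v: failed or timed out (exit $?)"
        fi
    done
}
```

With the values from this post it would be called as `probe_nfs_versions 10.12.22.60 /mnt/data/k8s /mnt`.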

Interestingly, if I specify the option vers=4, the mount succeeds immediately:

    # mount -v -o vers=4 10.12.22.60:/mnt/data/k8s/ /mnt
    mount.nfs: timeout set for Sun Jan 3 13:24:15 2021
    mount.nfs: trying text-based options 'vers=4,addr=10.12.22.60,clientaddr=10.12.22.39'
    root@node2:~# df -h /mnt
    Filesystem                 Size  Used Avail Use% Mounted on
    10.12.22.60:/mnt/data/k8s   13T  8.0G   13T   1% /mnt
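To see which protocol version the working mount actually ended up with, the mount options recorded by the Linux client can be inspected. A small sketch, assuming the `/proc/mounts` format; the helper name `nfs_mount_version` is hypothetical:

```shell
#!/bin/sh
# Print the vers= option of the NFS mount at the given mount point.
# Reads /proc/mounts-formatted text on stdin:
#   device mountpoint fstype options dump pass
nfs_mount_version() {
    awk -v mp="$1" '
        $2 == mp && $3 ~ /^nfs/ {
            n = split($4, opts, ",")
            for (i = 1; i <= n; i++)
                if (opts[i] ~ /^vers=/) print opts[i]
        }'
}
```

Usage on the client would be `nfs_mount_version /mnt < /proc/mounts`.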

I have tried using both the FQDN and the IP to eliminate any potential DNS issue, but this has worked for years, so I'm hesitant to go chasing problems that don't exist. I did notice that the output of 'rpcinfo -p' does not show NFS version 4:

    # rpcinfo -p 10.12.22.60
       program vers proto   port  service
        100000    4   tcp    111  portmapper
        100000    3   tcp    111  portmapper
        100000    2   tcp    111  portmapper
        100000    4   udp    111  portmapper
        100000    3   udp    111  portmapper
        100000    2   udp    111  portmapper
        100000    4     7    111  portmapper
        100000    3     7    111  portmapper
        100000    2     7    111  portmapper
        100005    1   udp    717  mountd
        100005    3   udp    717  mountd
        100005    1   tcp    717  mountd
        100005    3   tcp    717  mountd
        100003    2   udp   2049  nfs
        100003    3   udp   2049  nfs
        100003    2   tcp   2049  nfs
        100003    3   tcp   2049  nfs
        100024    1   udp    773  status
        100024    1   tcp    773  status
        100021    0   udp    744  nlockmgr
        100021    0   tcp    874  nlockmgr
        100021    1   udp    744  nlockmgr
        100021    1   tcp    874  nlockmgr
        100021    3   udp    744  nlockmgr
        100021    3   tcp    874  nlockmgr
        100021    4   udp    744  nlockmgr
        100021    4   tcp    874  nlockmgr
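For comparing this listing before and after a config change, a short sketch that pulls out only the NFS program (100003) rows and prints the registered versions. The function name `registered_nfs_versions` is hypothetical. Since the vers=4 mount above succeeds even though only versions 2 and 3 are listed, a missing version 4 row may not be the problem by itself:

```shell
#!/bin/sh
# Read `rpcinfo -p` output on stdin and print the distinct NFS
# (program 100003) versions registered over TCP, one per line.
registered_nfs_versions() {
    awk '$1 == 100003 && $3 == "tcp" { print $2 }' | sort -un
}
```

Usage: `rpcinfo -p 10.12.22.60 | registered_nfs_versions`.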

Checking my exports on the NAS:

    # cat /etc/exports
    V4: / -sec=sys
    /mnt/data/k8s -maproot="root":"wheel"

The service configuration on the NAS:

I've been trying different settings here. The original number of servers was 4, and I don't normally allow UDP or do any logging, but I was hoping that enabling them during testing would shed some light. Unfortunately, the logs aren't very helpful. Here's a snippet:

    Jan 3 12:47:26 freenas nfsd: can't register svc name
    Jan 3 13:02:51 freenas 1 2021-01-03T13:02:51.037773-07:00 freenas.example.com mountd 22808 - - can't open /etc/zfs/exports
    Jan 3 13:02:53 freenas 1 2021-01-03T13:02:53.184244-07:00 freenas.example.com mountd 23313 - - can't open /etc/zfs/exports
    Jan 3 13:02:53 freenas nfsd: can't register svc name
    Jan 3 13:02:53 freenas kernel: NLM: local NSM state is 21
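Because the same messages repeat on every service restart, it can help to collapse them into counts. A minimal sketch, assuming log lines shaped like the snippet above; the function name `summarize_nfs_log` is made up for illustration:

```shell
#!/bin/sh
# Read syslog lines on stdin, keep only nfsd/mountd/NLM messages,
# drop timestamps and PIDs, and print each distinct message with a count.
summarize_nfs_log() {
    awk 'match($0, /(nfsd|mountd|NLM)/) {
             msg = substr($0, RSTART)                 # text from the daemon name onward
             sub(/mountd [0-9]+ - - /, "mountd: ", msg)  # strip the PID field
             count[msg]++
         }
         END { for (m in count) print count[m], m }' | sort -rn
}
```

Usage on the NAS would be something like `summarize_nfs_log < /var/log/messages`.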

I spent some time looking into these and mainly found old posts saying they are cosmetic issues. I am concerned about the nfsd "can't register svc name" message, which seems like a thread to pull. For the "can't open /etc/zfs/exports" error, various posts I saw suggest touching the file or copying /etc/exports. I can confirm the file did not exist, and I have tried both of these solutions. I do use LACP and jumbo frames, but this configuration has been working for some time, so I don't believe the issue is related to them. I'm more of a Linux admin and have reached the end of my BSD knowledge, and I hate troubleshooting NFS (regardless of OS) because it always seems like there are no helpful logs. If someone could point me in the right direction, or any direction for that matter, any help would be greatly appreciated.