I built a new server this month to replace my Ubuntu hardware server with this friendlier option.

AMD 3600

MSI X470

24 GB RAM

10x 6TB Toshiba MG06ACA600EY

LSI 9211-8i, flashed to IT mode with current firmware

Right now I have all 10 drives in a stripe to test performance. RAID-Z2 is my end goal, but it suffers from the same poor read performance.

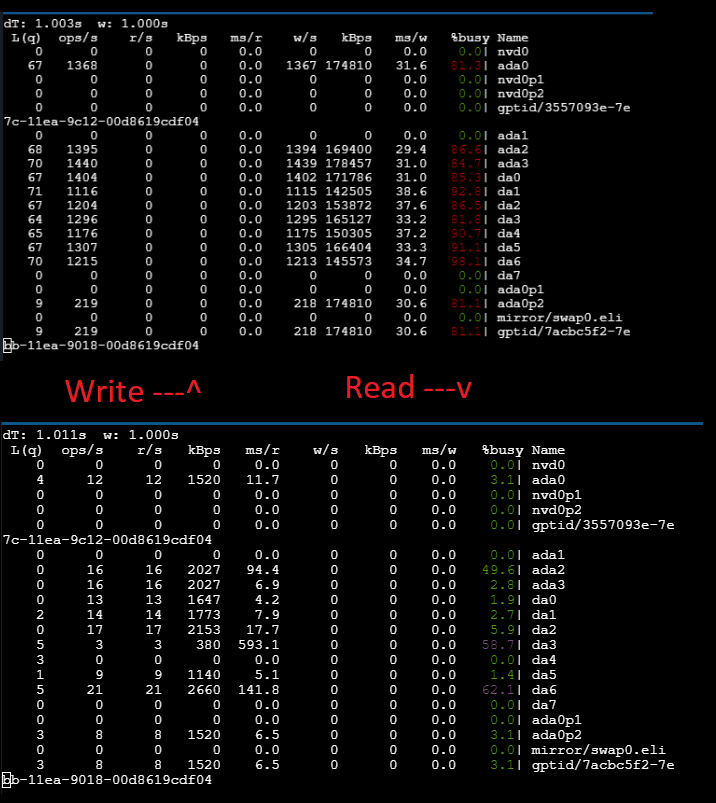

The gstat output above was captured during a dd write of a 64 GB file and a dd read of the same file. Performance is the same when reading from and writing to the pool through an SMB share.
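
For reference, the dd test was roughly along the lines of the sketch below. This is not a copy of the exact commands I ran: the function name `seq_test`, the path `/mnt/tank/ddtest.bin`, and the 1 MiB block size are placeholders for illustration.

```shell
# Sketch of a sequential write-then-read test with dd.
# seq_test is a hypothetical helper name, not a FreeNAS command.
# Usage: seq_test <file on the pool> <size in MiB>
seq_test() {
    file="$1"
    count="$2"

    # Sequential write: <count> blocks of 1 MiB (1048576 bytes).
    # Note: /dev/zero compresses extremely well, so if compression is
    # enabled on the dataset the numbers will not reflect the disks.
    dd if=/dev/zero of="$file" bs=1048576 count="$count"

    # Sequential read of the same file, discarding the data.
    # The file should be larger than RAM so the read is not served
    # from cache (64 GiB vs. 24 GB of RAM in my case).
    dd if="$file" of=/dev/null bs=1048576
}

# Example (assumed pool mountpoint, 65536 x 1 MiB = 64 GiB):
#   seq_test /mnt/tank/ddtest.bin 65536
# While it runs, watch per-disk activity in a second terminal with gstat.
```

dd prints the elapsed time and throughput for each pass when it finishes, and the test file has to be deleted by hand afterwards.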

I am quite new to FreeNAS and still reading through the documentation, and I have run out of tests to try.

Are there any tests, or commands to pull up other info which may show the reason for the poor read performance?
