Unfortunately, I was unable to confirm gswa's configuration with a 3-drive RAID-Z1 array...

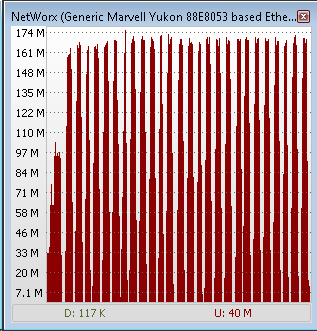

```
ZFS, all drives formatted with 4K (4096-byte) sectors (gnop)
ZFS RAID-Z1, 3x2TB (gnop)
-----------------------------------------------------------------
dd if=/dev/zero of=test.dat count=50k bs=2048k
107374182400 bytes transferred in 666.079431 secs (161203270 bytes/sec) -- 153.73 MiB/s

dd of=/dev/null if=test.dat count=50k bs=2048k
107374182400 bytes transferred in 529.579634 secs (202753610 bytes/sec) -- 193.36 MiB/s

iSCSI (Jumbo) -- UP (Avg 26Mb/s, Pk 90Mb/s) -- Peaky
```
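The MiB/s figures next to the dd results are just dd's bytes/sec divided by 1048576 (2^20), and the 107374182400-byte file size follows from count=50k times bs=2048k. A minimal sketch of that arithmetic, assuming a POSIX sh with awk available; the `throughput` function name is hypothetical and not part of dd:

```shell
#!/bin/sh
# Hypothetical helper: throughput BYTES SECS
# Prints bytes/sec (rounded to a whole number) and MiB/s (1 MiB = 1048576 bytes).
throughput() {
  awk -v b="$1" -v s="$2" 'BEGIN {
    bps = b / s
    printf "%.0f bytes/sec -- %.2f MiB/s\n", bps, bps / 1048576
  }'
}

# count=50k blocks of bs=2048k: 50*1024 blocks * 2048*1024 bytes = 100 GiB
echo "$((50 * 1024 * 2048 * 1024)) bytes"

throughput 107374182400 666.079431   # write test
throughput 107374182400 529.579634   # read test
```

Run as-is, it reproduces the bytes/sec values dd reported. For the write test it prints 153.74 MiB/s where the notes show 153.73, which looks like truncation rather than rounding in the original calculation.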