In TrueNAS I had a ZFS pool consisting of two Samsung 980 PRO NVMe SSDs.

I was fixing network issues and got kicked out while trying different subnets.

When I got back in via the shell, the ZFS pool was somehow unreachable. It showed as offline and not visible.

So I did something very stupid: I disconnected the pool so that I could mount it in a new TrueNAS installation.

But now the zpool seems to have fallen apart into separate `zfs_member` devices.

The data and the pool have not been touched otherwise.

The hardware is 2x 1 TB NVMe SSDs.

I have now installed Proxmox to keep the internet running, because I had wanted to run pfSense as a VM on TrueNAS.

Proxmox is installed on a separate SSD, so I can run shell commands without touching the NVMe drives.

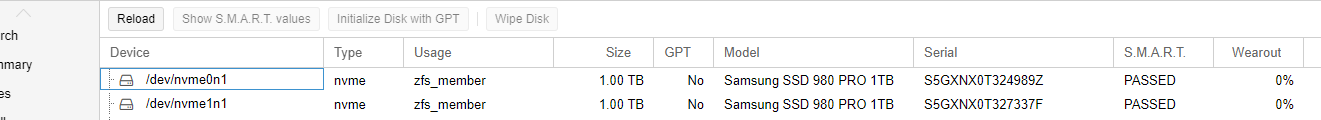

In Proxmox I can now see the two drives, both labeled as `zfs_member`.

I need to recover this data. Can I still access the two drives separately? Is there a way to combine them back into a zpool without destroying the data?

I am very stressed at the moment, because all of my kids' childhood photos are on these two disks.

Please help me fix this.