-

Important Announcement for the TrueNAS Community.

The TrueNAS Community has now been moved. This forum has become READ-ONLY for historical purposes. Please feel free to join us on the new TrueNAS Community Forums

You are using an out of date browser. It may not display this or other websites correctly.

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

Multiple ungraceful reboots and a temporarily unhealthy pool on "scrub-day"

- Thread starter Mastakilla

- Start date

Mastakilla

Patron

- Joined

- Jul 18, 2019

- Messages

- 203

Holy crap! I wasn't expecting to find someone else with the same problem tbh...same problem here.

Few things are the same with your issue.

1. HBA card, LSI SAS 9211-8i

2. Upgraded from Freenas to Truenas recently

3. Loop reboots during zpool scrubbing, when i stop the scrub, everything fine without reboot

i ordered a new HBA card LSI SAS 9300-8i, will make a test this weekend.

Although I agree with AlexGG that it doesn't sound like a software issue, it is not impossible either... And that someone else has this same problem does increase my suspicion towards it...

Can you provide some more details on your hardware details @normanlu? And what you have tried / investigated so far? Do you also get CKSUM errors during the scrub? Can you reproduce both CKSUM errors and the reboots? Or just the CKSUM errors like me?

Thanks!

Last edited:

my build:

CPU: intel i7-6700k

RAM: 64GB DDR4 non-ECC

HBA: LSI SAS 9211-8i

HDDs: 8x HGST 8TB (RAIDZ2)

Boot disk: Samsung 850evo 256GB

NIC: intel x710-da4

Freenas is virtualized to a PVE host (HBA and one port of x710 are passed through to Freenas) more than one year, solid stable, no issue at all.

After the upgrade from Freenas 11.3-U5 to Truenas core 12.0 release, last week, my PVE host get very unstable, the PVE host with all VMs reboot again and again...

what i did so far:

- Updated PVE, same problem

- Stop the Truenas VM, PVE and other VMs work very well without reboot

- Installed Truenas 12.0 to another SSD disk and replace the PVE boot disk, same problem

- Upgrade Truenas 12.0 to TrueNAS-12.1-Nightlies, same problem

i have ordered a new LSI SAS 9300-8i to replace LSI 9211-8i, because all HW components are shared with PVE host and VMs except HBA card, therefore i guess LSI 9211-8i most likely the root cause...

CPU: intel i7-6700k

RAM: 64GB DDR4 non-ECC

HBA: LSI SAS 9211-8i

HDDs: 8x HGST 8TB (RAIDZ2)

Boot disk: Samsung 850evo 256GB

NIC: intel x710-da4

Freenas is virtualized to a PVE host (HBA and one port of x710 are passed through to Freenas) more than one year, solid stable, no issue at all.

After the upgrade from Freenas 11.3-U5 to Truenas core 12.0 release, last week, my PVE host get very unstable, the PVE host with all VMs reboot again and again...

what i did so far:

- Updated PVE, same problem

- Stop the Truenas VM, PVE and other VMs work very well without reboot

- Installed Truenas 12.0 to another SSD disk and replace the PVE boot disk, same problem

- Upgrade Truenas 12.0 to TrueNAS-12.1-Nightlies, same problem

i have ordered a new LSI SAS 9300-8i to replace LSI 9211-8i, because all HW components are shared with PVE host and VMs except HBA card, therefore i guess LSI 9211-8i most likely the root cause...

@Mastakilla Were there any new features you needed that caused you to update your zfs pool?

Mastakilla

Patron

- Joined

- Jul 18, 2019

- Messages

- 203

@diversity : Not really no, I just didn't like potentially missing out, so after it ran ok for some weeks, I decided to update the feature flags. Now I realize that was too soon

@normanlu : Thanks for the details normanlu! Much appreciated... Do you have scrub checksum errors as well? Are you still able to try downgrading to FreeNAS11 for testing? Or did you also update your pools feature flags? How often do the reboots occur? How often do scrub checksum errors occur (if they do)?

Here are my findings so far (updates compared the previous overview in orange, latest updates in purple):

The catastrophy

@normanlu : Thanks for the details normanlu! Much appreciated... Do you have scrub checksum errors as well? Are you still able to try downgrading to FreeNAS11 for testing? Or did you also update your pools feature flags? How often do the reboots occur? How often do scrub checksum errors occur (if they do)?

Here are my findings so far (updates compared the previous overview in orange, latest updates in purple):

The catastrophy

- 28 November at 8h17, 8h21, 9h14, 9h18, 10h39 and 10h44 my TrueNAS server rebooted without properly shutting down

- 28 November from 4h00 till 29 November 1h30 a scrub ran which detected and fixed 8 errors on 5 different HDDs

- Reboots

- First time ever after about a year of uptime that my server has an unexpected reboot (6 actually!)

- "Recently" following changes were made to the server

- Intel Optane disk was added as SLOG about 2 months ago

- 32GB ECC RAM was replaced by 64GB ECC RAM about 1 month ago

- FreeNAS11 was upgraded to TrueNAS12

- Weird, unexpected, unlogged reboots often happen because of

- extreme RAM instability

- cheap / broken PSU

- maybe a broken motherboard or HBA?

- a bug in TrueNAS?

- Scrub

- My scrubs are scheduled monthly, so the added Optane disk certainly had a problem-free scrub last month, but the RAM and TrueNAS12 had their first scrub when it started acting weird

- I noticed that scrub checksum errors are cleared on reboot. (Is this a bug?)

- I noticed that sometimes TrueNAS tries to send emails on boot before the network interfaces are online (so maybe some scrub error mails didn't get through)

- As the scrub completed only after all those reboots, it seems like scrub automatically resumes after a crash / reboot, but it also seems like it requires the pool to be unlocked before it can start the resume (still need to confirm this). This could (partly?) explain why it took 21h30min to complete the 28 November scrub, compared to about 12h for all scrubs I did for testing afterwards.

- The "old" 32GB ECC RAM was tested with Memtest86 / Linux / TrueNAS12 and confirmed that

- it was 100% stable

- the platform fully supported ECC error reporting to the OS (both corrected and uncorrected errors) - on FreeNAS 11 my platform doesn't support ECC Error reporting

- The "new" 64GB ECC RAM was also tested with Memtest86 (100% stable and reporting both corrected / uncorrected ECC errors) and in TrueNAS12 (corrected ECC errors, uncorrected probably too, but I didn't test that long).

- I've done all recommended burn-in tests that I could find and the random access dd test (from solnet-array-test), for example, didn't error, but did make me aware of large duration differences between the HDDs, which caused me to suspect overheating (or another problem) of my HBA. So I replaced the HBA, added a fan to it and re-tested it. And the large duration difference between the HDDs was solved and the full burn-in test was consistently passed. In short, it is very well tested and, although I realize it (everything) is still possible, I'm reasonably confident my HBA is pretty ok now...

- I've checked the SMART values of all my HDDs and they are all 100% healthy

- In Windows, using Hard Disk Sentinel, I've performed an "Extended Self Test" of every HDD simultaneously, while running Prime95 (blended mode with AVX) at the same time. This took about 19 hours and was also 100% successful. This is an extreme test for testing

- RAM stability

- CPU stability

- PSU stability

- HDD health

- I've create a logging script for scrub and re-ran scrub on 7/8 December and it again found 4 errors on 2 different HDDs. There were no reboots this time.

- On 8 December, a couple hours later, I ran another scrub and it again found 1 error on yet another HDD. Again no reboots.

- On 10 December, I underclocked my RAM and ran scrub twice (the first time to clear out "old" checksum errors and the second time to confirm if the issue still occurs with underclocked RAM). And I still have checksum errors with the underclocked memory.

- On 11 December, I overclocked my RAM and confirmed that memory ECC errors get written to /var/log/messages

- On 12 December, I've been writing to the Optane-pool for the whole day (The Optane disk is not connected through the HBA). I did this by copying Windows and Program Files folders to it, copying and extracting a 95GB rar file, etc. So a mix of all kinds of files. The scrub afterwards didn't find any errors, so it seems like the issue only occurs for devices connected to the HBA.

- The scrub checksum errors

- are easy to reproduce. So something is certainly VERY wrong with my NAS.

- seem to accumulate over time. I think this means that

- The errors are not caused by scrub incorrectly detecting (and fixing) (non-)issues

- The errors are actually being written to my HDDs and scrub correctly detects and fixes them (for now at least)

- It seems like they are caused not by the HDDs themselves, but by something "before" the HDDs

- Could be the HBA. But as said earlier, this HBA was confirmed to be rock solid stable during burn-in testing.

- Could be the RAM, as that is the only new piece of hardware since the scrubs started failing. However the RAM was thoroughly tested with Memtest86 and I've confirmed that it properly reports memory ECC errors. So I'm quite sure it actually isn't the RAM.

- Could be something with the motherboard

- Could be because of my upgrade from FreeNAS11 to TrueNAS12

- The reboots seem harder to reproduce, no sure what exactly triggered them or to trigger them

- Perhaps the load of the scrub itself triggered them (but then I would expect them to occur during new scrubs as well)

- Probably the same hardware problem that is causing the checksum errors on my HDDs, also caused those reboots (accidently at the same time as the scrub ran)

- Run solnet-array-test again. If that triggers issues, then I'm sure it is not a problem of scrub itself. But maybe it won't trigger anything since it's read-only?

- Remove my Optane SLOG.

- Replace the HBA (I'll need to purchase a new one to try this)

- Replace the PSU (I still have a 11 year old Seasonic 850W PSU laying around)

- Upgrade my BIOS (Agesa 1.0.0.6 just got released some days ago)

- Replace the RAM (I can put my desktops non-ECC memory in it)

- Replace the motherboard (I'll need to purchase a new one to try this)

Last edited:

@Mastakilla, i think that my issue get resolved, replaced a PSU, pve and all VMs are running more than 36 hours no single unexpected reboot.

zpool scrub completed without any errors.

root@truenas[~]# zpool status -v LIN-NAS

pool: LIN-NAS

state: ONLINE

scan: scrub repaired 0B in 13:08:25 with 0 errors on Sun Dec 13 07:04:52 2020

config:

NAME STATE READ WRITE CKSUM

LIN-NAS ONLINE 0 0 0

raidz2-0 ONLINE 0 0 0

gptid/15893ce0-0074-11eb-8ac1-f8f21e427431 ONLINE 0 0 0

gptid/15a0772a-0074-11eb-8ac1-f8f21e427431 ONLINE 0 0 0

gptid/15f24eab-0074-11eb-8ac1-f8f21e427431 ONLINE 0 0 0

gptid/165cb4f1-0074-11eb-8ac1-f8f21e427431 ONLINE 0 0 0

gptid/166f8ee7-0074-11eb-8ac1-f8f21e427431 ONLINE 0 0 0

gptid/16c11ad5-0074-11eb-8ac1-f8f21e427431 ONLINE 0 0 0

gptid/16d88b41-0074-11eb-8ac1-f8f21e427431 ONLINE 0 0 0

gptid/16d30163-0074-11eb-8ac1-f8f21e427431 ONLINE 0 0 0

cache

gptid/2d7c608b-3c21-11eb-9a2a-f8f21e427430 ONLINE 0 0 0

errors: No known data errors

zpool scrub completed without any errors.

root@truenas[~]# zpool status -v LIN-NAS

pool: LIN-NAS

state: ONLINE

scan: scrub repaired 0B in 13:08:25 with 0 errors on Sun Dec 13 07:04:52 2020

config:

NAME STATE READ WRITE CKSUM

LIN-NAS ONLINE 0 0 0

raidz2-0 ONLINE 0 0 0

gptid/15893ce0-0074-11eb-8ac1-f8f21e427431 ONLINE 0 0 0

gptid/15a0772a-0074-11eb-8ac1-f8f21e427431 ONLINE 0 0 0

gptid/15f24eab-0074-11eb-8ac1-f8f21e427431 ONLINE 0 0 0

gptid/165cb4f1-0074-11eb-8ac1-f8f21e427431 ONLINE 0 0 0

gptid/166f8ee7-0074-11eb-8ac1-f8f21e427431 ONLINE 0 0 0

gptid/16c11ad5-0074-11eb-8ac1-f8f21e427431 ONLINE 0 0 0

gptid/16d88b41-0074-11eb-8ac1-f8f21e427431 ONLINE 0 0 0

gptid/16d30163-0074-11eb-8ac1-f8f21e427431 ONLINE 0 0 0

cache

gptid/2d7c608b-3c21-11eb-9a2a-f8f21e427430 ONLINE 0 0 0

errors: No known data errors

Mastakilla

Patron

- Joined

- Jul 18, 2019

- Messages

- 203

That is good news for you normanlu!

Did you have scrub checksum errors before replacing the PSU?

Else I think your issue is probably something different...

Did you have scrub checksum errors before replacing the PSU?

Else I think your issue is probably something different...

Mastakilla

Patron

- Joined

- Jul 18, 2019

- Messages

- 203

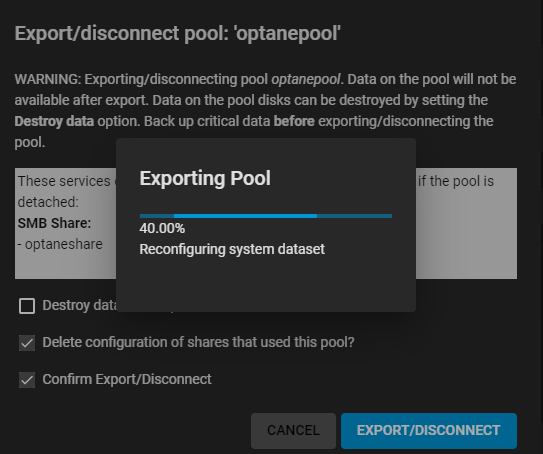

I just wanted to disconnect my Optane disk and tried exporting my pool on this device, but it seems to hang at "Reconfiguring system dataset"... Anyone have an suggestions on how to proceed?

/var/log/daemon.log

/var/log/middlewared.log

edit: I've gotten tired of waiting and did an 'init 6'

edit 2: After reboot, the export / disconnect of the pool worked without problem

/var/log/daemon.log

Code:

Dec 13 16:11:32 data 1 2020-12-13T16:11:32.179921+01:00 data.local avahi-daemon 1956 - - Files changed, reloading. Dec 13 16:11:32 data 1 2020-12-13T16:11:32.179944+01:00 data.local avahi-daemon 1956 - - Service group file /usr/local/etc/avahi/services/SMB.service vanished, removing services. Dec 13 16:11:32 data 1 2020-12-13T16:11:32.179983+01:00 data.local avahi-daemon 1956 - - Service group file /usr/local/etc/avahi/services/SSH.service changed, reloading. Dec 13 16:11:32 data 1 2020-12-13T16:11:32.180037+01:00 data.local avahi-daemon 1956 - - Service group file /usr/local/etc/avahi/services/SFTP_SSH.service changed, reloading. Dec 13 16:11:32 data 1 2020-12-13T16:11:32.180075+01:00 data.local avahi-daemon 1956 - - Service group file /usr/local/etc/avahi/services/ISCSITARGET.service changed, reloading. Dec 13 16:11:32 data 1 2020-12-13T16:11:32.180111+01:00 data.local avahi-daemon 1956 - - Service group file /usr/local/etc/avahi/services/MIDDLEWARE_SSL.service changed, reloading. Dec 13 16:11:32 data 1 2020-12-13T16:11:32.180150+01:00 data.local avahi-daemon 1956 - - Service group file /usr/local/etc/avahi/services/MIDDLEWARE.service changed, reloading. Dec 13 16:11:32 data 1 2020-12-13T16:11:32.180195+01:00 data.local avahi-daemon 1956 - - Service group file /usr/local/etc/avahi/services/HTTPS.service changed, reloading. Dec 13 16:11:32 data 1 2020-12-13T16:11:32.180238+01:00 data.local avahi-daemon 1956 - - Service group file /usr/local/etc/avahi/services/HTTP.service changed, reloading. Dec 13 16:11:32 data 1 2020-12-13T16:11:32.180283+01:00 data.local avahi-daemon 1956 - - Service group file /usr/local/etc/avahi/services/DEV_INFO.service changed, reloading. Dec 13 16:11:32 data 1 2020-12-13T16:11:32.681745+01:00 data.local avahi-daemon 1956 - - Files changed, reloading. Dec 13 16:11:32 data 1 2020-12-13T16:11:32.682212+01:00 data.local avahi-daemon 1956 - - Got SIGHUP, reloading. Dec 13 16:11:33 data 1 2020-12-13T16:11:33.438412+01:00 data.local avahi-daemon 1956 - - Service "data" (/usr/local/etc/avahi/services/SSH.service) successfully established. Dec 13 16:11:33 data 1 2020-12-13T16:11:33.438454+01:00 data.local avahi-daemon 1956 - - Service "data" (/usr/local/etc/avahi/services/SFTP_SSH.service) successfully established. Dec 13 16:11:33 data 1 2020-12-13T16:11:33.438484+01:00 data.local avahi-daemon 1956 - - Service "data" (/usr/local/etc/avahi/services/ISCSITARGET.service) successfully established. Dec 13 16:11:33 data 1 2020-12-13T16:11:33.438516+01:00 data.local avahi-daemon 1956 - - Service "data" (/usr/local/etc/avahi/services/MIDDLEWARE_SSL.service) successfully established. Dec 13 16:11:33 data 1 2020-12-13T16:11:33.438555+01:00 data.local avahi-daemon 1956 - - Service "data" (/usr/local/etc/avahi/services/MIDDLEWARE.service) successfully established. Dec 13 16:11:33 data 1 2020-12-13T16:11:33.438597+01:00 data.local avahi-daemon 1956 - - Service "data" (/usr/local/etc/avahi/services/HTTPS.service) successfully established. Dec 13 16:11:33 data 1 2020-12-13T16:11:33.438641+01:00 data.local avahi-daemon 1956 - - Service "data" (/usr/local/etc/avahi/services/HTTP.service) successfully established. Dec 13 16:11:33 data 1 2020-12-13T16:11:33.438687+01:00 data.local avahi-daemon 1956 - - Service "data" (/usr/local/etc/avahi/services/DEV_INFO.service) successfully established. Dec 13 16:12:07 data 1 2020-12-13T16:12:07.044193+01:00 data.local collectd 47196 - - plugin_load: plugin "syslog" successfully loaded. Dec 13 16:12:07 data 1 2020-12-13T16:12:07.044338+01:00 data.local collectd 47196 - - plugin_load: plugin "threshold" successfully loaded. Dec 13 16:12:07 data 1 2020-12-13T16:12:07.044380+01:00 data.local collectd 47196 - - plugin_load: plugin "zfs_arc" successfully loaded. Dec 13 16:12:07 data 1 2020-12-13T16:12:07.044419+01:00 data.local collectd 47196 - - plugin_load: plugin "nfsstat" successfully loaded. Dec 13 16:12:07 data 1 2020-12-13T16:12:07.044465+01:00 data.local collectd 47196 - - plugin_load: plugin "write_graphite" successfully loaded. Dec 13 16:12:07 data 1 2020-12-13T16:12:07.044504+01:00 data.local collectd 47196 - - plugin_load: plugin "cputemp" successfully loaded. Dec 13 16:12:07 data 1 2020-12-13T16:12:07.044551+01:00 data.local collectd 47196 - - plugin_load: plugin "ctl" successfully loaded. Dec 13 16:12:07 data 1 2020-12-13T16:12:07.044599+01:00 data.local collectd 47196 - - plugin_load: plugin "geom_stat" successfully loaded. Dec 13 16:12:07 data 1 2020-12-13T16:12:07.044707+01:00 data.local collectd 47196 - - plugin_load: plugin "nut" successfully loaded. Dec 13 16:12:07 data 1 2020-12-13T16:12:07.045559+01:00 data.local collectd 47196 - - plugin_load: plugin "python" successfully loaded. Dec 13 16:12:07 data 1 2020-12-13T16:12:07.045613+01:00 data.local collectd 47196 - - plugin_load: plugin "zfs_arc_v2" successfully loaded. Dec 13 16:12:07 data 1 2020-12-13T16:12:07.045620+01:00 data.local collectd 47196 - - plugin = syslog, key = LogLevel, value = err Dec 13 16:12:13 data 1 2020-12-13T16:12:13.634422+01:00 data.local avahi-daemon 1956 - - Files changed, reloading. Dec 13 16:12:13 data 1 2020-12-13T16:12:13.634460+01:00 data.local avahi-daemon 1956 - - Service group file /usr/local/etc/avahi/services/SSH.service changed, reloading. Dec 13 16:12:13 data 1 2020-12-13T16:12:13.634531+01:00 data.local avahi-daemon 1956 - - Service group file /usr/local/etc/avahi/services/SFTP_SSH.service changed, reloading. Dec 13 16:12:13 data 1 2020-12-13T16:12:13.634592+01:00 data.local avahi-daemon 1956 - - Service group file /usr/local/etc/avahi/services/ISCSITARGET.service changed, reloading. Dec 13 16:12:13 data 1 2020-12-13T16:12:13.634639+01:00 data.local avahi-daemon 1956 - - Service group file /usr/local/etc/avahi/services/MIDDLEWARE_SSL.service changed, reloading. Dec 13 16:12:13 data 1 2020-12-13T16:12:13.634705+01:00 data.local avahi-daemon 1956 - - Service group file /usr/local/etc/avahi/services/MIDDLEWARE.service changed, reloading. Dec 13 16:12:13 data 1 2020-12-13T16:12:13.634751+01:00 data.local avahi-daemon 1956 - - Service group file /usr/local/etc/avahi/services/HTTPS.service changed, reloading. Dec 13 16:12:13 data 1 2020-12-13T16:12:13.634792+01:00 data.local avahi-daemon 1956 - - Service group file /usr/local/etc/avahi/services/HTTP.service changed, reloading. Dec 13 16:12:13 data 1 2020-12-13T16:12:13.634835+01:00 data.local avahi-daemon 1956 - - Service group file /usr/local/etc/avahi/services/DEV_INFO.service changed, reloading. Dec 13 16:12:13 data 1 2020-12-13T16:12:13.634939+01:00 data.local avahi-daemon 1956 - - Loading service file /usr/local/etc/avahi/services/SMB.service. Dec 13 16:12:14 data 1 2020-12-13T16:12:14.137588+01:00 data.local avahi-daemon 1956 - - Files changed, reloading. Dec 13 16:12:14 data 1 2020-12-13T16:12:14.138014+01:00 data.local avahi-daemon 1956 - - Got SIGHUP, reloading. Dec 13 16:12:14 data 1 2020-12-13T16:12:14.893778+01:00 data.local avahi-daemon 1956 - - Service "data" (/usr/local/etc/avahi/services/SMB.service) successfully established. Dec 13 16:12:14 data 1 2020-12-13T16:12:14.893827+01:00 data.local avahi-daemon 1956 - - Service "data" (/usr/local/etc/avahi/services/SSH.service) successfully established. Dec 13 16:12:14 data 1 2020-12-13T16:12:14.893862+01:00 data.local avahi-daemon 1956 - - Service "data" (/usr/local/etc/avahi/services/SFTP_SSH.service) successfully established. Dec 13 16:12:14 data 1 2020-12-13T16:12:14.893900+01:00 data.local avahi-daemon 1956 - - Service "data" (/usr/local/etc/avahi/services/ISCSITARGET.service) successfully established. Dec 13 16:12:14 data 1 2020-12-13T16:12:14.893936+01:00 data.local avahi-daemon 1956 - - Service "data" (/usr/local/etc/avahi/services/MIDDLEWARE_SSL.service) successfully established. Dec 13 16:12:14 data 1 2020-12-13T16:12:14.893985+01:00 data.local avahi-daemon 1956 - - Service "data" (/usr/local/etc/avahi/services/MIDDLEWARE.service) successfully established. Dec 13 16:12:14 data 1 2020-12-13T16:12:14.894042+01:00 data.local avahi-daemon 1956 - - Service "data" (/usr/local/etc/avahi/services/HTTPS.service) successfully established. Dec 13 16:12:14 data 1 2020-12-13T16:12:14.894094+01:00 data.local avahi-daemon 1956 - - Service "data" (/usr/local/etc/avahi/services/HTTP.service) successfully established. Dec 13 16:12:14 data 1 2020-12-13T16:12:14.894152+01:00 data.local avahi-daemon 1956 - - Service "data" (/usr/local/etc/avahi/services/DEV_INFO.service) successfully established.

/var/log/middlewared.log

Code:

[2020/12/13 16:11:29] (WARNING) middlewared.plugins.service_.services.base_freebsd.freebsd_service():134 - nmbd forcestop failed with code 1: 'nmbd not running? (check /var/run/samba4/nmbd.pid).\n' [2020/12/13 16:11:30] (DEBUG) EtcService.generate():375 - No new changes for /etc/local/avahi/avahi-daemon.conf [2020/12/13 16:11:32] (WARNING) middlewared.plugins.service_.services.base_freebsd.freebsd_service():134 - collectd-daemon forcestop failed with code 1: 'collectd_daemon not running? (check /var/run/collectd-daemon.pid).\n' [2020/12/13 16:12:06] (DEBUG) EtcService.generate():375 - No new changes for /etc/local/collectd.conf [2020/12/13 16:12:11] (DEBUG) EtcService.generate():375 - No new changes for /etc/local/smb4.conf [2020/12/13 16:12:11] (DEBUG) EtcService.generate():375 - No new changes for /etc/security/pam_winbind.conf [2020/12/13 16:12:12] (DEBUG) EtcService.generate():375 - No new changes for /etc/local/avahi/avahi-daemon.conf [2020/12/13 16:12:13] (DEBUG) SMBService.add_admin_group():86 - No cache entry indicating delayed action to add admin_group was found. [2020/12/13 16:42:51] (DEBUG) freenasOS.Configuration.TryGetNetworkFile():606 - TryGetNetworkFile(['https://update-master.ixsystems.com/updates/ix_crl.pem']) [2020/12/13 16:42:51] (DEBUG) urllib3.connectionpool._new_conn():955 - Starting new HTTPS connection (1): update-master.ixsystems.com:443 [2020/12/13 16:42:52] (DEBUG) urllib3.connectionpool._make_request():428 - https://update-master.ixsystems.com:443 "GET /updates/ix_crl.pem HTTP/1.1" 200 1028 [2020/12/13 16:42:52] (DEBUG) freenasOS.Configuration.CheckFreeSpace():77 - CheckFreeSpace(path=/tmp/tmpwj0lylt6.pem, pool=None, required=1028) [2020/12/13 16:42:52] (DEBUG) freenasOS.Configuration.TryGetNetworkFile():745 - TryGetNetworkFile(['https://update-master.ixsystems.com/updates/ix_crl.pem']): Read 1028 bytes total [2020/12/13 16:42:53] (DEBUG) freenasOS.Configuration.TryGetNetworkFile():606 - TryGetNetworkFile(['https://update-master.ixsystems.com/updates/ix_crl.pem']) [2020/12/13 16:42:53] (DEBUG) urllib3.connectionpool._new_conn():955 - Starting new HTTPS connection (1): update-master.ixsystems.com:443 [2020/12/13 16:42:53] (DEBUG) urllib3.connectionpool._make_request():428 - https://update-master.ixsystems.com:443 "GET /updates/ix_crl.pem HTTP/1.1" 200 1028 [2020/12/13 16:42:53] (DEBUG) freenasOS.Configuration.CheckFreeSpace():77 - CheckFreeSpace(path=/tmp/tmp7qb8wquy.pem, pool=None, required=1028) [2020/12/13 16:42:53] (DEBUG) freenasOS.Configuration.TryGetNetworkFile():745 - TryGetNetworkFile(['https://update-master.ixsystems.com/updates/ix_crl.pem']): Read 1028 bytes total

edit: I've gotten tired of waiting and did an 'init 6'

edit 2: After reboot, the export / disconnect of the pool worked without problem

Last edited:

Mastakilla

Patron

- Joined

- Jul 18, 2019

- Messages

- 203

Here are my findings so far (updates compared the previous overview in orange):

The catastrophy

The catastrophy

- 28 November at 8h17, 8h21, 9h14, 9h18, 10h39 and 10h44 my TrueNAS server rebooted without properly shutting down

- 28 November from 4h00 till 29 November 1h30 a scrub ran which detected and fixed 8 errors on 5 different HDDs

- Reboots

- First time ever after about a year of uptime that my server has an unexpected reboot (6 actually!)

- "Recently" following changes were made to the server

- Intel Optane disk was added as SLOG about 2 months ago

- 32GB ECC RAM was replaced by 64GB ECC RAM about 1 month ago

- FreeNAS11 was upgraded to TrueNAS12

- Weird, unexpected, unlogged reboots often happen because of

- extreme RAM instability

- cheap / broken PSU

- maybe a broken motherboard or HBA?

- a bug in TrueNAS?

- Scrub

- My scrubs are scheduled monthly, so the added Optane disk certainly had a problem-free scrub last month, but the RAM and TrueNAS12 had their first scrub when it started acting weird

- I noticed that scrub checksum errors are cleared on reboot. (Is this a bug?)

- I noticed that sometimes TrueNAS tries to send emails on boot before the network interfaces are online (so maybe some scrub error mails didn't get through)

- As the scrub completed only after all those reboots, it seems like scrub automatically resumes after a crash / reboot, but it also seems like it requires the pool to be unlocked before it can start the resume (still need to confirm this). This could (partly?) explain why it took 21h30min to complete the 28 November scrub, compared to about 12h for all scrubs I did for testing afterwards.

- The "old" 32GB ECC RAM was tested with Memtest86 / Linux / TrueNAS12 and confirmed that

- it was 100% stable

- the platform fully supported ECC error reporting to the OS (both corrected and uncorrected errors) - on FreeNAS 11 my platform doesn't support ECC Error reporting

- The "new" 64GB ECC RAM was also tested with Memtest86 (100% stable and reporting both corrected / uncorrected ECC errors) and in TrueNAS12 (corrected ECC errors, uncorrected probably too, but I didn't test that long).

- I've done all recommended burn-in tests that I could find and the random access dd test (from solnet-array-test), for example, didn't error, but did make me aware of large duration differences between the HDDs, which caused me to suspect overheating (or another problem) of my HBA. So I replaced the HBA, added a fan to it and re-tested it. And the large duration difference between the HDDs was solved and the full burn-in test was consistently passed. In short, it is very well tested and, although I realize it (everything) is still possible, I'm reasonably confident my HBA is pretty ok now...

- I've checked the SMART values of all my HDDs and they are all 100% healthy

- In Windows, using Hard Disk Sentinel, I've performed an "Extended Self Test" of every HDD simultaneously, while running Prime95 (blended mode with AVX) at the same time. This took about 19 hours and was also 100% successful. This is an extreme test for testing

- RAM stability

- CPU stability

- PSU stability

- HDD health

- I've create a logging script for scrub and re-ran scrub on 7/8 December and it again found 4 errors on 2 different HDDs. There were no reboots this time.

- On 8 December, a couple hours later, I ran another scrub and it again found 1 error on yet another HDD. Again no reboots.

- On 10 December, I underclocked my RAM and ran scrub twice (the first time to clear out "old" checksum errors and the second time to confirm if the issue still occurs with underclocked RAM). And I still have checksum errors with the underclocked memory.

- On 11 December, I overclocked my RAM and confirmed that memory ECC errors get written to /var/log/messages

- On 12 December, I've been writing to the Optane-pool for the whole day (The Optane disk is not connected through the HBA). I did this by copying Windows and Program Files folders to it, copying and extracting a 95GB rar file, etc. So a mix of all kinds of files. The scrub afterwards didn't find any errors, so it seems like the issue only occurs for devices connected to the HBA.

- On 13 December, I've forced "Power Supply Idle Control" to "Typical Current Idle" in the BIOS. Although I'm unable to reproduce the reboots, I've read some reports about my motherboard that this setting sometimes helps against unexpected reboots.

- The scrub checksum errors

- are easy to reproduce. So something is certainly VERY wrong with my NAS.

- seem to accumulate over time. I think this means that

- The errors are not caused by scrub incorrectly detecting (and fixing) (non-)issues

- The errors are actually being written to my HDDs and scrub correctly detects and fixes them (for now at least)

- It seems like they are caused not by the HDDs themselves, but by something "before" the HDDs

- Could be the HBA. But as said earlier, this HBA was confirmed to be rock solid stable during burn-in testing.

- Could be the RAM, as that is the only new piece of hardware since the scrubs started failing. However the RAM was thoroughly tested with Memtest86 and I've confirmed that it properly reports memory ECC errors. So I'm quite sure it actually isn't the RAM.

- Could be something with the motherboard

- Could be because of my upgrade from FreeNAS11 to TrueNAS12

- The reboots seem harder to reproduce, no sure what exactly triggered them or to trigger them

- Perhaps the load of the scrub itself triggered them (but then I would expect them to occur during new scrubs as well)

- Probably the same hardware problem that is causing the checksum errors on my HDDs, also caused those reboots (accidently at the same time as the scrub ran)

- I've just completely removed my Optane device from the system and restored the "PCIe x16/2x8/4x4 Switch" setting in the BIOS from "2x8" back to the default "1x16" (this enables 16 PCIe lanes for my HBA instead of sharing the lanes with the Optane device). I'm now re-running scrub twice to see if this helps...

- Upgrade to TrueNAS12U1

- Run solnet-array-test again. If that triggers issues, then I'm sure it is not a problem of scrub itself. But maybe it won't trigger anything since it's read-only?

- Replace the HBA (I'll need to purchase a new one to try this)

- Replace the PSU (I still have a 11 year old Seasonic 850W PSU from my old desktop)

- Upgrade my BIOS and IPMI (Agesa 1.0.0.6 just got released some days ago)

- Replace the RAM (I can put my desktops non-ECC memory in it)

- Replace the motherboard (I'll need to purchase a new one to try this)

- Re-install FreeNAS 11 instead of TrueNAS 12 (I've started running extended self tests on all my external offline-backup HDDs to prepare for this. I hope it will not need to come this far)

Last edited:

AlexGG

Contributor

- Joined

- Dec 13, 2018

- Messages

- 171

I still have a 11 year old Seasonic 850W PSU from my old desktop

11 years is a little bit on the old side.

Mastakilla

Patron

- Joined

- Jul 18, 2019

- Messages

- 203

It's just for testing (so temporarily) and it was an extremely good PSU back then (Seasonic M12D-850). So I think it should do fine...

Although my motherboard is known to be very sensitive to which PSU you use (even brand new ones sometimes don't work), so we'll see...

Hopefully I can solve the issue before I have to try that, as it would be a lot of work (uninstalling it from my old desktop and then replacing the PSU in my new server is quite some work)

Although my motherboard is known to be very sensitive to which PSU you use (even brand new ones sometimes don't work), so we'll see...

Hopefully I can solve the issue before I have to try that, as it would be a lot of work (uninstalling it from my old desktop and then replacing the PSU in my new server is quite some work)

Mastakilla

Patron

- Joined

- Jul 18, 2019

- Messages

- 203

I first thought it was because of the scrub that I started after removing the Optane (although even with the scrub running performance normally remains "ok"), but even now the first scrub is completed, performance stays horrible...

I tried finding any tasks the might be running at this time, but couldn't find anything. Also when not doing anything, the HDDs go nearly silent (indicating that no unknown background task is running)

Performance went

from about 800MB/sec to 110MB/sec for a large file copy from pool to my desktops SSD.from about 800MB/sec to 80MB/sec for a large file copy from my desktops SSD to the poolfrom about 100-200MB/sec to 25MB/sec for a large file copy from pool to the same poolAlso the first scrub itself took much longer than "usual" again (20h instead of the usual 12h). There were no reboots though...

Is there something I must do to make TrueNAS "fix" that it now runs without SLOG? I removed the SLOG through the GUI, as instructed...

I forgot to disable sync=always

Last edited:

Mastakilla

Patron

- Joined

- Jul 18, 2019

- Messages

- 203

During the 2nd scrub after removing the Optane SLOG, my server has rebooted twice again. As before, there were 4-5 minutes between the 2 reboots. Really weird that the reboots always come in pairs, no?

I've learned a couple things from this:

- The BIOS tips I found regarding unplanned reboots for my motherboard do not help for me

- Advanced - AMD CBS - CPU Common Options - Global C-state Control -> Disabled (actually this was the default)

- Advanced - AMD CBS - CPU Common Options - Power Supply Idle Control -> Typical Current Idle

- Completely removing the Optane drive has no effect at all. The server has rebooted again and also has found an error again.

- I noticed that after a reboot, the scrub actually restarts instead of resumes. This might explain the 21h30min duration of my scrub on 28 November (which had a lot of reboots)

Last edited:

AlexGG

Contributor

- Joined

- Dec 13, 2018

- Messages

- 171

Really weird that the reboots always come in pairs, no?

Something needs to cool down, maybe. At this point in the story, my first choice is power supply.

Mastakilla

Patron

- Joined

- Jul 18, 2019

- Messages

- 203

Earlier today, while I was simultaneously transferring and scrubbing for many hours, I tried touching my HBA heatsink. It was warm, but I could keep my finger on it. And yes, I did replace the cooling paste after buying it (as these HBAs are often very old). So HBA temp should be ok I think.

The CPU only goes till 30% usage max during a scrub, so I doubt it is stressing the PSU very much... Certainly nothing compared to the stress I've put on it when running Prime95 while running the extended self test on all disks simultaneously.

But yes, you're right, PSU is certainly something I need to try replacing (still not looking forward to that)

(still not looking forward to that)

I will try some other things first though... Like upgrading to TrueNAS12U1, upgrading my BIOS and IPMI.And I'll also re-insert the Optane, as everything is superslow atm.

Edit:

I've had some more reboots since removing the Optane, so it seems like "reproducing the reboots" has become "easier" since removing the Optane.So although performance is horrible atm, I think I'll keep the Optane out for now because it will be easier to see if something solves the issues...

Edit2:

That my NAS became slow after removing the SLOG was just a stupid user error (forgot to turn off Sync=always). So far no reboots though, since I've turned off sync=always

The CPU only goes till 30% usage max during a scrub, so I doubt it is stressing the PSU very much... Certainly nothing compared to the stress I've put on it when running Prime95 while running the extended self test on all disks simultaneously.

But yes, you're right, PSU is certainly something I need to try replacing

I will try some other things first though... Like upgrading to TrueNAS12U1, upgrading my BIOS and IPMI.

Edit:

I've had some more reboots since removing the Optane, so it seems like "reproducing the reboots" has become "easier" since removing the Optane.

Edit2:

That my NAS became slow after removing the SLOG was just a stupid user error (forgot to turn off Sync=always). So far no reboots though, since I've turned off sync=always

Last edited:

Mastakilla

Patron

- Joined

- Jul 18, 2019

- Messages

- 203

Here are my findings so far (updates compared the previous overview in orange):

The catastrophy

The catastrophy

- 28 November at 8h17, 8h21, 9h14, 9h18, 10h39 and 10h44 my TrueNAS server rebooted without properly shutting down

- 28 November from 4h00 till 29 November 1h30 a scrub ran which detected and fixed 8 errors on 5 different HDDs

- Reboots

- First time ever after about a year of uptime that my server has an unexpected reboot (6 actually!)

- "Recently" following changes were made to the server

- Intel Optane disk was added as SLOG about 2 months ago

- 32GB ECC RAM was replaced by 64GB ECC RAM about 1 month ago

- FreeNAS11 was upgraded to TrueNAS12

- Weird, unexpected, unlogged reboots often happen because of

- extreme RAM instability

- cheap / broken PSU

- maybe a broken motherboard or HBA?

- a bug in TrueNAS?

- Scrub

- My scrubs are scheduled monthly, so the added Optane disk certainly had a problem-free scrub last month, but the RAM and TrueNAS12 had their first scrub when it started acting weird

- I noticed that scrub checksum errors are cleared on reboot. (Is this a bug?)

- I noticed that sometimes TrueNAS tries to send emails on boot before the network interfaces are online (so maybe some scrub error mails didn't get through)

- As the scrub completed only after all those reboots,

it seems likescrub automaticallyresumesrestarts after a crash / reboot, butit also seems likeit requires the pool to be unlocked before it can start theresumerestart(still need to confirm this). Thiscould (partly?)explains why it took 21h30min to complete the 28 November scrub, compared to about 12h for all scrubs I did for testing afterwards.

- The "old" 32GB ECC RAM was tested with Memtest86 / Linux / TrueNAS12 and confirmed that

- it was 100% stable

- the platform fully supported ECC error reporting to the OS (both corrected and uncorrected errors) - on FreeNAS 11 my platform doesn't support ECC Error reporting

- The "new" 64GB ECC RAM was also tested with Memtest86 (100% stable and reporting both corrected / uncorrected ECC errors) and in TrueNAS12 (corrected ECC errors, uncorrected probably too, but I didn't test that long and they occur a lot less frequently).

- I've done all recommended burn-in tests that I could find and the random access dd test (from solnet-array-test), for example, didn't error, but did make me aware of large duration differences between the HDDs, which caused me to suspect overheating (or another problem) of my HBA. So I replaced the HBA, added a fan to it and re-tested it. And the large duration difference between the HDDs was solved and the full burn-in test was consistently passed. In short, it is very well tested and, although I realize it (everything) is still possible, I'm reasonably confident my HBA is pretty ok now... (starting to doubt a bit about this)

- I've checked the SMART values of all my HDDs and they are all 100% healthy

- In Windows, using Hard Disk Sentinel, I've performed an "Extended Self Test" of every HDD simultaneously, while running Prime95 (blended mode with AVX) at the same time. This took about 19 hours and was also 100% successful. This is an extreme test for testing

- RAM stability

- CPU stability

- PSU stability

- HDD health

- I've create a logging script for scrub and re-ran scrub on 7/8 December and it again found 4 errors on 2 different HDDs. There were no reboots this time.

- On 8 December, a couple hours later, I ran another scrub and it again found 1 error on yet another HDD. Again no reboots.

- On 10 December, I underclocked my RAM and ran scrub twice (the first time to clear out "old" checksum errors and the second time to confirm if the issue still occurs with underclocked RAM). And I still have checksum errors with the underclocked memory.

- On 11 December, I overclocked my RAM and confirmed that memory ECC errors get written to /var/log/messages (now I'm quite convinced my RAM is not the problem)

- On 12 December, I've been writing to the Optane-pool for the whole day (The Optane disk is not connected through the HBA). I did this by copying Windows and Program Files folders to it, copying and extracting a 95GB rar file, etc. So a mix of all kinds of files. The scrub afterwards didn't find any errors, so it seems like the issue only occurs for devices connected to the HBA.

- On 13 December, I've forced "Power Supply Idle Control" to "Typical Current Idle" in the BIOS. Although I'm unable to reproduce the reboots, I've read some reports about my motherboard that this setting sometimes helps against unexpected reboots. (nope, it doesn't)

- On 13/14 December I've completely removed my Optane device from the system and restored the "PCIe x16/2x8/4x4 Switch" setting in the BIOS from "2x8" back to the default "1x16" (this enables 16 PCIe lanes for my HBA instead of sharing the lanes with the Optane device). I ran the scrub again twice and had many checksum errors and again 2 reboots!

- On 15/16 December I've upgraded my BIOS, upgraded the IPMI firmware, upgraded from TrueNAS12 to TrueNAS12U1 and I've changed sync=always from my pool to sync=standard (I forgot this when removing my Optane). I again ran 2 scrubs and during the second run I "only" had 1 checksum error and no reboots. So it seems sync=always "increases" the problem. This indicates that it is "load-related" I think.

- On 17 December I've run a night of solnet-array-test, not enough to go through the entire test (I don't think it reached the seek test already), but I wanted to try something else first. No reboots occured.

- On 18 December I've removed my HBA from the computer for a visual inspection and re-attached all cables to both HBA and HDDs. Also I've added a screamingly loud 120mm Delta server fan and directed it to the HBA / chipset / NIC. If it's something temperature related, then this should cover that

Second scrub unfortunately did find 1 error again.

- On 20 December I've attached my old desktops 850W PSU. It boots... Will check soon if it helps against the issues...

- The scrub checksum errors

- are easy to reproduce. So something is certainly VERY wrong with my NAS.

- seem to accumulate over time. I think this means that

- The errors are not caused by scrub incorrectly detecting (and fixing) (non-)issues

- The errors are actually being written to my HDDs and scrub correctly detects and fixes them (for now at least)

- It seems like they are caused not by the HDDs themselves, but by something "before" the HDDs

- Could be the HBA. But as said earlier, this HBA was confirmed to be rock solid stable during burn-in testing.

- Could be the RAM, as that is the only new piece of hardware since the scrubs started failing. However the RAM was thoroughly tested with Memtest86 and I've confirmed that it properly reports memory ECC errors. So also RAM seems unlikely (but as always with computers, not impossible)

- Could be something with the motherboard

- Could be something with the PSU (although it is rock solid stable during extreme load)

- Could be because of my upgrade from FreeNAS11 to TrueNAS12

- It seems like sync=always without a SLOG device puts might increase amount of checksum errors (or one of my updates to the BIOS / IPMI / TrueNAS decreased them)

- The reboots seem harder to reproduce, no sure what exactly triggered them or how to trigger them

- Perhaps the load of the scrub itself triggered them (but then I would expect them to occur during new scrubs as well)

- Probably the same hardware problem that is causing the checksum errors on my HDDs, also caused those reboots (accidently at the same time as the scrub ran)

- It seems like sync=always without a SLOG device puts might increase the chance for a reboot (or one of my updates to the BIOS / IPMI / TrueNAS decreased them)

- Run solnet-array-test again. If that triggers reboots, then I'm sure it is not a problem of scrub itself. But maybe it won't trigger anything since it's read-only?

- I've bought below heatsink for the HBA. Could take a month before it gets here though (ali).

- Replace the HBA (I'll need to purchase a new one to try this)

- Replace the PSU (I still have a 11 year old Seasonic 850W PSU from my old desktop)

- Replace the RAM (I can temporary put my new desktops non-ECC memory in it for testing)

- Replace the motherboard (I'll need to purchase a new one to try this)

- Re-install FreeNAS 11 instead of TrueNAS 12 (I've started running extended self tests on all my external offline-backup HDDs to prepare for this. I hope it will not need to come this far)

Last edited:

Mastakilla

Patron

- Joined

- Jul 18, 2019

- Messages

- 203

Here are my findings so far (updates compared the previous overview in orange):

The catastrophy

The catastrophy

- 28 November at 8h17, 8h21, 9h14, 9h18, 10h39 and 10h44 my TrueNAS server rebooted without properly shutting down

- 28 November from 4h00 till 29 November 1h30 a scrub ran which detected and fixed 8 errors on 5 different HDDs

- Reboots

- First time ever after about a year of uptime that my server has an unexpected reboot (6 actually!)

- "Recently" following changes were made to the server

- Intel Optane disk was added as SLOG about 2 months ago (before the last problem-less scrub)

- 32GB ECC RAM was replaced by 64GB ECC RAM about 1 month ago (after the last problem-less scrub)

- FreeNAS11 was upgraded to TrueNAS12 (after the last problem-less scrub)

- Weird, unexpected, unlogged reboots often happen because of

- extreme RAM instability

- cheap / broken PSU

- maybe a broken motherboard or HBA?

- a bug in TrueNAS?

- Scrub

- My scrubs are scheduled monthly

- I noticed that scrub checksum errors are cleared on reboot. (Is this a bug?)

- I noticed that sometimes TrueNAS tries to send emails on boot before the network interfaces are online (so maybe some scrub error mails didn't get through)

- Scrub automatically restarts after a reboot / crash during a scrub, but it requires the encrypted pool to be unlocked first.

- The "old" 32GB ECC RAM was tested with Memtest86 / Linux / TrueNAS12 and confirmed that

- it was 100% stable

- the platform fully supported ECC error reporting to the OS (both corrected and uncorrected errors) - on FreeNAS 11 my platform doesn't support ECC Error reporting

- The "new" 64GB ECC RAM was also tested with Memtest86 (100% stable and reporting both corrected / uncorrected ECC errors) and in TrueNAS12 (corrected ECC errors, uncorrected probably too, but I didn't test that long and they occur a lot less frequently).

- I've done all recommended burn-in tests that I could find (solnet-array-test and badblocks)

- In the 1 year that I have this server, I've already had 2 HBAs. The first HBA was not actively cooled and I noticed performance inconsistencies during the solnet-array-test burn-in. After replacing the first HBA (because I accidently broke off a resistor). I've always actively cooled my 2nd HBA and again did full (successful) burn-in testing on this new HBA.

- I've checked the SMART values of all my HDDs and they are all 100% healthy

- In Windows, using Hard Disk Sentinel, I've performed an "Extended Self Test" of every HDD simultaneously, while running Prime95 (blended mode with AVX) at the same time. This took about 19 hours and was also 100% successful. This is an extreme test which validates

- RAM stability

- CPU stability

- PSU stability

- HDD health

- I've create a logging script for scrub and re-ran scrub on 7/8 December and it again found 4 errors on 2 different HDDs. There were no reboots this time.

- On 8 December, a couple hours later, I ran another scrub and it again found 1 error on yet another HDD. Again no reboots.

- On 10 December, I underclocked my RAM and ran scrub twice (the first time to clear out "old" checksum errors and the second time to confirm if the issue still occurs with underclocked RAM). And I still have checksum errors with the underclocked memory.

- On 11 December, I overclocked my RAM and confirmed that memory ECC errors get written to /var/log/messages (now I'm quite convinced my RAM is not the problem)

- On 12 December, I've been writing to the Optane-pool for the whole day (The Optane disk is not connected through the HBA). I did this by copying Windows and Program Files folders to it, copying and extracting a 95GB rar file, etc. So a mix of all kinds of files. The scrub afterwards didn't find any errors, so it seems like the issue only occurs for devices connected to the HBA.

- On 13 December, I've forced "Power Supply Idle Control" to "Typical Current Idle" in the BIOS. Although I'm unable to reproduce the reboots, I've read some reports about my motherboard that this setting sometimes helps against unexpected reboots. (it didn't help, as reboots still occur)

- On 13/14 December I've completely removed my Optane device from the system and restored the "PCIe x16/2x8/4x4 Switch" setting in the BIOS from "2x8" back to the default "1x16" (this enables 16 PCIe lanes for my HBA instead of sharing the lanes with the Optane device). I ran the scrub again twice and had many checksum errors and again 2 reboots!

- On 15/16 December I've upgraded my BIOS, upgraded the IPMI firmware, upgraded from TrueNAS12 to TrueNAS12U1 and I've changed sync=always from my pool to sync=standard (I forgot this when removing my Optane). I again ran 2 scrubs and during the second run I "only" had 1 checksum error and no reboots. So I suspect that sync=always "slightly increases" the problem. This indicates that it is "load-related" I think.

- On 17 December I've run a night of solnet-array-test, not enough to complete the entire test (I don't think it even reached the seek test already), but I wanted to try something else first. No reboots occurred.

- On 18 December I've removed my HBA from the computer for a visual inspection and re-attached all cables to both HBA and HDDs. Also I've added a screamingly loud 120mm Delta server fan and directed it to the HBA / chipset / NIC. If it's something temperature related, then this should cover that completely

Although it seems like there were less errors than I expected, there were still errors. So perhaps it decreased the problem a little, but it didn't solve it...

- On 20 December I've attached my old desktops 850W PSU. I've run a scrub and it again rebooted and found errors (still have to do the second run though). But the reboot already proves that the PSU isn't the problem.

- On 21 December I've replaced the SFF-8087 cable with one from old RAID controller. Maybe that cable was even worse than the one that was in, because, after another pair of reboots, the scrub, for the first time, started listing issues in the READ (3) and WRITE (1,17K) column of one my HDDs and it marked it as faulty now

- The scrub checksum errors

- are easy to reproduce. So something is certainly VERY wrong with my NAS.

- seem to accumulate over time. I think this means that

- The errors are not caused by scrub incorrectly detecting (and fixing) (non-)issues

- The errors are actually being written to my HDDs and scrub correctly detects and fixes them (for now at least)

- As the checksum errors occur on all HDDs randomly and as all HDDs successfully pass the extended self test, it seems like the issues are not caused by the HDDs themselves, but by something "before" the HDDs

- Although the HBA was very properly burned in, I'm starting to suspect it more and more...

- I first suspected the RAM, as it was the last hardware-change to my server. But the RAM passes all possible tests that I can throw at it and I've also validated that it properly reports memory errors in TrueNAS if they occur. So it would very much surprise me if it is the RAM... (but as always with computers, it's not impossible)

- Could be something with the motherboard, but this would be extremely hard and expensive to test. I'll save this for last...

- As even completely replacing the PSU, by an even heavier type, doesn't solve the issue, I doubt it is PSU related.

- Could be because of my upgrade from FreeNAS11 to TrueNAS12. Also this one is hard to test, as I have already upgraded my pools flags after TrueNAS12 ran a few weeks problem-free (I know now, that was too soon). Also it does really seem like an hardware issue. But perhaps I'll need to test this someday anyway.

- It seems like sync=always without a SLOG device might increase amount of checksum errors. I'm now doing all my tests with a combination of sync=always and sync=standard.

- The reboots seem harder to reproduce, not sure what exactly triggers them. I haven't been able to trigger them without a scrub.

- Perhaps the load of the scrub itself triggers them

- Probably the same hardware problem that is causing the checksum errors on my HDDs, also caused those reboots

- It seems like sync=always without a SLOG device might increase the chance for a reboot. I'm now doing all my tests with a combination of sync=always and sync=standard.

- Further log files analysis

- I've had 15 unexpected reboots so far.

- They always come in pairs with 4 minutes 30-35 seconds in between (7x 2 unexpected reboot-pairs)

- As a test, I've manually rebooted the server after the first unexpected reboot. It didn't have a 2nd unexpected reboot after 4m30s.

- It doesn't matter if the pool is locked or unlocked, the 2nd unexpected reboot always happens +-4m30s after the first unexpected reboot. Fyi: I only have 1 pool on my HBA. So when the pool is locked, it can't start the scrub in the background or do much with it at all.

- The scrubs don't seem to restart completely after crash / reboot (as I first though). It seems like they "jump back" to some specific "resume-points". For example, mine jumped from 36% to 33% and later, during that same scrub, from 69% to 54% and later again from 59% to 54%

- Run solnet-array-test some more to see if I can make it trigger a reboot without a scrub. But maybe it won't trigger anything since it's read-only or a different type of load.

- I've bought below heatsink for the HBA. It could take a month before it gets here though (ali express).

- Replace the HBA (I'll need to purchase a new one to try this). But I have no clue where to purchase this HBA. As this is already my 3rd HBA in less than a year, I've lost all faith in these LSI IT mode HBAs...

Very strange, as I consider my good old LSI MegaRAID 9260-8i controller (which was in my old desktop running RAID5), as the best and most reliable piece of hardware that I ever owned... If anyone has any advice on where to purchase a 9207-8i or 9217-8i, please let me know (I'd like to try the more recent generation now)

- Replace the RAM (I can temporary put my new desktops non-ECC memory in it for testing)

- Replace the motherboard (I'll need to purchase a new one to try this)

- Re-install FreeNAS 11 instead of TrueNAS 12 (I've completed running extended self tests on all my external offline-backup HDDs to prepare for this. I hope it will not need to come this far)

Last edited:

Mastakilla

Patron

- Joined

- Jul 18, 2019

- Messages

- 203

Here are my findings so far (updates compared the previous overview in orange):

The catastrophy

The catastrophy

- 28 November at 8h17, 8h21, 9h14, 9h18, 10h39 and 10h44 my TrueNAS server rebooted without properly shutting down

- 28 November from 4h00 till 29 November 1h30 a scrub ran which detected and fixed 8 errors on 5 different HDDs

- Reboots

- First time ever, after about a year of uptime,

that my server has an unexpected reboot (6 actually in a single day!)

- "Recently" following changes were made to the server

- Intel Optane disk was added as SLOG about 2 months ago (before the last problem-less scrub)

- 32GB ECC RAM was replaced by 64GB ECC RAM about 1 month ago (after the last problem-less scrub)

- FreeNAS11 was upgraded to TrueNAS12 (after the last problem-less scrub)

- Weird, unexpected, unlogged reboots often happen because of

- extreme RAM instability

- cheap / broken PSU

- maybe a broken motherboard or HBA?

- a bug in TrueNAS?

- First time ever, after about a year of uptime,

- Scrub

- My scrubs are scheduled monthly

- I noticed that scrub checksum errors are cleared on reboot. (Is this a bug?)

- I noticed that sometimes TrueNAS tries to send emails on boot before the network interfaces are online (so maybe some scrub error mails didn't get through)

- Scrub automatically restarts / resumes after a reboot / crash during a scrub, but it requires the encrypted pool to be unlocked first.

- The "old" 32GB ECC RAM was tested with Memtest86 / Linux / TrueNAS12 and confirmed that

- it was 100% stable

- the platform fully supported ECC error reporting to the OS (both corrected and uncorrected errors) - on FreeNAS 11 my platform doesn't support ECC Error reporting

- The "new" 64GB ECC RAM was also tested with Memtest86 (100% stable and reporting both corrected / uncorrected ECC errors) and in TrueNAS12 (corrected ECC errors, uncorrected probably too, but I didn't test that long and they occur a lot less frequently).

- I've done all recommended burn-in tests that I could find (solnet-array-test and badblocks)

- I've checked the SMART values of all my HDDs and they are all 100% healthy

- In Windows, using Hard Disk Sentinel, I've performed an "Extended Self Test" of every HDD simultaneously, while running Prime95 (blended mode with AVX) at the same time. This took about 19 hours and was also 100% successful. This is an extreme test which validates

- RAM stability

- CPU stability

- PSU stability

- HDD health

- I've create a logging script for scrub and re-ran scrub on 7/8 December and it again found 4 errors on 2 different HDDs. There were no reboots this time.

- On 8 December, a couple hours later, I ran another scrub and it again found 1 error on yet another HDD. Again no reboots.

- On 10 December, I underclocked my RAM and ran scrub twice (the first time to clear out "old" checksum errors and the second time to confirm if the issue still occurs with underclocked RAM). And I still have checksum errors with the underclocked memory.

- On 11 December, I overclocked my RAM and confirmed that memory ECC errors get written to /var/log/messages (now I'm quite convinced my RAM is not the problem)

- On 12 December, I've been writing to the Optane-pool for the whole day (The Optane disk is not connected through the HBA). I did this by copying Windows and Program Files folders to it, copying and extracting a 95GB rar file, etc. So a mix of all kinds of files. The scrub afterwards didn't find any errors, so it seems like the issue only occurs for devices connected to the HBA.

- On 13 December, I've forced "Power Supply Idle Control" to "Typical Current Idle" in the BIOS. Although I'm unable to reproduce the reboots, I've read some reports about my motherboard that this setting sometimes helps against unexpected reboots. (it didn't help, as reboots still occur)

- On 13/14 December I've completely removed my Optane device from the system and restored the "PCIe x16/2x8/4x4 Switch" setting in the BIOS from "2x8" back to the default "1x16" (this enables 16 PCIe lanes for my HBA instead of sharing the lanes with the Optane device). I ran the scrub again twice and had many checksum errors and again 2 reboots!

- On 15/16 December I've upgraded my BIOS, upgraded the IPMI firmware, upgraded from TrueNAS12 to TrueNAS12U1 and I've changed sync=always from my pool to sync=standard (I forgot this when removing my Optane). I again ran 2 scrubs and during the second run I "only" had 1 checksum error and no reboots. So I suspect that sync=always "slightly increases" the problem. This indicates that it is "load-related" I think.

- On 17 December I've run a night of solnet-array-test, not enough to complete the entire test (I don't think it even reached the seek test already), but I wanted to try something else first. No reboots occurred.

- On 18 December I've removed my HBA from the computer for a visual inspection and re-attached all cables to both HBA and HDDs. Also I've added a screamingly loud 120mm Delta server fan and directed it to the HBA / chipset / NIC. If it's something temperature related, then this should cover that completely

Although it seems like there were less errors than I expected, there were still errors. So perhaps it decreased the problem a little, but it didn't solve it...

- On 20 December I've attached my old desktops 850W PSU. I've run a scrub and it again rebooted and found errors (still have to do the second run though). But the reboot already proves that the PSU isn't the problem.

- On 21 December I've replaced the SFF-8087 cable with one from my old RAID controller. Maybe that cable was even worse than the one that was in, because, after another pair of reboots, the scrub, for the first time, started listing issues in the READ (3) and WRITE (1,17K) column of one my HDDs and it marked it as faulty now

- On 29-30-31 December I've replaced my Dell H310 HBA with a LSI 9207-8i HBA (on which I've put a CPU cooler, to make sure heat is not the issue). It automatically started resilvering my pool and managed to successfully complete this (fixing some checksum errors in the process). Still I find it weird that it did this from it self, no? But all of this didn't help, as scrub still finds data corruption and it still reboots from itself. So it is not the HBA that is broken

- The scrub checksum errors

- are easy to reproduce. So something is certainly VERY wrong with my NAS.

- seem to accumulate over time. I think this means that

- The errors are not caused by scrub incorrectly detecting (and fixing) (non-)issues

- The errors are actually being written to my HDDs and scrub correctly detects and fixes them (for now at least)

- As the checksum errors occur on all HDDs randomly and as all HDDs successfully pass the extended self test, it seems like the issues are not caused by the HDDs themselves, but by something "before" the HDDs

- It is not the HBA, as even replacing it, doesn't help

- I first suspected the RAM, as it was the last hardware-change to my server. But the RAM passes all possible tests that I can throw at it and I've also validated that it properly reports memory errors in TrueNAS, if they occur. So it would very much surprise me if it is the RAM... (but as always with computers, it's not impossible)

- Could be something with the motherboard, but this would be extremely hard and expensive to test. I'll save this for last...

- As even completely replacing the PSU, by an even heavier type, doesn't solve the issue, I doubt it is PSU related.

- Could be because of my upgrade from FreeNAS11 to TrueNAS12. Also this one is hard to test, as I have already upgraded my pools flags after TrueNAS12 ran a few weeks problem-free (I know now, that was too soon). Also it does really seem like an hardware issue. But perhaps I'll need to test this someday anyway.

- It seems like sync=always without a SLOG device might increase amount of checksum errors. I'm now doing all my tests with a combination of sync=always and sync=standard.

- The reboots seem harder to reproduce, not sure what exactly triggers them. I haven't been able to trigger them without a scrub.

- Perhaps the load of the scrub itself triggers them

- Probably the same hardware problem that is causing the checksum errors on my HDDs, also caused those reboots

- It seems like sync=always without a SLOG device might increase the chance for a reboot. I'm now doing all my tests with a combination of sync=always and sync=standard.

- Further log files analysis

- I've had 15 unexpected reboots so far.

- They always come in pairs with 4 minutes 30-35 seconds in between (7x 2 unexpected reboot-pairs)

- As a test, I've manually rebooted the server after the first unexpected reboot. It didn't have a 2nd unexpected reboot after 4m30s.

So it seems like I can prevent the 2nd reboot from a "reboot-pair" from happening, by manually rebooting... This seems like an indication that the reboots are software related!

- It doesn't matter if the pool is locked or unlocked, the 2nd unexpected reboot always happens +-4m30s after the first unexpected reboot. Fyi: I only have 1 pool on my HBA. So when the pool is locked, it can't start the scrub in the background or do much with it at all.

- The scrubs don't seem to restart completely after crash / reboot (as I first though). It seems like they "jump back" to some specific "resume-points". For example, mine jumped from 36% to 33% and later, during that same scrub, from 69% to 54% and later again from 59% to 54%

- I'm going to try to put the HBA in a different PCI-e slot

- Replace the RAM (I can temporary put my new desktops non-ECC memory in it for testing)

- Replace the CPU (I could temporarily put in my desktops AMD 3900X)

- Run solnet-array-test some more to see if I can make it trigger a reboot without a scrub. But maybe it won't trigger anything since it's read-only or a different type of load.

- Replace the motherboard (I'll need to purchase a new one to try this)

- Re-install FreeNAS 11 instead of TrueNAS 12 (I've completed running extended self tests on all my external offline-backup HDDs to prepare for this. I hope it will not need to come this far)

Mastakilla

Patron

- Joined

- Jul 18, 2019

- Messages

- 203

After more than 1 month of endless (and effectless) investigations, I think I'm finally on to something...

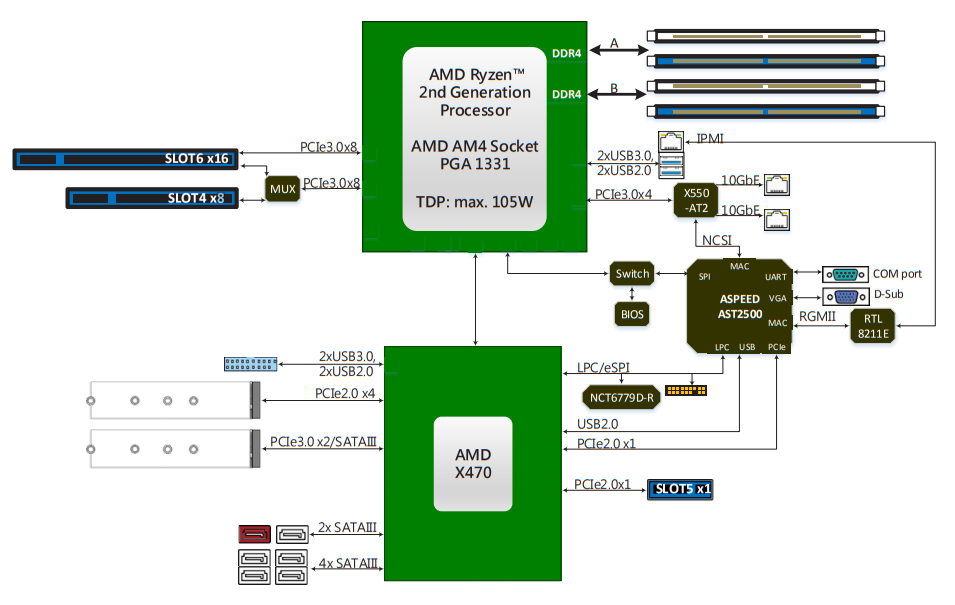

After switching from PCI-e slot 6 to PCI-e slot 4, there are noticeably less CKSUM errors during scrubs (One scrub even without any errors and I also didn't encounter a reboot, but that could be coincidence).

When looking in the manual of my motherboard (see the above drawing), I don't really see how there could be a difference between the 2 PCI-e slots. But the difference is definitely there... Both PCI-e slots are delivered directly by the CPU (not by the chipset, like PCI-e slot 5). The difference is there, even when the BIOS is configured in the "8x 8x" config for both situations (meaning that the "MUX" is used, even when only using slot 6). So I think the next thing I'll try is replace the CPU... If that doesn't help, then I think it's time to contact Asrock Rack...

After switching from PCI-e slot 6 to PCI-e slot 4, there are noticeably less CKSUM errors during scrubs (One scrub even without any errors and I also didn't encounter a reboot, but that could be coincidence).

When looking in the manual of my motherboard (see the above drawing), I don't really see how there could be a difference between the 2 PCI-e slots. But the difference is definitely there... Both PCI-e slots are delivered directly by the CPU (not by the chipset, like PCI-e slot 5). The difference is there, even when the BIOS is configured in the "8x 8x" config for both situations (meaning that the "MUX" is used, even when only using slot 6). So I think the next thing I'll try is replace the CPU... If that doesn't help, then I think it's time to contact Asrock Rack...