Amsoil_Jim (Contributor, joined Feb 22, 2016, 175 messages)

So here is where I'm at: my original pool consists of 6 10TB drives in a RAIDZ1 configuration. I had asked on here what the best way to expand my storage was, and was advised that larger drives should be configured in RAIDZ2 at minimum, and that it was best to make a new pool using RAIDZ2, transfer the data from the old pool to the new pool, then destroy the old pool and add its disks to the new pool as a second vdev.

I have purchased 6 14TB drives and configured them in a RAIDZ2 for a new pool.

I have automatic snapshots set up.

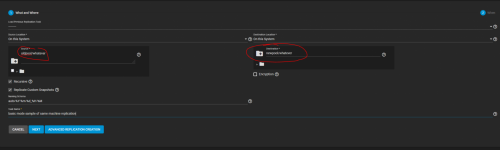

I made a new snapshot of the old pool and sent it to the new pool using zfs send | recv.

The data copied to the new pool, but it took about 36 hours, so the original data has changed in the meantime. When I made a new snapshot and attempted to send it, it would not send.

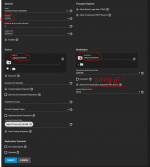

zfs send -RI Media@migrate02-26 Media@migrate02-28new | zfs recv -F Tank

cannot receive new filesystem stream: destination has snapshots (eg. Tank@auto-2022-02-25_00-00)

must destroy them to overwrite it

warning: cannot send 'Media@auto-2022-02-12_00-00': signal received

warning: cannot send 'Media@auto-2022-02-13_00-00': Broken pipe

warning: cannot send 'Media@auto-2022-02-14_00-00': Broken pipe

warning: cannot send 'Media@auto-2022-02-15_00-00': Broken pipe

warning: cannot send 'Media@auto-2022-02-16_00-00': Broken pipe

warning: cannot send 'Media@auto-2022-02-17_00-00': Broken pipe

warning: cannot send 'Media@auto-2022-02-18_00-00': Broken pipe

warning: cannot send 'Media@auto-2022-02-19_00-00': Broken pipe

warning: cannot send 'Media@auto-2022-02-20_00-00': Broken pipe

warning: cannot send 'Media@auto-2022-02-21_00-00': Broken pipe

warning: cannot send 'Media@auto-2022-02-22_00-00': Broken pipe

warning: cannot send 'Media@auto-2022-02-23_00-00': Broken pipe

warning: cannot send 'Media@auto-2022-02-24_00-00': Broken pipe

warning: cannot send 'Media@auto-2022-02-25_00-00': Broken pipe

warning: cannot send 'Media@auto-2022-02-26_00-00': Broken pipe

warning: cannot send 'Media@auto-2022-02-27_00-00': Broken pipe

warning: cannot send 'Media@auto-2022-02-28_00-00': Broken pipe

warning: cannot send 'Media@migrate02-28new': Broken pipe

Any help with this would be appreciated, I have been trying to find a guide on this but can't seem to find what I'm looking for.
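One thing that may help narrow this down: an incremental send only applies if the destination already holds the same base snapshot as the source, and snapshots that merely share a name (for example an auto snapshot taken separately on each pool) are not the same snapshot. A minimal sketch for checking this is below. It compares the `guid` property of the snapshots on both sides. The function name `common_by_guid` and the listing files are hypothetical and only for illustration; nothing here sends, receives, or destroys anything.

```shell
# Hypothetical helper: list snapshots that exist on BOTH pools, matched by guid.
# Each argument is a file holding the output of, for example:
#   zfs list -H -t snapshot -o name,guid -d 1 Media > src.txt
#   zfs list -H -t snapshot -o name,guid -d 1 Tank  > dst.txt
# (-H gives tab-separated output without a header line.)
common_by_guid() {
    src_list=$1   # listing taken from the source pool
    dst_list=$2   # listing taken from the destination pool
    awk -F '\t' '
        # First file read is the destination: remember each guid and its name.
        FILENAME == ARGV[1] { dst_name[$2] = $1; next }
        # Second file is the source: print only snapshots whose guid was seen.
        ($2 in dst_name)    { print $1 " -> " dst_name[$2] }
    ' "$dst_list" "$src_list"
}
```

If Media@migrate02-26 does not show up in the output, the new pool does not have the base snapshot that the incremental stream starts from, which would be worth knowing before trying the send again.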

Edit: What I'm looking for is advice on the correct way to move the data from the old pool to the new pool and then make the new pool take the place of the old pool so that everything works, including all jails and plugins. For example, can you change the name of the new pool to the name of the old pool after everything is transferred and the old pool is exported/destroyed?
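On the renaming part: at the plain ZFS level, a pool can be given a new name by exporting it and importing it under that name, provided the name is free (so the old pool would have to be exported or destroyed first). The sketch below only prints the two commands rather than running them. The function name `plan_pool_rename` is hypothetical, and it is an assumption that Tank should end up being called Media. It also does not cover whatever the TrueNAS web UI needs in order to track the renamed pool, which may require doing the export and final import from the UI.

```shell
# Hypothetical dry-run helper: print (do not run) the rename commands.
plan_pool_rename() {
    current_name=$1   # pool that now holds the migrated data, e.g. Tank
    target_name=$2    # name it should take over, e.g. Media (must be unused)
    echo "zpool export $current_name"
    # 'zpool import <pool> <newpool>' imports the pool under a new name.
    echo "zpool import $current_name $target_name"
}
```

Calling `plan_pool_rename Tank Media` prints the export line followed by the import line, so the plan can be reviewed before anything is typed for real.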
