thirdgen89gta


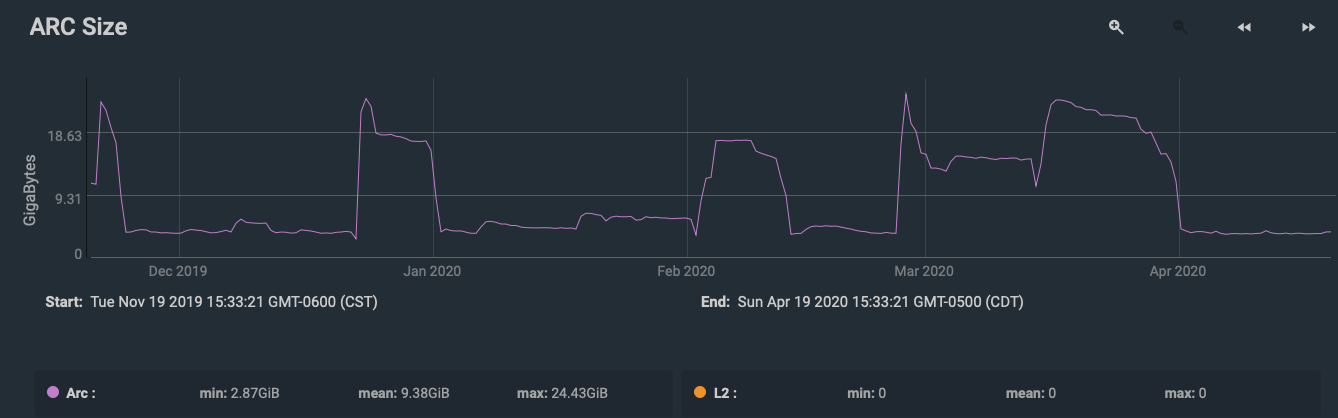

I know that FreeNAS is built to use all available memory, so I'm not concerned that every bit of it is in use by something. What I'm seeing, though, is that the ARC starts out large and then shrinks as the month goes on, while memory usage in the Services category grows to what seems like an unreasonable amount.

Performance-wise, the NAS is fine. Plex is responsive, file transfers over any protocol run near maximum line rate (1GigE), and there are no stability issues. The logs show none of the usual error messages from a crashing service.

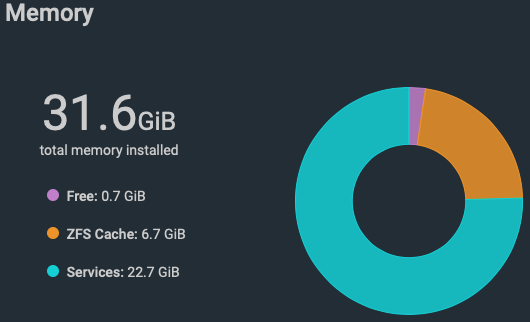

I seem to be having an issue with FreeNAS where, after a reboot, things start off well and the ARC fills up to about 18-23 GB. Then, over time, the Services category seems to take more and more RAM, leaving less available for the ARC. Eventually I end up with something like below, where out of 32 GB only about 7 GB is used for the ARC and the rest gets swallowed by the general "Services" category.

Below, you can see the ZFS usage over time. The ARC spikes after a reboot, holds for a few days, then drops like a stone and stays at around 7 GB.

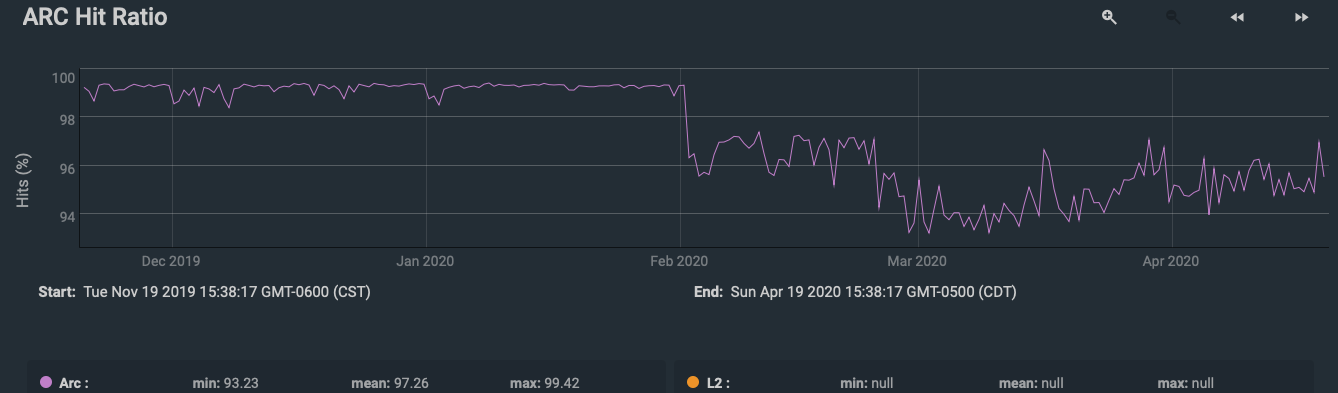

Even so, my ARC hit rates remain high despite the drop in total ARC size, consistently above 90%.
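For anyone who wants to check the same numbers from a shell instead of the GUI graphs, here is a minimal Python sketch. It is my own assumption of a reasonable approach, not how the FreeNAS reporting pages compute anything. It parses the `name: value` lines printed by FreeBSD's `sysctl kstat.zfs.misc.arcstats` and reports the current ARC size, its ceiling (`c_max`), and the hit rate as hits / (hits + misses). The function names `parse_arcstats` and `arc_summary` and the sample numbers are hypothetical. Note that these counters accumulate since boot, so the hit rate is a lifetime average rather than a recent one.

```python
"""Summarize ZFS ARC statistics from FreeBSD `sysctl kstat.zfs.misc.arcstats` output.

Assumption: the input is the plain 'name: value' text that FreeBSD's sysctl
prints. On a live box it could be captured with
subprocess.run(["sysctl", "kstat.zfs.misc.arcstats"], capture_output=True, text=True).stdout
"""

PREFIX = "kstat.zfs.misc.arcstats."
GIB = 1024 ** 3


def parse_arcstats(text: str) -> dict[str, int]:
    """Turn 'kstat.zfs.misc.arcstats.<name>: <int>' lines into {name: int}."""
    stats: dict[str, int] = {}
    for line in text.splitlines():
        name, sep, value = line.partition(":")
        if not sep or not name.startswith(PREFIX):
            continue  # skip blank or unrelated lines
        try:
            stats[name[len(PREFIX):]] = int(value.strip())
        except ValueError:
            continue  # ignore any non-integer fields
    return stats


def arc_summary(stats: dict[str, int]) -> dict[str, float]:
    """Current ARC size, its ceiling (c_max), and the cumulative hit rate."""
    hits, misses = stats["hits"], stats["misses"]
    total = hits + misses
    return {
        "size_gib": stats["size"] / GIB,
        "c_max_gib": stats["c_max"] / GIB,
        # Hit rate = hits / (hits + misses), counted since boot.
        "hit_rate": hits / total if total else 0.0,
    }


if __name__ == "__main__":
    # Hypothetical sample values, not taken from my system.
    sample = (
        "kstat.zfs.misc.arcstats.hits: 900\n"
        "kstat.zfs.misc.arcstats.misses: 100\n"
        "kstat.zfs.misc.arcstats.size: 7516192768\n"
        "kstat.zfs.misc.arcstats.c_max: 32212254720\n"
    )
    print(arc_summary(parse_arcstats(sample)))
```

Sampling this periodically (e.g. from cron) would show whether `size` is being squeezed well below `c_max` while the hit rate stays high.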
