depasseg

Since the update in mid-October 2015, I've been experiencing major issues with replication. I've finally spent some time testing and I've confirmed that replication is broken.

The bottom line is that after a replication run, the destination datasets somehow get unmounted. This causes the reporting collector to fail (collectd & statvfs console errors) and "replication failed" messages to be emailed. The replication itself does appear to complete successfully, but these secondary effects are very bothersome. Furthermore, I've found that the replication issue also affects the creation of jails: if a replication runs while the template is being downloaded and the jail is being created, the source jail dataset disappears for a second and causes the "template not found" message. Disabling replication while creating the jail is a workaround that seems to work. Of course, once the jail is created and replication is re-enabled, the failure emails continue. And once jail snapshots are created, they cannot be deleted. Oh, and there's the issue that one must use an encryption cipher to replicate (the Disabled setting no longer works).

So far I've found that the following bugs all relate to these replication issues:

Bug #5293: Snapshot & Replication: backup pool on the Remote ZFS Volume/Dataset is empty (total loss of data).

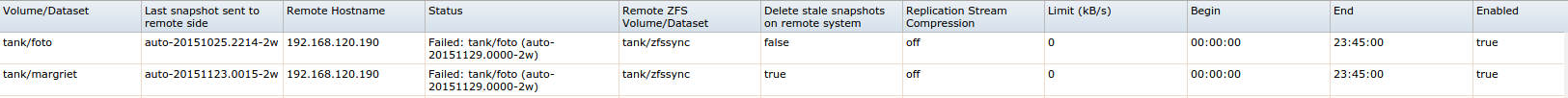

Bug #12143: Replication sends incorrect "fail" emails and doesn't update last snapshot sent in GUI

Bug #12252: "Cannot destroy <long snapshot name>: snapshot has dependent clones use '-R' to destroy the following datasets"

Bug #12379: Replication fails with cipher set to Disabled
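For anyone hitting the "dependent clones" error from Bug #12252, here is a rough sketch of how one might find which datasets are clones of a given snapshot. It assumes Python 3.7+ is available and relies on the tab-separated output of `zfs get -H -r -o name,value origin <pool>`. The helper names and the dataset names in the usage note are hypothetical, made up for illustration only.

```python
import subprocess


def parse_origins(output):
    """Map clone name -> origin snapshot from `zfs get -H -o name,value origin` output."""
    clones = {}
    for line in output.splitlines():
        if not line.strip():
            continue
        name, origin = line.split("\t")
        # Datasets that are not clones report "-" as their origin.
        if origin != "-":
            clones[name] = origin
    return clones


def clones_of(snapshot, clones):
    """Return the sorted names of datasets cloned from the given snapshot."""
    return sorted(name for name, origin in clones.items() if origin == snapshot)


def list_origins(pool):
    """Ask zfs for the origin property of everything under the pool (needs zfs installed)."""
    out = subprocess.run(
        ["zfs", "get", "-H", "-r", "-o", "name,value", "origin", pool],
        check=True, capture_output=True, text=True,
    ).stdout
    return parse_origins(out)
```

Something like `clones_of("TestSource/jails/template@clean", list_origins("TestSource"))` (hypothetical names) would then list the datasets that keep that snapshot from being destroyed.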

Steps to recreate

1. Create a pool called TestSource

2. Create a pool called TestDestination.

3. Create 3 datasets (A, B, C) in TestSource

4. Set up a snapshot task for TestSource (recursive, 5 minutes, keep for 2 hours)

5. Set up a replication task from TestSource to TestDestination on 127.0.0.1 using standard compression and fast encryption (disabled cipher won't work).

6. In 5 minutes TestDestination will show datasets A,B,C. In 10 minutes they will still show up in the storage pane, but if you 'ls /mnt/TestDestination' the results will be empty.

7. If you now reboot, the TestDestination A,B,C datasets get added to the "Partition" tab under Reporting and in the console you'll see "collectd 48717: statvfs(/mnt/TestDestination/A) failed: No such file or directory"

8. Try to create a jail. The source dataset will likely get stomped on during replication, causing the template to be lost. Disable replication, and then the jail can be created fine. Re-enable replication.

9. Once the jail is created and gets replicated, email "replication failed" alerts start appearing for the jail datasets.
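To confirm the symptom from step 6 without relying on 'ls', something along these lines could report datasets that ZFS still lists but that are no longer mounted. This is only a sketch: it assumes Python 3.7+ and the tab-separated output of `zfs list -H -r -o name,mounted,mountpoint <pool>`, and the function names are my own, not part of FreeNAS.

```python
import subprocess


def parse_zfs_list(output):
    """Parse `zfs list -H -o name,mounted,mountpoint` output into (name, mounted, mountpoint)."""
    rows = []
    for line in output.splitlines():
        if not line.strip():
            continue
        name, mounted, mountpoint = line.split("\t")
        rows.append((name, mounted == "yes", mountpoint))
    return rows


def unmounted_datasets(rows):
    """Return datasets that have a real mountpoint but are not currently mounted."""
    # "none" and "legacy" are not auto-mounted by ZFS; "-" is reported for volumes.
    skip = ("none", "legacy", "-")
    return [name for name, mounted, mountpoint in rows
            if not mounted and mountpoint not in skip]


def check_pool(pool):
    """Run zfs list for the pool and return its unmounted datasets (needs zfs installed)."""
    out = subprocess.run(
        ["zfs", "list", "-H", "-r", "-o", "name,mounted,mountpoint", pool],
        check=True, capture_output=True, text=True,
    ).stdout
    return unmounted_datasets(parse_zfs_list(out))
```

Running `check_pool("TestDestination")` about 10 minutes into the test should, if the behavior is what I described, return the A, B and C datasets.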
