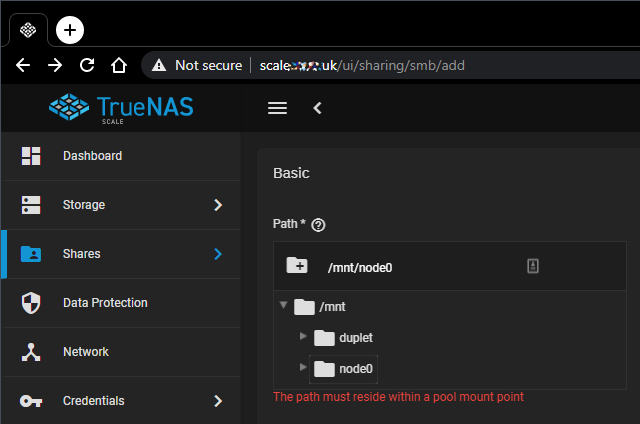

I just tried TrueNAS-SCALE-20.10-ALPHA and it confirmed my expectation: once again, there appears to be no support for any filesystem other than ZFS.

This has been frustrating and very limiting ever since the first version of FreeNAS I used. It is basically the only major disadvantage of the system, and it raises questions about an otherwise perfect solution. While highly inconvenient, the situation sort of made sense on FreeBSD, but IMO on a Debian-based OS it would be ridiculous to deny the existence of native filesystems and enforce ZFS as the only option for a storage server.

I understand that:

- ZFS is the main focus of the OS;

- upstream support for other filesystems in FreeBSD might be limited;

- licensing might be an issue;

- since TrueNAS strives to be a cross-platform GUI/API "framework", it gets much harder for devs to maintain feature sets in both the Unix and Linux flavors.

What I do not understand is:

- why do multi-pool setups, with filesystems suited to each use case, seem to be completely overlooked and unsupported?

(my goal, apart from having a redundant ZFS pool with snapshots and all the other perks, is to have separate non-redundant (probably single-disk) mounts that I can still share via the same ecosystem, while keeping additional hardware requirements and costs down)

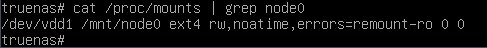

- do you think it is OK that I cannot even work around this in the CLI?

- will it always be this way? Now seems like the best time to discuss it, before SCALE grows into something hardcoded, inflexible and labor-intensive to modify.

Please, if you feel anything like this, share your thoughts.

Other opinions are, of course, welcome too.
