rienk.dejong (Cadet) | Joined: Mar 19, 2020 | Messages: 4

Hi all,

I've been having some network issues with our new FreeNAS server. I already posted in another forum to figure out the issue. Link to other tread:

forums.lawrencesystems.com

I think the root of the issues I've been having is in FreeNAS / FreeBSD, but I'm not sure and how I fixed is not future proof I think.

forums.lawrencesystems.com

I think the root of the issues I've been having is in FreeNAS / FreeBSD, but I'm not sure and how I fixed is not future proof I think.

Lets start with the hardware involved:

FreeNAS server:

To separate the traffic I configured separate VLANS for Office and SAN traffic.

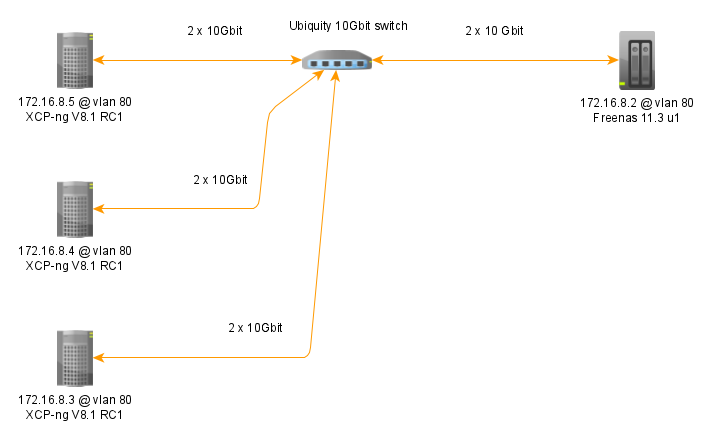

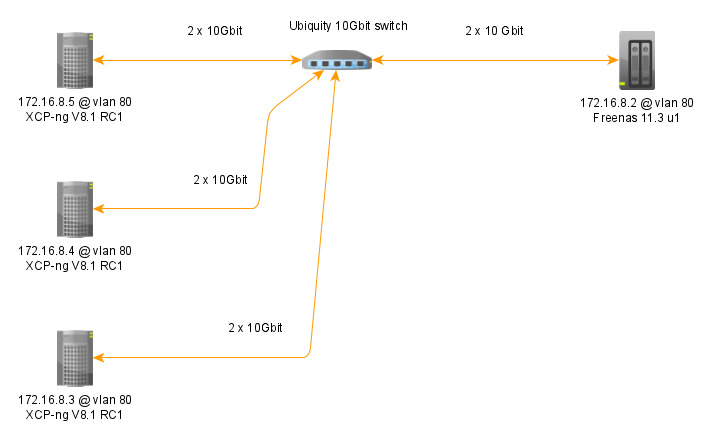

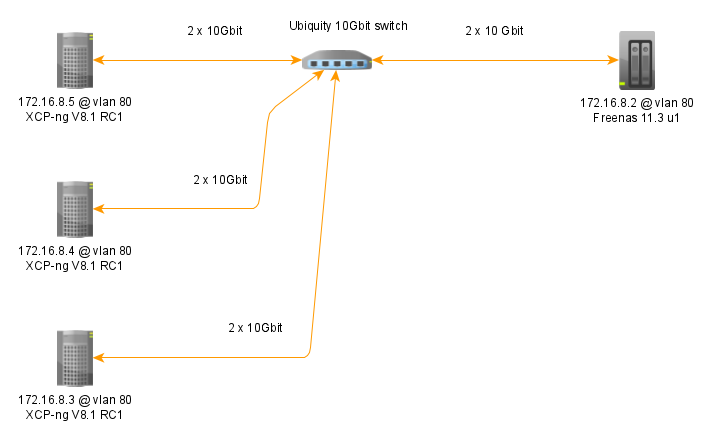

Below is a network diagram.

When I had everything initially configured as above with all 2 x 10Gbit connection configured as LACP lagg I had trouble pinging some hosts ( VM's and real machines)

For instance I could ping the following:

Eventually I found that disconnecting one of the 10Gbit ports of the FreeNAS box solved the problem. And if I reconnected that cable and pulled out the other I got a “Network is down” error.

With some google-ing I found some people that had similar problems but not my exact problem and with older versions of FreeNAS. But they all had to do with the driver of the card.

So I downloaded http://pkg.freebsd.org/FreeBSD:11:amd64/latest/All/intel-ixl-kmod-1.11.9.txz ,

copied the if_ixl_updated.ko to the /boot/modules folder and loaded it with a tunable.

It seems to work now, alt least after a reboot of the FreeNAS box.

The thing that changed is:

original output of dmesg | grep ixl:

ixl0: <Intel(R) Ethernet Connection 700 Series PF Driver, Version - 1.11.9-k>

output with the driver form pkg.freebsd.org dmesg | grep ixl:

ixl0: <Intel(R) Ethernet Connection 700 Series PF Driver, Version - 1.11.9>

My main question is, does anybody have the same experience with a similar setup?

And can anybody make sense of why the above driver change would solve my issue. Because I'm not 100% comfortable with just loading another driver that is not form the official FreeNAS repository.

Kind Regards,

Rienk

I've been having some network issues with our new FreeNAS server. I already posted in another forum to figure out the issue. Link to the other thread:

Freenas can't ping some hosts on storage vlan (forums.lawrencesystems.com)

> Hi all, I have a setup with a couple of XCP-ng servers and a FreeNAS server. All servers are connected to two Ubiquiti switches, a 1 Gbit switch and a 10 Gbit switch. The 1 Gbit switch is used for the management interface, and the 10 Gbit for a SAN and eventually also for office connections...

I think the root of the issues I've been having is in FreeNAS / FreeBSD, but I'm not sure, and I don't think the way I fixed it is future-proof.

Let's start with the hardware involved:

FreeNAS server:

- Mainboard: Supermicro H11SSL-I

- Processor: AMD EPYC™ Rome 7262

- Network card: AOC-STGF-i2S-O Intel X710 chip (2 x 10 Gbit SFP+)

- 128 GB RAM

- 2 x 240GB SSD for OS

- 10 x 4TB HDD + 4 x 480GB SSD for storage pool

XCP-ng servers:

- Mainboard: Supermicro H11SSL-I

- Processor: AMD EPYC™ Rome 7402P

- Network card: AOC-STGF-i2S-O Intel X710 chip (2 x 10 Gbit SFP+)

- 128 GB RAM

- 2 x 240GB SSD for OS

Switches:

- Ubiquiti UniFi Switch 16XG (16 x 10 Gbit)

  - Connected to servers with SFP+ DAC cables

- UniFi Switch 16 POE

To separate the traffic I configured separate VLANS for Office and SAN traffic.

Below is a network diagram.

When I initially had everything configured as above, with all the 2 x 10 Gbit connections configured as LACP laggs, I had trouble pinging some hosts (VMs and physical machines).

For instance, I could ping the following:

- 172.16.8.2 (FreeNAS) to 172.16.8.3 (XCP-ng)

- 172.16.8.2 (FreeNAS) to 172.16.8.4 (XCP-ng)

- 172.16.8.2 (FreeNAS) to 172.16.8.101 (Debian VM @ 172.16.8.5)

- 172.16.8.5 (XCP-ng) to 172.16.8.3 (XCP-ng)

- 172.16.8.5 (XCP-ng) to 172.16.8.4 (XCP-ng)

- 172.16.8.5 (XCP-ng) to 172.16.8.33 (Debian VM @ 172.16.8.3)

- 172.16.8.5 (XCP-ng) to 172.16.8.101 (Debian VM @ 172.16.8.5)

But I could not ping:

- 172.16.8.2 (FreeNAS) to 172.16.8.5 (XCP-ng)

- 172.16.8.2 (FreeNAS) to 172.16.8.33 (Debian VM @ 172.16.8.3)
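To repeat ping tests like these consistently (for example before and after swapping the driver), a small script can probe each address once and record which ones answer. This is a minimal sketch, assuming Python 3 and the system `ping` command are available on the host you run it from. Only `-c 1` (send one packet) is passed to `ping`, because the timeout flags differ between FreeBSD and Linux; the timeout is enforced on the Python side instead. The function names are hypothetical.

```python
import subprocess
from typing import Callable, Dict, Iterable


def ping_once(host: str, timeout_s: float = 5.0) -> bool:
    """Send one ICMP echo request with the system ping and report success."""
    try:
        result = subprocess.run(
            ["ping", "-c", "1", host],  # -c 1: a single packet (FreeBSD and Linux)
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
            timeout=timeout_s,  # enforced by Python, not by ping itself
        )
    except subprocess.TimeoutExpired:
        # No reply within timeout_s: treat the host as unreachable.
        return False
    return result.returncode == 0


def reachability(
    hosts: Iterable[str], probe: Callable[[str], bool] = ping_once
) -> Dict[str, bool]:
    """Probe every host once and map it to True (answered) or False."""
    return {host: probe(host) for host in hosts}
```

Run from the FreeNAS shell (assuming `python3` is available there), something like `reachability(["172.16.8.3", "172.16.8.4", "172.16.8.5", "172.16.8.33", "172.16.8.101"])` returns a dict mapping each address to `True` or `False`. The `probe` parameter is there so the bookkeeping can be tested without a network.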

Eventually I found that disconnecting one of the FreeNAS box's 10 Gbit ports solved the problem. If I reconnected that cable and pulled out the other one instead, I got a “Network is down” error.
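One possible explanation for "some hosts answer, others don't" on an LACP lagg, offered as an illustration and not a diagnosis: the lagg picks the outgoing member port per flow by hashing packet headers, so every packet to a given peer leaves on the same port. If one port (or the driver handling it) silently drops traffic, only the peers hashed onto that port become unreachable, and unplugging that port moves every flow onto the healthy one. The sketch below uses a deliberately simplified, hypothetical hash (XOR of the source and destination IPv4 addresses, modulo the number of ports) and documentation addresses from 192.0.2.0/24. FreeBSD's lagg(4) uses its own hash, and the switch makes an independent choice for return traffic, so this does not predict which of the hosts in the post failed.

```python
import ipaddress
from typing import Dict, List, Set


def member_port(src: str, dst: str, num_ports: int) -> int:
    """Choose a lagg member port for one src/dst IPv4 flow.

    Hypothetical, simplified hash: XOR of the two addresses modulo the
    number of ports. FreeBSD's lagg(4) hashes differently; this only shows
    that the choice is per flow and deterministic.
    """
    s = int(ipaddress.IPv4Address(src))
    d = int(ipaddress.IPv4Address(dst))
    return (s ^ d) % num_ports


def reachable(src: str, dsts: List[str], num_ports: int, broken: Set[int]) -> Dict[str, bool]:
    """Map each destination to whether its flow avoids the broken ports."""
    return {d: member_port(src, d, num_ports) not in broken for d in dsts}


if __name__ == "__main__":
    peers = ["192.0.2.11", "192.0.2.12", "192.0.2.13", "192.0.2.14"]
    # Two ports in the lagg, port 1 silently dropping traffic: only some peers answer.
    print(reachable("192.0.2.10", peers, num_ports=2, broken={1}))
    # {'192.0.2.11': False, '192.0.2.12': True, '192.0.2.13': False, '192.0.2.14': True}
    # Bad cable pulled, one healthy port left: every flow uses it and all peers answer.
    print(reachable("192.0.2.10", peers, num_ports=1, broken=set()))
    # Healthy cable pulled instead: the only remaining port is the broken one.
    print(reachable("192.0.2.10", peers, num_ports=1, broken={0}))
```

In this model the pattern in the post (partial reachability with both links, full reachability with one link, nothing with the other link) follows from a single misbehaving port; a driver update that fixes that port would remove the symptom.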

After some googling I found people with similar, but not identical, problems on older versions of FreeNAS. All of those turned out to be related to the network card's driver.

So I downloaded http://pkg.freebsd.org/FreeBSD:11:amd64/latest/All/intel-ixl-kmod-1.11.9.txz, copied `if_ixl_updated.ko` to the `/boot/modules` folder and loaded it with a tunable.

It seems to work now, at least after a reboot of the FreeNAS box.
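For reference, loading a module "with a tunable" on FreeBSD typically means a loader.conf(5) entry of the form `<name>_load="YES"`, where `<name>` is the `.ko` file name without its suffix. The helper below only spells out that mapping for the module from the package. `loader_tunable` is a hypothetical name, and the `"loader"` type is an assumption about how the FreeNAS Tunables form labels loader.conf entries.

```python
from pathlib import PurePosixPath
from typing import Dict


def loader_tunable(module_path: str) -> Dict[str, str]:
    """Describe the loader tunable that loads a kernel module at boot.

    Follows the loader.conf(5) convention <name>_load="YES", where <name>
    is the module file name without the .ko suffix.
    """
    name = PurePosixPath(module_path).stem  # ".../if_ixl_updated.ko" -> "if_ixl_updated"
    variable = f"{name}_load"
    return {
        "variable": variable,
        "value": "YES",
        "type": "loader",  # assumption: label used by the FreeNAS Tunables form
        "loader_conf_line": f'{variable}="YES"',
    }


print(loader_tunable("/boot/modules/if_ixl_updated.ko")["loader_conf_line"])
# prints: if_ixl_updated_load="YES"
```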

The only thing that changed is the driver version string.

Original output of `dmesg | grep ixl`:

`ixl0: <Intel(R) Ethernet Connection 700 Series PF Driver, Version - 1.11.9-k>`

Output of `dmesg | grep ixl` with the driver from pkg.freebsd.org:

`ixl0: <Intel(R) Ethernet Connection 700 Series PF Driver, Version - 1.11.9>`
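Both lines report version 1.11.9, so the visible difference is the `-k` suffix, which Intel's FreeBSD drivers conventionally use for the build shipped in the base kernel (treated here as an assumption). To confirm which build is active after a reboot, a small sketch can parse the `dmesg` line and check `kldstat` output, whose last column is the module file name, for the package's module. The function names are hypothetical.

```python
import re
from typing import Optional, Tuple

# Matches the end of an ixl attach line, e.g. "Version - 1.11.9-k>".
_VERSION_RE = re.compile(r"Version - ([0-9][0-9.]*)(-k)?>")


def ixl_driver_version(dmesg_line: str) -> Optional[Tuple[str, bool]]:
    """Return (version, is_in_kernel_build) from one `dmesg | grep ixl` line.

    A trailing "-k" is read as the in-kernel (base system) build; a bare
    version is read as the out-of-tree package build. None if no match.
    """
    match = _VERSION_RE.search(dmesg_line)
    if match is None:
        return None
    return match.group(1), match.group(2) is not None


def module_loaded(kldstat_output: str, module_file: str) -> bool:
    """True if `kldstat` lists module_file (file name is the last column)."""
    return any(line.split()[-1:] == [module_file] for line in kldstat_output.splitlines())
```

For the two lines quoted above, `ixl_driver_version` returns `("1.11.9", True)` and `("1.11.9", False)` respectively, and `module_loaded(kldstat_text, "if_ixl_updated.ko")` should be `True` when the package driver is loaded as a separate module.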

My main question is: has anybody had the same experience with a similar setup?

And can anybody make sense of why the driver change above would solve my issue? I'm not 100% comfortable loading a driver that doesn't come from the official FreeNAS repository.

Kind Regards,

Rienk