Good morning!

A couple of days ago I had a disk go bad in one of my pools. No big deal: I offlined the disk, shut down the FreeNAS server, replaced the disk, and booted back up. I then went into the pool and started the disk replacement as usual.

Unfortunately, something went wrong. The replace task hung for over a day, and when I checked the usage logs there was no activity on the new disk, so I assumed the task had stalled. I offlined the new disk and tried reseating it, but now I have two orphaned disks with "REPLACING" status, and the pool is still in a degraded state. I'm not sure how to fix this or how to proceed.

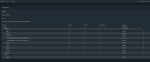

See the screenshot above for reference.
