ryanwclark

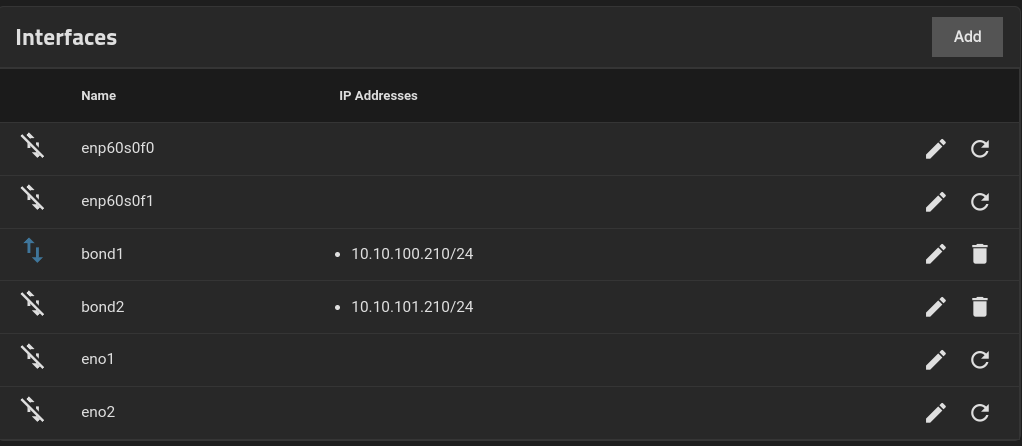

I'm facing an issue with one of my two network cards not working properly on my TrueNAS system (version 23.10.1, kernel 6.1.63-production+truenas). Below are the steps I've taken to diagnose the issue, along with the relevant outputs.

Hardware Information:

My system has two Intel Ethernet controllers:

- Two ports of Intel Ethernet Connection X722 for 10GBASE-T (rev 09)

- Two ports of Intel Corporation 82599ES 10-Gigabit SFI/SFP+ Network Connection (rev 01)

Issue:

The X722 interfaces (eno1/eno2) show no link detected, while the 82599ES interfaces (enp60s0f0/enp60s0f1) work fine with a 10Gb/s full-duplex link detected. Note: I have tested the physical cables and tried different ports on my router.

Diagnostic Steps and Outputs:

Ethernet Controllers Detected:

- lspci output for Ethernet controllers shows both the Intel Ethernet Connection X722 and the 82599ES controllers detected by the system.

ethtool Outputs:

- For eno1, ethtool shows "Link detected: no" with "Speed: Unknown" and "Duplex: Unknown". Auto-negotiation is off.
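To compare interfaces without scrolling through the full ethtool dump, something like the following could be used. This is only a rough sketch: `summarize_ethtool` is a hypothetical helper name (not part of ethtool), and it assumes the field labels match the output pasted in this post.

```shell
# Hypothetical helper: reads `ethtool <iface>` output on stdin and keeps
# only the lines that describe the negotiated link state.
summarize_ethtool() {
    # The anchor on leading whitespace skips "Advertised auto-negotiation:"
    # and similar lines that merely contain one of the labels.
    grep -E '^[[:space:]]*(Speed|Duplex|Auto-negotiation|Link detected):' \
        | sed -E 's/^[[:space:]]+//'
}

# Example usage on a live system (interface names taken from this post):
#   for i in eno1 eno2 enp60s0f0 enp60s0f1; do
#       echo "== $i"; ethtool "$i" | summarize_ethtool
#   done
```

Run against eno1 and enp60s0f0, this should reduce each dump to four lines, which makes the "Link detected" difference easy to see side by side.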

Detailed Device Information (lspci -vvv -k):

- The lspci output for both devices shows detailed configuration and capabilities. The device using the i40e driver is the one experiencing issues (eno1 and eno2).
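The driver binding and carrier state can also be cross-checked from sysfs rather than from lspci. The sketch below assumes the standard `/sys/class/net/<iface>/` layout (`device/driver`, `operstate`, `carrier`); `iface_summary` is a hypothetical helper name, and the optional second argument exists only so the function can be pointed at a different directory tree.

```shell
# Hypothetical helper: prints driver, operstate and carrier for one interface.
# $1 = interface name, $2 = sysfs root (assumed default: /sys/class/net)
iface_summary() {
    local iface="$1" root="${2:-/sys/class/net}"
    local driver oper carrier

    # device/driver is a symlink whose last path component is the driver name.
    if driver="$(readlink "$root/$iface/device/driver" 2>/dev/null)"; then
        driver="${driver##*/}"
    else
        driver="unknown"
    fi

    # Reading carrier can fail while the interface is administratively down,
    # so fall back to "unknown" instead of aborting.
    oper="$(cat "$root/$iface/operstate" 2>/dev/null || echo unknown)"
    carrier="$(cat "$root/$iface/carrier" 2>/dev/null || echo unknown)"

    printf '%s driver=%s operstate=%s carrier=%s\n' "$iface" "$driver" "$oper" "$carrier"
}

# Example usage on a live system:
#   for i in eno1 eno2 enp60s0f0 enp60s0f1; do iface_summary "$i"; done
```

A carrier value of 0 on eno1/eno2 alongside 1 on the enp60s0f* interfaces would agree with what ethtool reports above.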

Kernel Messages (dmesg):

- The dmesg output filtered for eth0, eno1, i40e, eth1, and eno2 shows various operations, including renaming interfaces from eth0 to eno1 and eth1 to eno2, attempts to enter promiscuous mode, and issues with MAC addresses being already in use.
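The bonding messages are the part of the dmesg output that most directly shows the missing link, so a small filter like this one might help isolate them. It is a sketch that assumes the kernel message wording seen in the log in this post; `down_link_slaves` is a hypothetical helper name.

```shell
# Hypothetical helper: reads dmesg text on stdin and prints "<count> <iface>"
# for every bond member that was added while its link was down.
down_link_slaves() {
    grep -F 'Enslaving as a backup interface with a down link' \
        | sed -E 's/.*\(slave ([^)]+)\).*/\1/' \
        | sort | uniq -c | awk '{print $1, $2}'
}

# Example usage on a live system:
#   dmesg | down_link_slaves
```

With the log in this post, both eno1 and eno2 would be listed, since each joined bond2 with a down link more than once.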

Questions:

- Given the ethtool and lspci outputs, why might eno1 not be detecting a link, while enp60s0f0 operates normally?

- Could the issue be related to the i40e driver or a specific configuration required for the Ethernet Connection X722 controller?

- Are there any known compatibility issues with the kernel version 6.1.63-production+truenas and the network cards in use?

- What steps can I take to further diagnose and resolve the link detection issue on eno1/eno2?

Outputs

$lspci | grep Ethernet

1a:00.0 Ethernet controller: Intel Corporation Ethernet Connection X722 for 10GBASE-T (rev 09)

1a:00.1 Ethernet controller: Intel Corporation Ethernet Connection X722 for 10GBASE-T (rev 09)

3c:00.0 Ethernet controller: Intel Corporation 82599ES 10-Gigabit SFI/SFP+ Network Connection (rev 01)

3c:00.1 Ethernet controller: Intel Corporation 82599ES 10-Gigabit SFI/SFP+ Network Connection (rev 01)

$ethtool eno1

Settings for eno1:

Supported ports: [ ]

Supported link modes: 1000baseT/Full

10000baseT/Full

1000baseKX/Full

10000baseKR/Full

Supported pause frame use: Symmetric Receive-only

Supports auto-negotiation: Yes

Supported FEC modes: Not reported

Advertised link modes: 1000baseT/Full

10000baseT/Full

1000baseKX/Full

10000baseKR/Full

Advertised pause frame use: No

Advertised auto-negotiation: Yes

Advertised FEC modes: Not reported

Speed: Unknown!

Duplex: Unknown! (255)

Auto-negotiation: off

Port: Other

PHYAD: 0

Transceiver: internal

Supports Wake-on: g

Wake-on: g

Current message level: 0x00000007 (7)

drv probe link

Link detected: no

$ethtool enp60s0f0

Settings for enp60s0f0:

Supported ports: [ FIBRE ]

Supported link modes: 10000baseT/Full

Supported pause frame use: Symmetric

Supports auto-negotiation: No

Supported FEC modes: Not reported

Advertised link modes: 10000baseT/Full

Advertised pause frame use: Symmetric

Advertised auto-negotiation: No

Advertised FEC modes: Not reported

Speed: 10000Mb/s

Duplex: Full

Auto-negotiation: off

Port: Direct Attach Copper

PHYAD: 0

Transceiver: internal

Supports Wake-on: d

Wake-on: d

Current message level: 0x00000007 (7)

drv probe link

Link detected: yes

$lspci -vvv -k

1a:00.0 Ethernet controller: Intel Corporation Ethernet Connection X722 for 10GBASE-T (rev 09)

DeviceName: Intel LAN X557 #1

Subsystem: Super Micro Computer Inc Ethernet Connection X722 for 10GBASE-T

Control: I/O- Mem+ BusMaster+ SpecCycle- MemWINV- VGASnoop- ParErr+ Stepping- SERR+ FastB2B- DisINTx+

Status: Cap+ 66MHz- UDF- FastB2B- ParErr- DEVSEL=fast >TAbort- <TAbort- <MAbort- >SERR- <PERR- INTx-

Latency: 0, Cache Line Size: 32 bytes

Interrupt: pin A routed to IRQ 38

NUMA node: 0

IOMMU group: 9

Region 0: Memory at 39fffe000000 (64-bit, prefetchable) [size=16M]

Region 3: Memory at 39ffff808000 (64-bit, prefetchable) [size=32K]

Expansion ROM at aac80000 [disabled] [size=512K]

Capabilities: [40] Power Management version 3

Flags: PMEClk- DSI+ D1- D2- AuxCurrent=0mA PME(D0+,D1-,D2-,D3hot+,D3cold+)

Status: D0 NoSoftRst+ PME-Enable- DSel=0 DScale=1 PME-

Capabilities: [50] MSI: Enable- Count=1/1 Maskable+ 64bit+

Address: 0000000000000000 Data: 0000

Masking: 00000000 Pending: 00000000

Capabilities: [70] MSI-X: Enable+ Count=129 Masked-

Vector table: BAR=3 offset=00000000

PBA: BAR=3 offset=00001000

Capabilities: [a0] Express (v2) Endpoint, MSI 00

DevCap: MaxPayload 512 bytes, PhantFunc 0, Latency L0s <512ns, L1 <64us

ExtTag+ AttnBtn- AttnInd- PwrInd- RBE+ FLReset+ SlotPowerLimit 0W

DevCtl: CorrErr- NonFatalErr- FatalErr+ UnsupReq-

RlxdOrd+ ExtTag+ PhantFunc- AuxPwr- NoSnoop- FLReset-

MaxPayload 256 bytes, MaxReadReq 512 bytes

DevSta: CorrErr+ NonFatalErr- FatalErr- UnsupReq+ AuxPwr+ TransPend-

LnkCap: Port #0, Speed 2.5GT/s, Width x1, ASPM L0s L1, Exit Latency L0s <64ns, L1 <1us

ClockPM- Surprise- LLActRep- BwNot- ASPMOptComp+

LnkCtl: ASPM Disabled; RCB 64 bytes, Disabled- CommClk+

ExtSynch- ClockPM- AutWidDis- BWInt- AutBWInt-

LnkSta: Speed 2.5GT/s, Width x1

TrErr- Train- SlotClk+ DLActive- BWMgmt- ABWMgmt-

DevCap2: Completion Timeout: Range AB, TimeoutDis+ NROPrPrP- LTR-

10BitTagComp- 10BitTagReq- OBFF Not Supported, ExtFmt- EETLPPrefix-

EmergencyPowerReduction Not Supported, EmergencyPowerReductionInit-

FRS- TPHComp- ExtTPHComp-

AtomicOpsCap: 32bit- 64bit- 128bitCAS-

DevCtl2: Completion Timeout: 50us to 50ms, TimeoutDis- LTR- 10BitTagReq- OBFF Disabled,

AtomicOpsCtl: ReqEn-

LnkCtl2: Target Link Speed: 2.5GT/s, EnterCompliance- SpeedDis-

Transmit Margin: Normal Operating Range, EnterModifiedCompliance- ComplianceSOS-

Compliance Preset/De-emphasis: -6dB de-emphasis, 0dB preshoot

LnkSta2: Current De-emphasis Level: -6dB, EqualizationComplete- EqualizationPhase1-

EqualizationPhase2- EqualizationPhase3- LinkEqualizationRequest-

Retimer- 2Retimers- CrosslinkRes: unsupported

Capabilities: [e0] Vital Product Data

Product Name: Example VPD

Read-only fields:

[V0] Vendor specific:

[RV] Reserved: checksum good, 0 byte(s) reserved

End

Capabilities: [100 v2] Advanced Error Reporting

UESta: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-

UEMsk: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq+ ACSViol-

UESvrt: DLP+ SDES- TLP- FCP+ CmpltTO- CmpltAbrt- UnxCmplt- RxOF+ MalfTLP+ ECRC- UnsupReq- ACSViol-

CESta: RxErr- BadTLP- BadDLLP- Rollover- Timeout- AdvNonFatalErr+

CEMsk: RxErr- BadTLP- BadDLLP- Rollover- Timeout- AdvNonFatalErr+

AERCap: First Error Pointer: 00, ECRCGenCap+ ECRCGenEn- ECRCChkCap+ ECRCChkEn-

MultHdrRecCap- MultHdrRecEn- TLPPfxPres- HdrLogCap-

HeaderLog: 00000000 00000000 00000000 00000000

Capabilities: [140 v1] Device Serial Number a4-c4-6e-ff-ff-6b-1f-ac

Capabilities: [150 v1] Alternative Routing-ID Interpretation (ARI)

ARICap: MFVC- ACS-, Next Function: 1

ARICtl: MFVC- ACS-, Function Group: 0

Capabilities: [160 v1] Single Root I/O Virtualization (SR-IOV)

IOVCap: Migration- 10BitTagReq- Interrupt Message Number: 000

IOVCtl: Enable- Migration- Interrupt- MSE- ARIHierarchy+ 10BitTagReq-

IOVSta: Migration-

Initial VFs: 32, Total VFs: 32, Number of VFs: 0, Function Dependency Link: 00

VF offset: 16, stride: 1, Device ID: 37cd

Supported Page Size: 00000553, System Page Size: 00000001

Region 0: Memory at 000039ffff400000 (64-bit, prefetchable)

Region 3: Memory at 000039ffff890000 (64-bit, prefetchable)

VF Migration: offset: 00000000, BIR: 0

Capabilities: [1a0 v1] Transaction Processing Hints

Device specific mode supported

No steering table available

Capabilities: [1b0 v1] Access Control Services

ACSCap: SrcValid- TransBlk- ReqRedir- CmpltRedir- UpstreamFwd- EgressCtrl- DirectTrans-

ACSCtl: SrcValid- TransBlk- ReqRedir- CmpltRedir- UpstreamFwd- EgressCtrl- DirectTrans-

Kernel driver in use: i40e

Kernel modules: i40e

$dmesg | grep -e eth0 -e eno1 -e i40e -e eth1 -e eno2

[ 2.998794] i40e: Intel(R) Ethernet Connection XL710 Network Driver

[ 3.002532] i40e: Copyright (c) 2013 - 2019 Intel Corporation.

[ 3.175949] i40e 0000:1a:00.0: fw 3.1.52349 api 1.5 nvm 3.25 0x800009e7 1.1638.0 [8086:37d2] [15d9:37d2]

[ 3.221237] i40e 0000:1a:00.0: MAC address: ac:1f:6b:6e:c4:a4

[ 3.227714] i40e 0000:1a:00.0: FW LLDP is enabled

[ 3.343716] i40e 0000:1a:00.0: Added LAN device PF0 bus=0x1a dev=0x00 func=0x00

[ 3.354455] i40e 0000:1a:00.0: Features: PF-id[0] VFs: 32 VSIs: 66 QP: 72 RSS FD_ATR FD_SB NTUPLE DCB VxLAN Geneve PTP VEPA

[ 3.461103] i40e 0000:1a:00.1: fw 3.1.52349 api 1.5 nvm 3.25 0x800009e7 1.1638.0 [8086:37d2] [15d9:37d2]

[ 3.467960] i40e 0000:1a:00.1: MAC address: ac:1f:6b:6e:c4:a5

[ 3.470981] i40e 0000:1a:00.1: FW LLDP is enabled

[ 3.481559] i40e 0000:1a:00.1: Added LAN device PF1 bus=0x1a dev=0x00 func=0x01

[ 3.493325] i40e 0000:1a:00.1: Features: PF-id[1] VFs: 32 VSIs: 66 QP: 72 RSS FD_ATR FD_SB NTUPLE DCB VxLAN Geneve PTP VEPA

[ 5.376134] i40e 0000:1a:00.0 eno1: renamed from eth0

[ 5.587444] i40e 0000:1a:00.1 eno2: renamed from eth1

[241870.327714] br0: port 1(eno1) entered blocking state

[241870.328044] br0: port 1(eno1) entered disabled state

[241870.328437] device eno1 entered promiscuous mode

[241870.332808] i40e 0000:1a:00.0: entering allmulti mode.

[241975.863152] br0: port 1(eno1) entered disabled state

[241975.974621] device eno1 left promiscuous mode

[241975.974937] br0: port 1(eno1) entered disabled state

[242185.211843] br0: port 1(eno1) entered blocking state

[242185.212114] br0: port 1(eno1) entered disabled state

[242185.212448] device eno1 entered promiscuous mode

[242185.216437] i40e 0000:1a:00.0: entering allmulti mode.

[242415.790511] br0: port 1(eno1) entered disabled state

[242415.831123] device eno1 left promiscuous mode

[242415.831675] br0: port 1(eno1) entered disabled state

[242415.856742] i40e 0000:1a:00.0 eno1: already using mac address ac:1f:6b:6e:c4:a4

[242415.887397] bond2: (slave eno1): Enslaving as a backup interface with a down link

[242415.972156] i40e 0000:1a:00.1 eno2: set new mac address ac:1f:6b:6e:c4:a4

[242416.023427] bond2: (slave eno2): Enslaving as a backup interface with a down link

[242476.941935] bond2: (slave eno1): Releasing backup interface

[242476.942263] bond2: (slave eno1): the permanent HWaddr of slave - ac:1f:6b:6e:c4:a4 - is still in use by bond - set the HWaddr of slave to a different address to avoid conflicts

[242477.034365] i40e 0000:1a:00.0 eno1: already using mac address ac:1f:6b:6e:c4:a4

[242477.038030] br0: port 1(eno1) entered blocking state

[242477.038381] br0: port 1(eno1) entered disabled state

[242477.038744] device eno1 entered promiscuous mode

[242477.040858] i40e 0000:1a:00.0: entering allmulti mode.

[242477.647118] bond2 (unregistering): (slave eno2): Releasing backup interface

[242477.685996] i40e 0000:1a:00.1 eno2: returning to hw mac address ac:1f:6b:6e:c4:a5

[242541.301871] br0: port 1(eno1) entered disabled state

[242541.423923] device eno1 left promiscuous mode

[242541.424250] br0: port 1(eno1) entered disabled state

[242600.975828] i40e 0000:1a:00.0 eno1: already using mac address ac:1f:6b:6e:c4:a4

[242601.030305] bond2: (slave eno1): Enslaving as a backup interface with a down link

[242601.168014] i40e 0000:1a:00.1 eno2: set new mac address ac:1f:6b:6e:c4:a4

[242601.222243] bond2: (slave eno2): Enslaving as a backup interface with a down link

$uname -a

Linux tank 6.1.63-production+truenas #2 SMP PREEMPT_DYNAMIC Tue Jan 23 22:31:02 UTC 2024 x86_64 GNU/Li

$cat /etc/version

23.10.1