Hi all,

I'm not usually one to post on forums as I like to find solutions myself, but I'm at a dead end here after spending 60+ hours reading about TrueNAS and ZFS and trying to optimise my setup.

So my setup:

- HPE DL360 Gen9

- HPE FLR544 FlexLOM Dual 40GbE (Mellanox ConnectX-3 Pro)

- 2x Intel Xeon E5-2650 v4 2.2 GHz

- Sonnet Technologies Fusion M.2 4X4 PCIe card (has a PLX chip on it so no need for bifurcation support from the motherboard)

- 128 GB (8x 16GB RDIMM 2400 MHz Samsung DIMMs)

- Running on ESXi 7.0.3

- 4x Lexar NM790 2TB SSDs

My TrueNAS Core VM has the following specs:

- 16 vCPUs

- 64GB RAM

- 60 GB Boot Disk

- Sonnet Tech Fusion card is passed through (well, all the NVMe SSDs are passed through).

- 2 interfaces: one for MGMT and one for iSCSI/SMB/NFS etc. They are on separate VLANs.

I have 2 DSwitches: one for all my VM traffic and ESXi MGMT, and one dedicated to 'vSAN' that I use for storage. In the vSAN one, only the 40GbE NICs are present as uplinks. I have a separate VLAN for the 'vSAN' network. Please don't mind the name; it's because I was originally planning on using vSAN.

The 2 physical hosts are connected together via a DAC cable, so no switching is done for that VLAN.

Each ESXi instance has a separate vmkernel interface in that VLAN/subnet, and I have jumbo frames enabled along the whole path, including on the interface in TrueNAS.
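As a rough sanity check on what the 40GbE link itself could carry, here is a back-of-envelope sketch. It assumes IPv4 and TCP without options, one 802.1Q tag, and an MTU of 9000 for the jumbo frames; it ignores iSCSI PDU headers entirely. The function names and constants are my own illustration, not measured values from this setup.

```python
# Back-of-envelope only: every constant below is an assumption, not a measurement.
ETH_HEADER = 14     # destination MAC + source MAC + EtherType
VLAN_TAG = 4        # 802.1Q tag, since the storage network sits on its own VLAN
FCS = 4             # frame check sequence
PREAMBLE_IFG = 20   # 8-byte preamble/SFD + 12-byte minimum inter-frame gap
IPV4_HEADER = 20    # assumes no IP options
TCP_HEADER = 20     # assumes no TCP options (timestamps would add 12 bytes)


def tcp_payload_efficiency(mtu: int) -> float:
    """Fraction of on-wire bytes that end up as TCP payload for full-size frames."""
    payload = mtu - IPV4_HEADER - TCP_HEADER
    on_wire = mtu + ETH_HEADER + VLAN_TAG + FCS + PREAMBLE_IFG
    return payload / on_wire


def max_goodput_gb_per_s(link_gbit: float, mtu: int) -> float:
    """Upper bound on TCP payload throughput in GB/s (decimal) for a given link."""
    return link_gbit / 8 * tcp_payload_efficiency(mtu)


if __name__ == "__main__":
    for mtu in (1500, 9000):
        eff = tcp_payload_efficiency(mtu)
        print(f"MTU {mtu}: efficiency {eff:.4f}, "
              f"ceiling {max_goodput_gb_per_s(40, mtu):.2f} GB/s")
```

This only bounds what the wire can carry with full-size frames; it says nothing about iSCSI, ZFS or the SSDs themselves.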

The issue I have is that no matter what config I try, the performance is nowhere near what a single one of those SSDs can do.

I even tried installing TrueNAS Core bare metal with the full resources of the server, but to no avail. Performance stayed the same.

I am running TrueNAS Core 13.0-U5.2 at the moment, but I have also tried SCALE 22.12 and even the 23.12 nightly. All gave me the same results.

I have 2 VDEVs that each have 2 NVMe disks in a mirror, so the two mirrors are striped together.

I also tried RAID-Z1 and Z2 just for diagnostic purposes, but performance is the same on all of them.

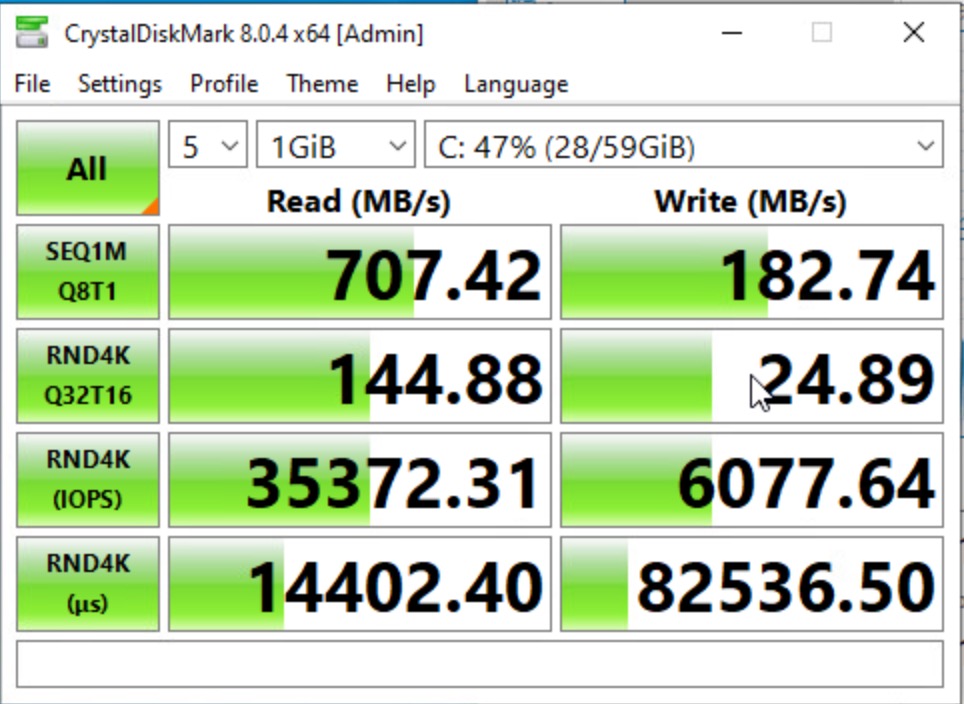

Here's an image of the kind of performance I get with CrystalDiskMark and the NVME + Max Performance profile:

My IOPS seem to be at the lower end of what I would expect, but the writes especially are a BIG issue for me. I tried 32K and 128K record sizes on the ZVOL, but results stay mostly the same: maybe a 1-5 MB/s improvement between the settings.

Has anyone got any ideas? I'm really hitting a dead end here.

Also, can anyone give me a correct way to test with fio locally on TrueNAS? I found a lot of different ways to test with different I/O engines, but it would help if someone could point me in the right direction for my setup.

PS: CPU utilisation is not high while performing the test over iSCSI.

I also tried NFSv4, but that gave me half of the IOPS.
