Hi, I recently started working with ESXi, FreeNAS, pfSense and some Windows VMs.

I develop software and offer some services (online backup), and I was looking for an energy-efficient way to separate my data from my customers' data.

So I got a Xeon D-1540, 32 GB of DDR4 ECC RAM, an LSI SAS 3008, 3x 4 TB WD Red Pro drives, and an SSD to boot from and to store the VMs on.

So far so good :)

I installed FreeNAS, flashed the SAS card to IT firmware version 9 (green light in FreeNAS), and created a CIFS share and a zvol exported over iSCSI. The iSCSI LUN is presented to ESXi and can be given to one VM as a drive (once this problem is solved, I will pass it to the DMZ VM).

The CIFS share is accessible from the LAN (where the FreeNAS server is also located).

This way, my data and my customers' data are nicely separated.

The VM has 8 GB of RAM, and FreeNAS also has 8 GB. I tested with 16 zip files of 1 GB each. The network controller is an Intel I350 (igb driver, 1500 MTU).

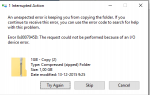

The problem:

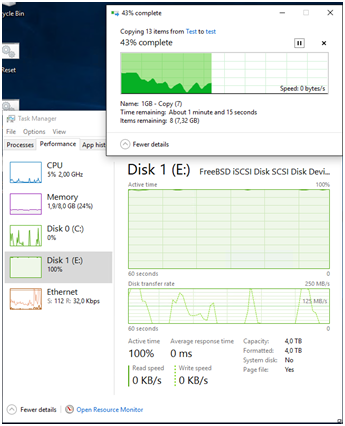

iSCSI performance is irregular.

I ran some tests (please tell me whether these numbers are as expected or too low, and where I should look to find the cause!):

- SSD to SSD, same PC: 145 MB/s
- SSD on PC1 -> SSD in the VM: 112 MB/s (network speed)
- SSD in the VM -> CIFS share: 250-260 MB/s
- CIFS share -> SSD in the VM: 150-200 MB/s
- SSD in the VM -> iSCSI: 20-250 MB/s (irregular)
- iSCSI -> VM: 20-250 MB/s (irregular)
- CIFS share -> SSD on PC1: 80-90 MB/s (over the network)
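For reference when judging these numbers: a 1 Gbit/s link carries at most 125 MB/s on the wire, and Ethernet framing plus TCP/IP headers take part of that. The sketch below estimates the best-case TCP payload rate under stated assumptions (IPv4, TCP timestamps enabled, standard Ethernet preamble, FCS and inter-frame gap); the function names are hypothetical and this is a back-of-the-envelope calculation, not a measurement of this setup.

```python
# Rough upper bound on TCP payload throughput over Ethernet.
# Assumptions: IPv4 (20-byte header), TCP with the timestamp option (32-byte header),
# and 38 bytes of per-frame wire overhead (preamble 8 + MAC header 14 + FCS 4 + inter-frame gap 12).

ETH_WIRE_OVERHEAD = 8 + 14 + 4 + 12  # bytes on the wire beyond the MTU-sized IP packet
IP_HEADER = 20
TCP_HEADER = 32  # 20-byte base header + 12 bytes of timestamp option


def max_tcp_goodput_mb_s(link_gbit_s: float, mtu: int) -> float:
    """Best-case TCP payload rate in MB/s (1 MB = 10**6 bytes)."""
    payload = mtu - IP_HEADER - TCP_HEADER  # user data carried per frame
    on_wire = mtu + ETH_WIRE_OVERHEAD       # bytes the frame occupies on the link
    line_rate_mb_s = link_gbit_s * 1e9 / 8 / 1e6
    return line_rate_mb_s * payload / on_wire


def mb_to_mib(mb_s: float) -> float:
    """Convert decimal MB/s to binary MiB/s (1 MiB = 2**20 bytes)."""
    return mb_s * 1e6 / 2**20


if __name__ == "__main__":
    for mtu in (1500, 9000):
        rate = max_tcp_goodput_mb_s(1, mtu)
        print(f"1 GbE, MTU {mtu}: ~{rate:.1f} MB/s (~{mb_to_mib(rate):.1f} MiB/s)")
```

With MTU 1500 this comes out at roughly 117.7 MB/s, or about 112.2 MiB/s if the copy dialog counts in binary units (Windows Explorer does), so the 112 MB/s PC1-to-VM result looks like a saturated gigabit link. The 250-260 MB/s VM-to-CIFS figure is above that ceiling, which presumably means that traffic stays on the ESXi virtual switch and never crosses the physical I350.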

What concerns me most is the irregular iSCSI speed.

If you look at the disk transfer rate, you can see that it drops to 0 several times.
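To put numbers on those drops to 0, one option is a small logging script run inside the VM: it records when each chunk actually reaches the disk, then buckets the completions into one-second intervals, so a stall shows up as one or more 0 MB/s intervals instead of being averaged away. This is a minimal sketch assuming Python 3.9+ in the VM; all function names are hypothetical. Calling `os.fsync` after every chunk is a deliberate choice so the guest's page cache does not hide stalls, which also makes the numbers somewhat lower than a normal file copy.

```python
import os
import time


def timed_write(path: str, total_bytes: int, chunk_size: int = 8 * 1024 * 1024) -> list[tuple[float, int]]:
    """Write total_bytes to path in chunks, fsync-ing after each chunk.

    Returns (seconds since start, bytes written) for every completed chunk.
    """
    # Random data, so compression on the storage side (e.g. ZFS) cannot shortcut the write.
    chunk = os.urandom(chunk_size)
    events = []
    written = 0
    start = time.monotonic()
    with open(path, "wb") as f:
        while written < total_bytes:
            n = min(chunk_size, total_bytes - written)
            f.write(chunk[:n])
            f.flush()             # hand Python's buffer to the OS
            os.fsync(f.fileno())  # wait until the OS reports the data as written to the disk
            written += n
            events.append((time.monotonic() - start, n))
    return events


def per_interval_mb_s(events: list[tuple[float, int]], interval: float = 1.0) -> list[float]:
    """Bucket chunk completions into fixed intervals; intervals with no completions are 0."""
    if not events:
        return []
    buckets = [0] * (int(events[-1][0] // interval) + 1)
    for t, n in events:
        buckets[int(t // interval)] += n
    return [b / interval / 1e6 for b in buckets]


def find_stalls(rates: list[float], threshold_mb_s: float = 1.0) -> list[int]:
    """Indices of intervals whose throughput is below threshold_mb_s."""
    return [i for i, r in enumerate(rates) if r < threshold_mb_s]
```

To use it, call `timed_write()` with a path on the iSCSI-backed drive (for example a hypothetical `E:\iscsi_test.bin`) and a size of a few GB, pass the result to `per_interval_mb_s()`, and print `find_stalls()` on that. Running the same script against the CIFS share and the local SSD gives comparable per-second traces for the other paths in the test list.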

How do I go about solving this?

Thanks for the help,

Reinier
