Current version - TrueNAS-13.0-U5.2 (haven't updated because of corruption)

Specs -

Intel Xeon E5-2650L v2 (40 threads)

383.9 GiB DDR3 ECC RAM

Network - 10GbE Mellanox ConnectX-3 (MCX311A)

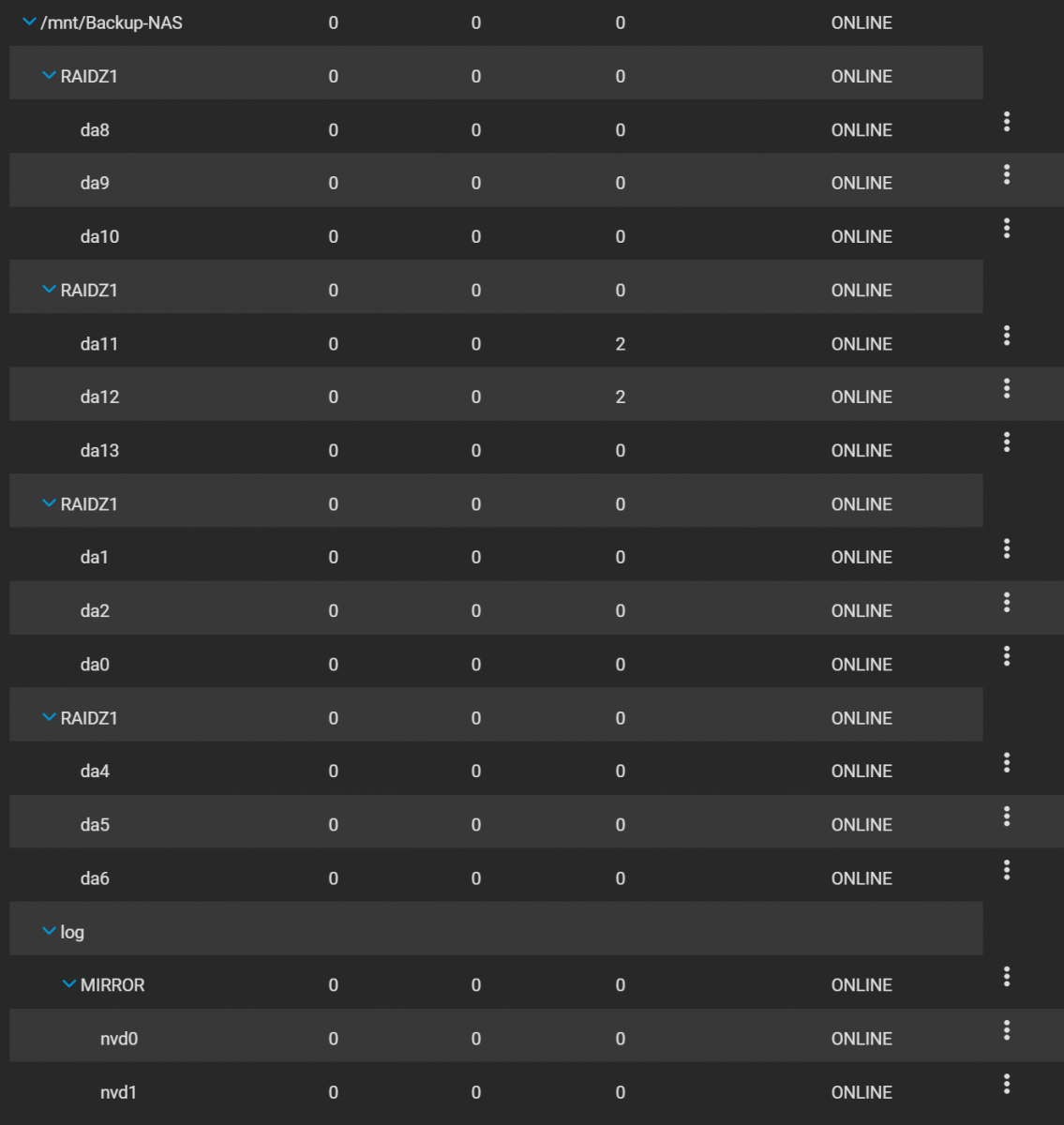

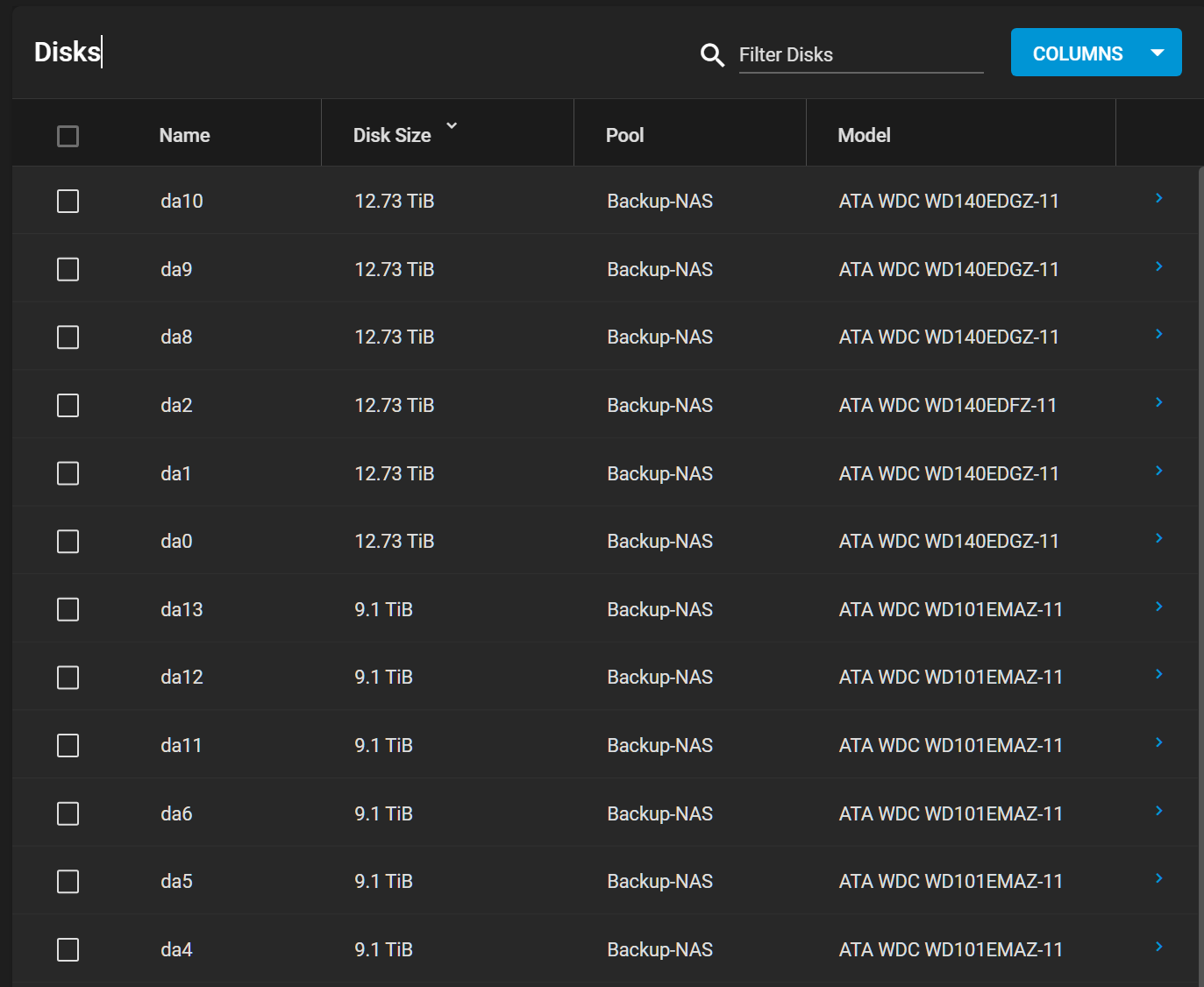

Pool Layout -

Disks:

Long story short: A bad RAM module (replaced and validated RAM integrity) and potentially some impatient upgrading of disks (didn't know resilvers can't run in parallel) caused some corruption in my pool.

Specifically 2 of 3 disks on one of my vdevs report 2 checksum errors and a permanent error in was found.

There was also another file that had a permanent error. I deleted the file (wasn't precious data) and the snapshots that had that file in it. I also deleted the folder that file was in as well.

I have cleared the error and scrubbed the pool multiple times. So far the metadata error still exists.

Are there any steps to identify the problem metadata and rebuild it? Or do I need to rebuild the pool to get rid of the error? From what I can tell the rest of the data on the pool is intact with no issues. Should I try deleting all snapshots I have on my pool and see if that clears things up?

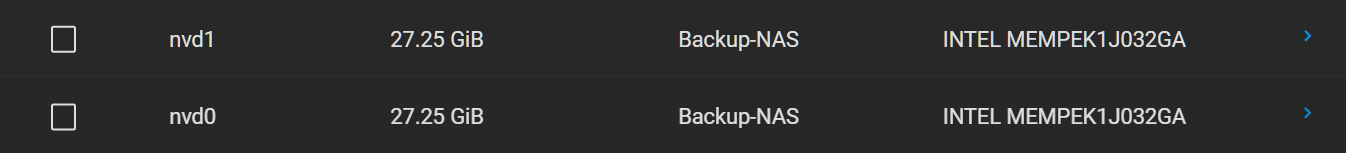

I am running to see if it will be able to tell me any more details about the metadata error.

Current output:

So so far there are 4 metadata pointers that are unreadable.

What should I do with this information? ZDB isn't super well documented so I am kind of lost.

Thanks for reading!

EDIT:

Specs -

Intel Xeon E5-2650L v2 (40 threads)

383.9 GiB DDR3 ECC RAM

Network - 10GbE Mellanox Connect-X3 (MCX311A)

Pool Layout -

Long story short: A bad RAM module (replaced and validated RAM integrity) and potentially some impatient upgrading of disks (didn't know resilvers can't run in parallel) caused some corruption in my pool.

Specifically 2 of 3 disks on one of my vdevs report 2 checksum errors and a permanent error in

Code:

<metadata>:<0x0>

There was also another file that had a permanent error. I deleted the file (wasn't precious data) and the snapshots that had that file in it. I also deleted the folder that file was in as well.

I have cleared the error and scrubbed the pool multiple times. So far the metadata error still exists.

Are there any steps to identify the problem metadata and rebuild it? Or do I need to rebuild the pool to get rid of the error? From what I can tell the rest of the data on the pool is intact with no issues. Should I try deleting all snapshots I have on my pool and see if that clears things up?

I am running

Code:

zdb -U /data/zfs/zpool.cache -c Backup-NAS

Current output:

So so far there are 4 metadata pointers that are unreadable.

What should I do with this information? ZDB isn't super well documented so I am kind of lost.

Thanks for reading!

EDIT:

An update:

Exporting the pool, reimporting it, and then scrubbing it cleared the error.

This was one of the solutions I found.

Basically:

After resolving the corrupted data in the pool:

1. Scrub
2. Export
3. Import
4. Scrub

Repeat if metadata error isn't gone.
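For anyone who wants to script it, here is a rough sketch of that loop, not a tested recovery tool. It assumes an OpenZFS version where `zpool scrub -w` (wait for the scrub to finish) is available, and it treats the line `errors: No known data errors` in `zpool status -v` as the sign that the pool is clean. The names `ZPOOL`, `MAX_PASSES`, `pool_is_clean`, `scrub_cycle` and `clear_metadata_error` are made up for illustration. On TrueNAS you may prefer to do the export and import steps from the web UI instead.

```shell
#!/bin/sh
# Sketch of the scrub -> export -> import -> scrub loop described above.
# ZPOOL and MAX_PASSES are illustrative names, not from the original post.
ZPOOL="${ZPOOL:-zpool}"
MAX_PASSES="${MAX_PASSES:-3}"

# Succeeds when `zpool status -v` reports no known data errors for the pool.
pool_is_clean() {
    "$ZPOOL" status -v "$1" | grep -q 'errors: No known data errors'
}

# One pass: scrub, export, import, scrub.
# Assumption: -w makes `zpool scrub` block until the scrub completes.
scrub_cycle() {
    "$ZPOOL" scrub -w "$1" &&
    "$ZPOOL" export "$1" &&
    "$ZPOOL" import "$1" &&
    "$ZPOOL" scrub -w "$1"
}

# Repeat the cycle until the pool is clean or MAX_PASSES is reached.
# Returns 0 when clean, 1 if a zpool command failed, 2 if errors remain.
clear_metadata_error() {
    pool="$1"
    pass=1
    while [ "$pass" -le "$MAX_PASSES" ]; do
        scrub_cycle "$pool" || return 1
        if pool_is_clean "$pool"; then
            echo "clean after $pass pass(es)"
            return 0
        fi
        pass=$((pass + 1))
    done
    echo "errors still present after $MAX_PASSES pass(es)"
    return 2
}
```

It would be called as `clear_metadata_error Backup-NAS`. Capping the number of passes is a deliberate choice: if the error survives several full cycles, more scrubbing is unlikely to help and it is time to look at other options.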
