I am looking into options for building a smallish but fast NAS for a small business. I am also considering a TrueNAS Mini X+, but I'd like to check out the DIY options first. I might be in over my head on this. If anyone has input or recommendations, I would truly appreciate it.

Situation:

- SMB share use only, all macOS clients

- 5TB minimum of usable space in a 'RAID 10' config

- At least 300-400 MB/s of disk throughput for each user, i.e., 1GbE is not enough, 2.5GbE might be

- Backed up to a portable hard drive every night. Is an all-SSD build overkill or too risky?
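As a sanity check on the link-speed point, here is a rough sketch of the arithmetic, reading the target as 300-400 megabytes per second. It only computes the theoretical line rate in decimal units and ignores TCP/IP and SMB overhead, so real-world numbers will be lower. The function names are just illustrative.

```python
# Theoretical Ethernet line rates, ignoring protocol overhead (an assumption
# that makes these figures optimistic compared to real SMB transfers).
LINK_SPEEDS_GBPS = {"1GbE": 1.0, "2.5GbE": 2.5, "10GbE": 10.0}


def line_rate_mb_per_s(gbps: float) -> float:
    """Convert gigabits per second to megabytes per second (decimal units)."""
    return gbps * 1000 / 8


def meets_target(gbps: float, target_mb_per_s: float) -> bool:
    """True if the raw line rate is at least the requested throughput."""
    return line_rate_mb_per_s(gbps) >= target_mb_per_s


for name, gbps in LINK_SPEEDS_GBPS.items():
    rate = line_rate_mb_per_s(gbps)
    print(
        f"{name}: {rate:.1f} MB/s "
        f"(300 MB/s ok: {meets_target(gbps, 300)}, "
        f"400 MB/s ok: {meets_target(gbps, 400)})"
    )
```

By this estimate 1GbE tops out at 125 MB/s, 2.5GbE at about 312 MB/s (enough for the low end of the target only, and only for one user at a time), and 10GbE at 1250 MB/s shared across everyone hitting the NAS.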

Possible setup:

4x4TB 3.5" HDD

1x1TB SSD read cache

1x256GB SSD OS disk

16GB ECC RAM

10GbE RJ45 NIC

Intel processors are what I am used to
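To check the 4x4TB idea against the 5TB minimum, here is a minimal sketch of the capacity math. It assumes a conventional RAID 10 layout (striped two-way mirrors, so half the raw space is usable) and does not account for filesystem overhead or free-space headroom. The helper names are made up for illustration.

```python
def raid10_usable_tb(num_disks: int, disk_tb: float) -> float:
    """Usable capacity of striped two-way mirrors, before filesystem overhead."""
    if num_disks < 4 or num_disks % 2 != 0:
        raise ValueError("RAID 10 needs an even number of disks, at least 4")
    # Each mirror pair contributes the capacity of one disk.
    return (num_disks // 2) * disk_tb


def tb_to_tib(tb: float) -> float:
    """Convert decimal terabytes (as sold) to binary tebibytes (as often reported)."""
    return tb * 10**12 / 2**40


usable_tb = raid10_usable_tb(num_disks=4, disk_tb=4.0)
print(f"Usable: {usable_tb:.1f} TB ({tb_to_tib(usable_tb):.2f} TiB)")
print(f"Meets 5 TB minimum: {usable_tb >= 5.0}")
```

Under those assumptions, four 4TB drives give 8 TB usable (about 7.28 TiB), which clears the 5TB minimum with some room to spare.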

Supermicro Barebones 5029C-T

www.newegg.com

This might fit, but I would need to find a compatible NIC. Suggestions? I see that Intel is highly recommended.


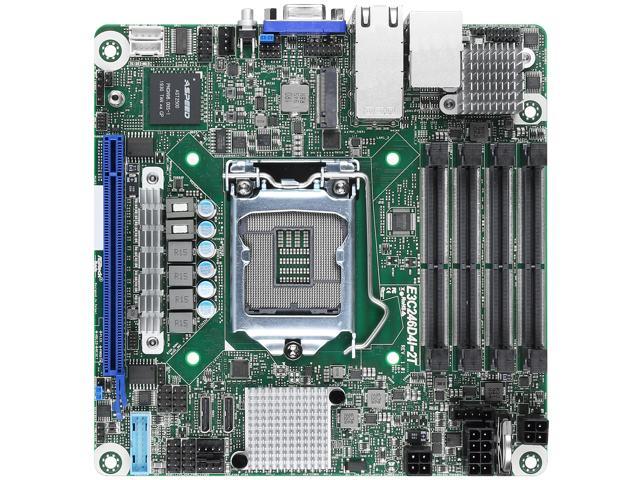

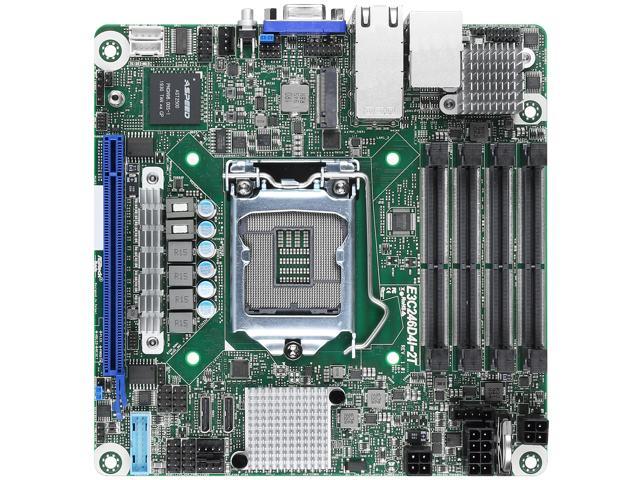

ASRock Rack E3C246D4I-2T

www.newegg.com

This looks really good. I don't know much about OCuLink. Is it reliable? The board has a built-in 10GbE NIC based on the Intel X550. Does that mean it is compatible with FreeNAS?


iStarUSA S-35-B5SA

www.bhphotovideo.com

This is just a nice-looking mini-ITX enclosure as an alternative to the Supermicro.


