2 × 18 TB HDD stripe pool

I clicked Expand in the web UI, and it damaged MainPool. How can I recover the data?


1. After I clicked Expand, the system reported this error:

```
[EFAULT] Command partprobe /dev/sdc failed (code 1): Error: Partition(s) 2 on /dev/sdc have been written, but we have been unable to inform the kernel of the change, probably because it/they are in use. As a result, the old partition(s) will remain in use. You should reboot now before making further changes.
```


2. MainPool is a stripe of two disks. When the error appeared, the page showed one of the disks as unallocated. Sensing something was wrong, I rebooted immediately. After the reboot, both disks were shown as uninitialized and the pool status was Offline.


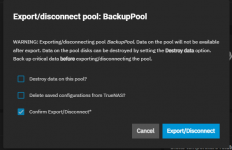

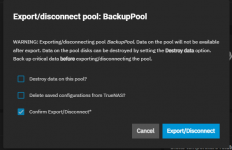

3. I then ran Export/Disconnect on MainPool.


4. Import Pool now lists nothing. Does this mean my data is destroyed?


I'd also like to know what Expand actually does. I clicked it on backupPool, which is a single-disk pool, and nothing changed.



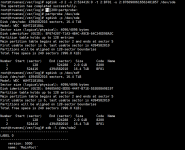

```
root@truenas[/dev/disk/by-partuuid]# zpool import
   pool: MainPool
     id: 8760900616562481857
  state: UNAVAIL
 status: One or more devices contains corrupted data.
 action: The pool cannot be imported due to damaged devices or data.
    see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-5E
 config:

        MainPool                                UNAVAIL  insufficient replicas
          5dcf2847-0f8f-42c9-a75a-33ce8e7b78e9  UNAVAIL  invalid label
          e99f8385-9e1c-44e4-91c7-4b1b8a6b160f  ONLINE
root@truenas[/dev/disk/by-partuuid]#
```

```
root@truenas[~]# zdb -l /dev/sdd2
failed to unpack label 0
failed to unpack label 1
------------------------------------
LABEL 2 (Bad label cksum)
------------------------------------
    version: 5000
    name: 'MainPool'
    state: 0
    txg: 13065955
    pool_guid: 8760900616562481857
    errata: 0
    hostid: 2139740830
    hostname: 'truenas'
    top_guid: 6877940474972169588
    guid: 6877940474972169588
    vdev_children: 2
    vdev_tree:
        type: 'disk'
        id: 0
        guid: 6877940474972169588
        path: '/dev/disk/by-partuuid/5dcf2847-0f8f-42c9-a75a-33ce8e7b78e9'
        metaslab_array: 143
        metaslab_shift: 34
        ashift: 12
        asize: 17998054948864
        is_log: 0
        DTL: 117795
        create_txg: 4
    features_for_read:
        com.delphix:hole_birth
        com.delphix:embedded_data
        com.klarasystems:vdev_zaps_v2
    labels = 2 3
```
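The `asize` in the surviving label can be checked against the partition sizes in the `sfdisk` dumps further down. This is a minimal sketch that assumes the OpenZFS leaf-vdev sizing rule: round the partition down to a multiple of the 256 KiB label size, then subtract 4 MiB at the front (two labels plus the boot area) and 512 KiB at the end (the other two labels). `leaf_asize` is a hypothetical helper for illustration, not a ZFS tool.

```python
# Sketch: reproduce the `asize` recorded in a ZFS label from a partition size.
# Assumption: OpenZFS rounds the leaf device size down to a multiple of the
# 256 KiB label size, then subtracts 4 MiB reserved at the front
# (two labels + boot area) and 512 KiB at the end (two labels).

LABEL_SIZE = 256 * 1024
FRONT_RESERVE = 4 * 1024 * 1024
END_RESERVE = 512 * 1024

LABEL_ASIZE = 17998054948864  # value from `zdb -l /dev/sdd2`


def leaf_asize(size_sectors: int, sector_size: int) -> int:
    """Hypothetical helper: allocatable bytes ZFS would derive from a partition."""
    size_bytes = size_sectors * sector_size
    aligned = size_bytes - (size_bytes % LABEL_SIZE)  # round down to label size
    return aligned - FRONT_RESERVE - END_RESERVE


# Partition sizes (in 4096-byte sectors) taken from the sfdisk dumps
candidates = {
    "sdd2 as it is now": 4390386683,
    "same size as sde2": 4394057595,
}

for name, size in candidates.items():
    asize = leaf_asize(size, 4096)
    print(f"{name}: asize={asize}, matches label: {asize == LABEL_ASIZE}")
```

Under that assumption, a partition the size of `sde2` (4394057595 sectors) reproduces the recorded `asize` exactly, while the current `sdd2` size gives 17983018893312 bytes. That suggests, but does not prove, that `sdd2` had the same size as `sde2` before the Expand attempt.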

```
root@truenas[~]# sfdisk -d /dev/sdd
label: gpt
label-id: 8F674207-71A3-4B4C-A9CB-64C24ED98A3C
device: /dev/sdd
unit: sectors
first-lba: 6
last-lba: 4394582010
sector-size: 4096

/dev/sdd1 : start= 128, size= 524161, type=0657FD6D-A4AB-43C4-84E5-0933C84B4F4F, uuid=24C14E32-7AE7-4D5D-87EF-C03AFB3B55C2
/dev/sdd2 : start= 4195328, size= 4390386683, type=6A898CC3-1DD2-11B2-99A6-080020736631, uuid=5DCF2847-0F8F-42C9-A75A-33CE8E7B78E9
```
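Converting the `sdd2` entry to bytes shows where the partition now begins. Because `sector-size: 4096`, `start` and `size` count 4 KiB sectors. The 512-byte reading at the end is a hypothetical comparison only; the value 524416 is `sde2`'s start from the `sfdisk` dump of `/dev/sde` below.

```python
# Sketch: convert the sfdisk geometry of /dev/sdd2 into byte offsets.
# sfdisk reports `sector-size: 4096`, so "start" and "size" count 4 KiB sectors.
MIB = 1024 * 1024

start, size, sector = 4195328, 4390386683, 4096
last_lba = 4394582010
sde2_start = 524416  # start of /dev/sde2, from the /dev/sde dump

end = start + size - 1  # last sector covered by the partition
print("sdd2 ends at last-lba:", end == last_lba)
print("sdd2 start offset (MiB):", start * sector / MIB)

# Hypothetical comparison: the same start value read as 512-byte sectors
print("start read as 512-byte sectors (MiB):", start * 512 / MIB)
print("equals sde2's byte offset:", start * 512 == sde2_start * sector)
```

The partition still ends at `last-lba`, but it now starts 16388 MiB into the disk. Read as 512-byte sectors, the same number would be 2048.5 MiB, which is exactly the byte offset where `sde2` starts. One possible, unconfirmed reading is that the new start was computed in 512-byte units on a 4096-byte-sector disk.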

```
root@truenas[~]# sfdisk -d /dev/sde
label: gpt
label-id: B4605A92-EDDE-4447-B71E-553E08A9E197
device: /dev/sde
unit: sectors
first-lba: 6
last-lba: 4394582010
sector-size: 4096

/dev/sde1 : start= 128, size= 524161, type=0657FD6D-A4AB-43C4-84E5-0933C84B4F4F, uuid=9FE3FA6C-6C5D-4386-BC53-850CDE38A42D
/dev/sde2 : start= 524416, size= 4394057595, type=6A898CC3-1DD2-11B2-99A6-080020736631, uuid=E99F8385-9E1C-44E4-91C7-4B1B8A6B160F
```
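ZFS keeps four 256 KiB labels on each device: labels 0 and 1 at the start of the partition, and labels 2 and 3 in the last 512 KiB of its 256 KiB-aligned size. This sketch computes the absolute disk offsets of those labels for the current `sdd2` and for a hypothetical pre-Expand `sdd2` that is *assumed* to have had the same geometry as `sde2`; nothing in the output above confirms that assumption. `label_offsets` is an illustrative helper, not part of any ZFS tool.

```python
# Sketch: absolute disk offsets of the four ZFS labels for two partition layouts.
# Assumption (OpenZFS on-disk format): four 256 KiB labels, L0/L1 at the start
# of the partition, L2/L3 in the last 512 KiB of its 256 KiB-aligned size.
LABEL_SIZE = 256 * 1024
SECTOR = 4096  # sfdisk reports sector-size: 4096 for both disks


def label_offsets(start_sectors: int, size_sectors: int) -> list[int]:
    """Hypothetical helper: byte offsets of labels 0-3 from the start of the disk."""
    base = start_sectors * SECTOR
    psize = size_sectors * SECTOR
    psize -= psize % LABEL_SIZE  # round down to a whole number of labels
    front = [base + i * LABEL_SIZE for i in range(2)]  # labels 0, 1
    back = [base + psize - (2 - i) * LABEL_SIZE for i in range(2)]  # labels 2, 3
    return front + back


current_sdd2 = label_offsets(4195328, 4390386683)
# Hypothetical: the pre-Expand sdd2, assumed to match sde2's geometry
assumed_old_sdd2 = label_offsets(524416, 4394057595)

for n, (new, old) in enumerate(zip(current_sdd2, assumed_old_sdd2)):
    print(f"label {n}: same disk offset = {new == old}, shift = {new - old} bytes")
```

Under that assumption, labels 2 and 3 sit at the same disk offsets in both layouts, because both partitions end at `last-lba` and have the same remainder modulo 256 KiB, while labels 0 and 1 move by 15036055552 bytes (roughly 14 GiB). That is consistent with `zdb -l` finding only `labels = 2 3` on `/dev/sdd2`.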