I know this has been discussed back and forth. We are running FreeNAS on ESXi 5.5 with a local boot and an FC-attached drive array. We mirror the unit to another ESXi server where the FC array is attached for failover. It's tested and has worked every time.

What we notice is that over time (maybe a few weeks) the SAN becomes very slow. We tried disabling sync, which makes a huge difference for the first few days, but after that nothing seems to speed it up or make any difference. In the past, a reboot has brought performance back to normal.

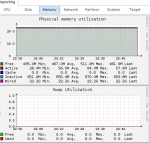

Right now we have 4 vCPUs and 32 GB of RAM assigned. We disabled swap on the disks after reading about some performance tweaks, so I'm not sure whether that's the issue. Once the device starts crawling it's almost unusable, and I cannot get disk I/O anywhere near normal.

Currently, with sync=disabled, I am getting 36/35 MB/s peak. Right after a reboot, with sync disabled, I easily hit 350/300 MB/s.
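In case anyone wants to compare numbers, here is a minimal sketch of a sequential write/read test in Python. It is not the tool that produced the figures above; the function name `sequential_throughput` and the target path in the usage note are placeholders for illustration. It writes incompressible data so that compression does not inflate the result, and it calls `fsync` before stopping the write timer.

```python
import os
import tempfile
import time


def sequential_throughput(directory, size_mb=64, block_kb=1024):
    """Write a scratch file, read it back, and return (write_mb_s, read_mb_s)."""
    # Random bytes are incompressible, so dataset compression cannot skew the result.
    block = os.urandom(block_kb * 1024)
    blocks = (size_mb * 1024) // block_kb

    fd, path = tempfile.mkstemp(dir=directory)
    try:
        # Timed sequential write, flushed to stable storage before the timer stops.
        start = time.perf_counter()
        with os.fdopen(fd, "wb") as f:
            for _ in range(blocks):
                f.write(block)
            f.flush()
            os.fsync(f.fileno())
        write_s = time.perf_counter() - start

        # Timed sequential read of the same file.
        start = time.perf_counter()
        total = 0
        with open(path, "rb") as f:
            while True:
                chunk = f.read(block_kb * 1024)
                if not chunk:
                    break
                total += len(chunk)
        read_s = time.perf_counter() - start
    finally:
        os.remove(path)

    mb = total / (1024 * 1024)
    return mb / write_s, mb / read_s
```

Something like `sequential_throughput("/mnt/SAN40", size_mb=4096)` would exercise the pool, assuming it is mounted at that path. The read figure is likely to be served from cache unless the file is much larger than RAM, so the write figure is the more meaningful one for before/after-reboot comparisons.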

I'm running 12 x 1 TB SATA drives in 4 RAIDZ sets, with a 300 GB SAS cache and a 300 GB SAS log device. I tried removing the log and cache devices and it doesn't seem to make a difference.

    NAME                                            STATE   READ WRITE CKSUM
    SAN40                                           ONLINE     0     0     0
      raidz1-0                                      ONLINE     0     0     0
        gptid/eabfb9ca-5ce5-11e7-8c44-005056a58f4f  ONLINE     0     0     0
        gptid/eb7edba4-5ce5-11e7-8c44-005056a58f4f  ONLINE     0     0     0
        gptid/ec6fe31b-5ce5-11e7-8c44-005056a58f4f  ONLINE     0     0     0
      raidz1-1                                      ONLINE     0     0     0
        gptid/ed288400-5ce5-11e7-8c44-005056a58f4f  ONLINE     0     0     0
        gptid/edd0e41b-5ce5-11e7-8c44-005056a58f4f  ONLINE     0     0     0
        gptid/ee87b10d-5ce5-11e7-8c44-005056a58f4f  ONLINE     0     0     0
      raidz1-2                                      ONLINE     0     0     0
        gptid/ef3605f6-5ce5-11e7-8c44-005056a58f4f  ONLINE     0     0     0
        gptid/efeddb61-5ce5-11e7-8c44-005056a58f4f  ONLINE     0     0     0
        gptid/f096d748-5ce5-11e7-8c44-005056a58f4f  ONLINE     0     0     0
      raidz1-3                                      ONLINE     0     0     0
        gptid/f15170d0-5ce5-11e7-8c44-005056a58f4f  ONLINE     0     0     0
        gptid/f20c7366-5ce5-11e7-8c44-005056a58f4f  ONLINE     0     0     0
        gptid/f2b85a7a-5ce5-11e7-8c44-005056a58f4f  ONLINE     0     0     0
    logs
      gptid/f4266076-882b-11e7-9bcc-005056a58f4f    ONLINE     0     0     0
    cache
      gptid/fc4a0dbc-882b-11e7-9bcc-005056a58f4f    ONLINE     0     0     0
    spares
      gptid/f36d4bb7-5ce5-11e7-8c44-005056a58f4f    AVAIL
