I recently moved to a new build, and I am guessing I made a configuration mistake somewhere, but I cannot find it. My issue is that my current TrueNAS install reports the full size of my datastore as used space. On my old install, TrueNAS reported slightly less used space than ESXi did, since I am using compression.

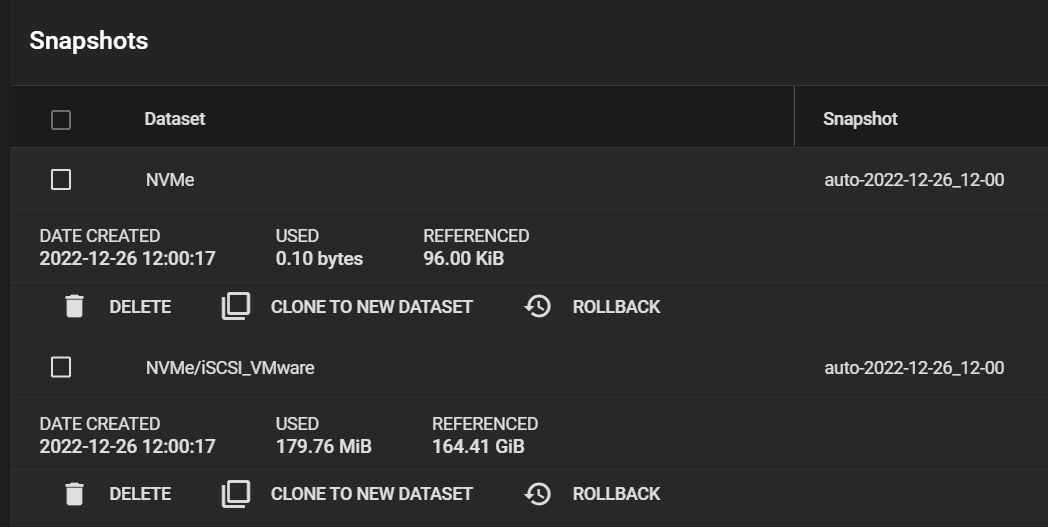

On top of that, my snapshots appear to take up the full referenced size....

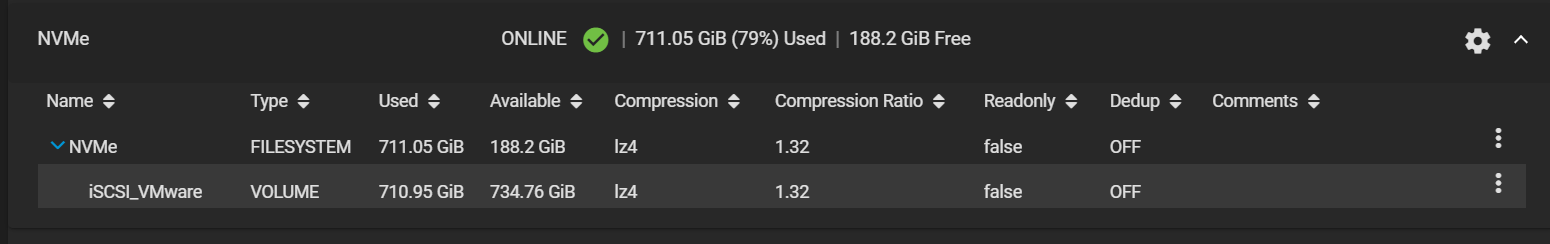

Here it is without any snapshots.

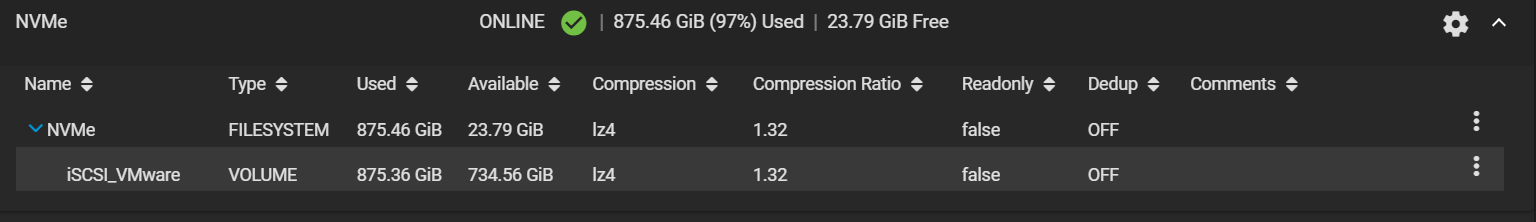

Now, after taking a snapshot, I get this.

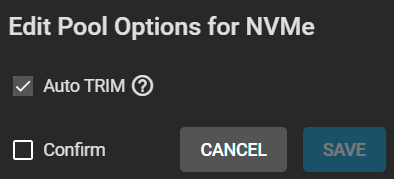

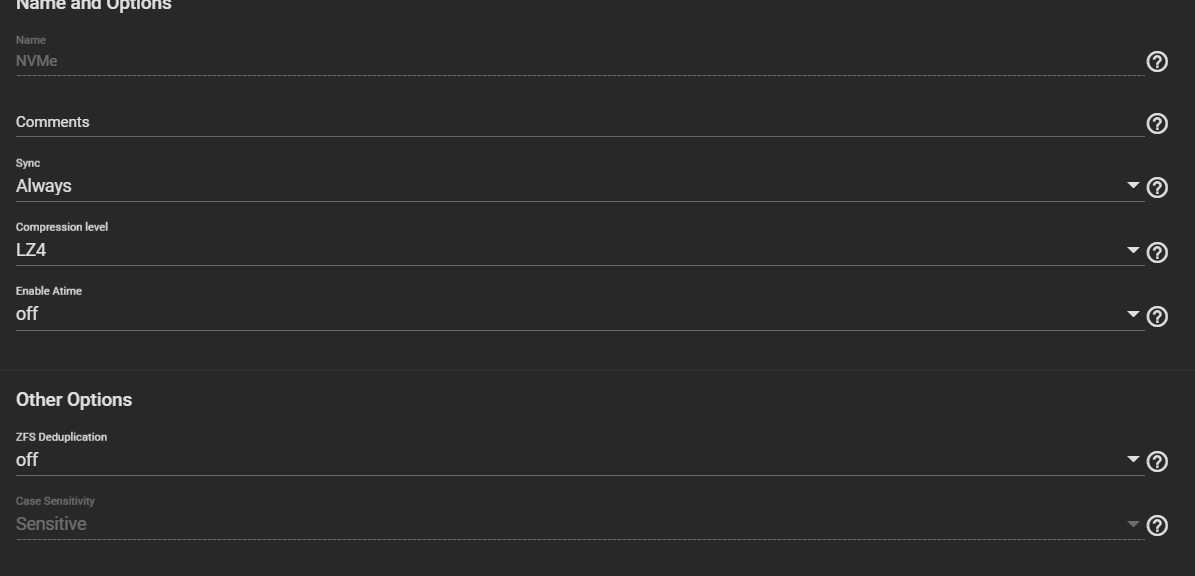

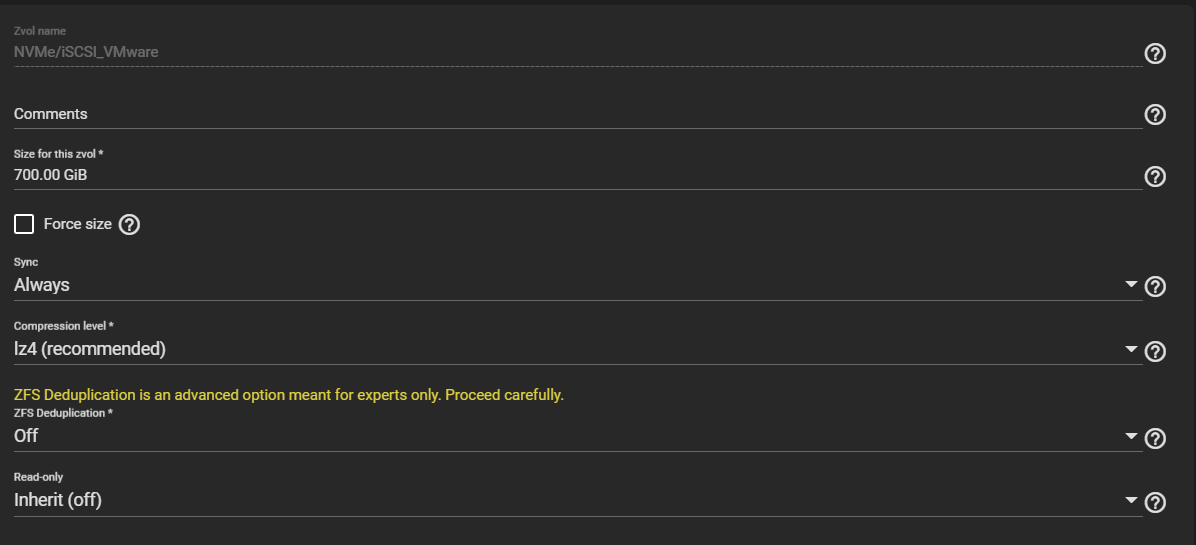

Here is how I have it configured.