Thibaut


Hello, this is somewhat related to this other thread (89487), but I think it deserves a separate discussion since it pinpoints an error occurring in the TrueNAS interface.

I'm using TrueNAS-12.0-U4, but the problem has also occurred on earlier v12 versions. I can't remember whether it happened under v11 as well, but I think it did.

The situation:

A drive fails in a raidz pool and the system raises an alert stating that it has to be replaced.

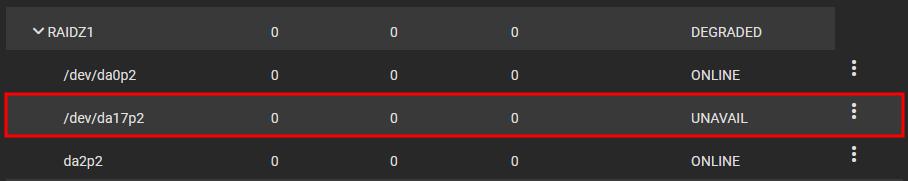

The failed hard disk is reported as UNAVAILABLE in the TrueNAS interface:

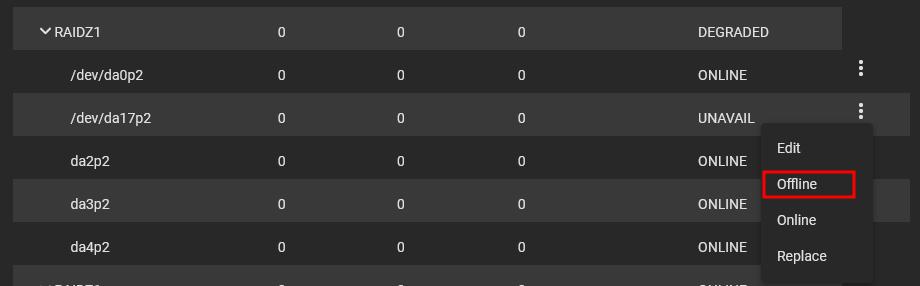

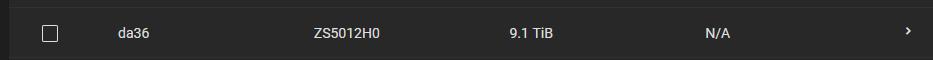

The failed hard disk is taken offline using the menu, and the action is confirmed in the dialog that pops up:

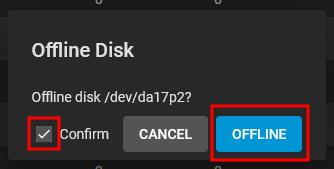

The failed hard disk is then removed from the server and physically replaced with a new hard disk, which then appears in the Storage > Disks list:

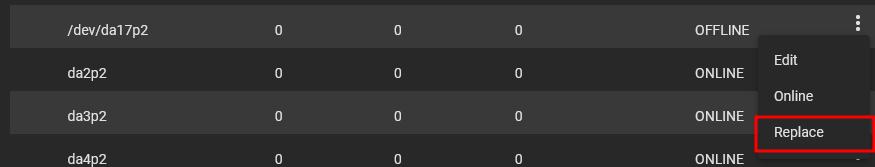

Finally, the failed disk's entry in the pool's disk list is replaced with the new disk:

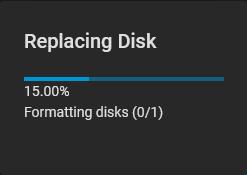

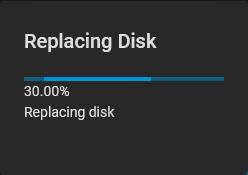

The replacement process starts:

The problem:

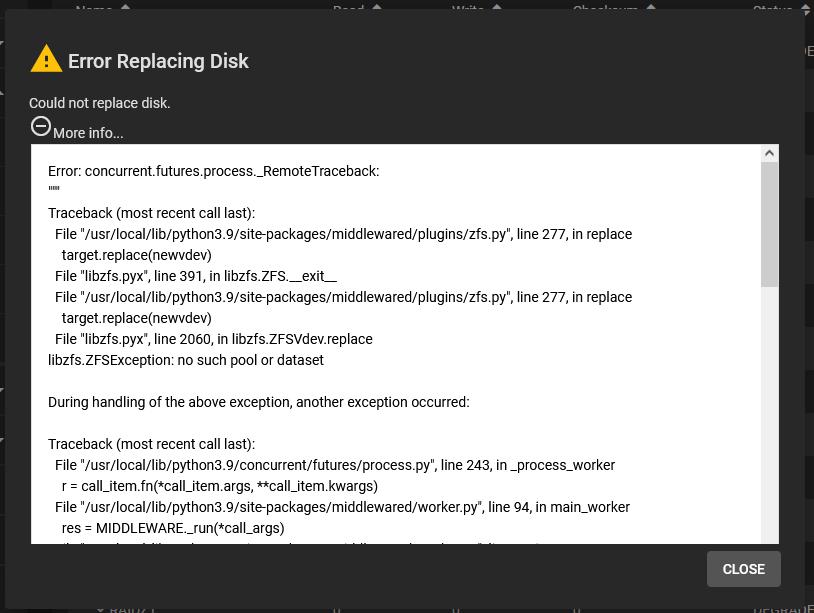

After a moment, an error is displayed:

The full content of the error report is:

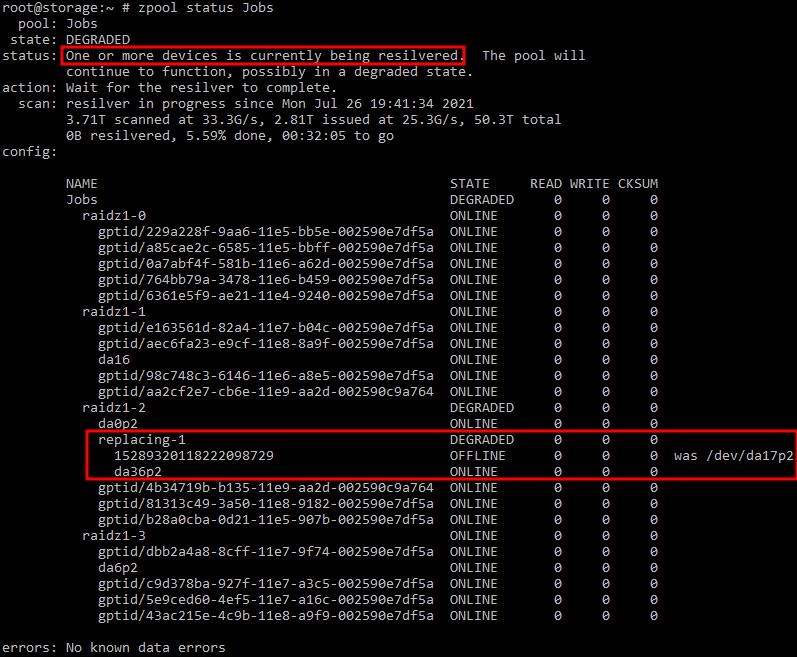

Although at this point it could seem that the whole disk replacement process has failed, the resilvering actually starts, as can be seen from the CLI with the `zpool status mypool` command.

At that point, the TrueNAS web interface does NOT report the resilvering as being in progress.

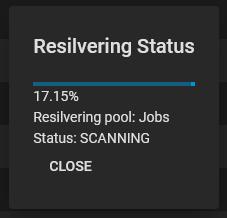

After a moment, reloading the TrueNAS web page shows that resilvering is in progress.
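Since the web interface lags behind the actual pool state here, one way to double-check from the shell is a small Python sketch like the following (an illustration only, not part of TrueNAS). It runs `zpool status` on the pool and looks for a `scan:` line containing `resilver in progress`, which is how OpenZFS reports a running resilver; that exact wording is an assumption and may differ between ZFS versions. The helper names `resilver_in_progress` and `check_pool` are hypothetical, and `mypool` is the pool name used above.

```python
import subprocess


def resilver_in_progress(status_text: str) -> bool:
    """Return True if `zpool status` output reports a running resilver.

    Assumes the OpenZFS wording "scan: resilver in progress ...";
    other ZFS versions may phrase this differently.
    """
    for line in status_text.splitlines():
        stripped = line.strip()
        # Only the "scan:" line describes scrub/resilver activity.
        if stripped.startswith("scan:") and "resilver in progress" in stripped:
            return True
    return False


def check_pool(pool: str = "mypool") -> bool:
    """Run `zpool status <pool>` and report whether it is resilvering.

    Raises subprocess.CalledProcessError if zpool exits with an error
    (for example, if the pool name does not exist).
    """
    result = subprocess.run(
        ["zpool", "status", pool],
        capture_output=True,
        text=True,
        check=True,
    )
    return resilver_in_progress(result.stdout)
```

For example, calling `check_pool("mypool")` from the TrueNAS shell right after the error dialog appears should return `True` if the resilver was started despite the error, even while the web page has not yet been reloaded.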

Reproducibility:

From what I have seen so far, this error doesn't occur every time a disk is replaced. Out of three disks replaced so far under v12, two showed this problem, while one went through without a glitch.

I currently have no clue what is causing the problem or why it happens, or whether it is a bug in the TrueNAS code.

Any idea regarding this would be welcome.

Thank you.


The full content of the error report is:

```
Error: concurrent.futures.process._RemoteTraceback:
"""
Traceback (most recent call last):
  File "/usr/local/lib/python3.9/site-packages/middlewared/plugins/zfs.py", line 277, in replace
    target.replace(newvdev)
  File "libzfs.pyx", line 391, in libzfs.ZFS.__exit__
  File "/usr/local/lib/python3.9/site-packages/middlewared/plugins/zfs.py", line 277, in replace
    target.replace(newvdev)
  File "libzfs.pyx", line 2060, in libzfs.ZFSVdev.replace
libzfs.ZFSException: no such pool or dataset

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "/usr/local/lib/python3.9/concurrent/futures/process.py", line 243, in _process_worker
    r = call_item.fn(*call_item.args, **call_item.kwargs)
  File "/usr/local/lib/python3.9/site-packages/middlewared/worker.py", line 94, in main_worker
    res = MIDDLEWARE._run(*call_args)
  File "/usr/local/lib/python3.9/site-packages/middlewared/worker.py", line 45, in _run
    return self._call(name, serviceobj, methodobj, args, job=job)
  File "/usr/local/lib/python3.9/site-packages/middlewared/worker.py", line 39, in _call
    return methodobj(*params)
  File "/usr/local/lib/python3.9/site-packages/middlewared/worker.py", line 39, in _call
    return methodobj(*params)
  File "/usr/local/lib/python3.9/site-packages/middlewared/schema.py", line 977, in nf
    return f(*args, **kwargs)
  File "/usr/local/lib/python3.9/site-packages/middlewared/plugins/zfs.py", line 279, in replace
    raise CallError(str(e), e.code)
middlewared.service_exception.CallError: [EZFS_NOENT] no such pool or dataset
"""

The above exception was the direct cause of the following exception:

Traceback (most recent call last):
  File "/usr/local/lib/python3.9/site-packages/middlewared/job.py", line 367, in run
    await self.future
  File "/usr/local/lib/python3.9/site-packages/middlewared/job.py", line 403, in __run_body
    rv = await self.method(*([self] + args))
  File "/usr/local/lib/python3.9/site-packages/middlewared/schema.py", line 973, in nf
    return await f(*args, **kwargs)
  File "/usr/local/lib/python3.9/site-packages/middlewared/plugins/pool_/replace_disk.py", line 122, in replace
    raise e
  File "/usr/local/lib/python3.9/site-packages/middlewared/plugins/pool_/replace_disk.py", line 102, in replace
    await self.middleware.call(
  File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1241, in call
    return await self._call(
  File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1206, in _call
    return await self._call_worker(name, *prepared_call.args)
  File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1212, in _call_worker
    return await self.run_in_proc(main_worker, name, args, job)
  File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1139, in run_in_proc
    return await self.run_in_executor(self.__procpool, method, *args, **kwargs)
  File "/usr/local/lib/python3.9/site-packages/middlewared/main.py", line 1113, in run_in_executor
    return await loop.run_in_executor(pool, functools.partial(method, *args, **kwargs))
middlewared.service_exception.CallError: [EZFS_NOENT] no such pool or dataset
```
