Hazer

Cadet

- Joined

- Jan 11, 2024

- Messages

- 9

Environment: TrueNAS-SCALE-23.10.1

HW Config:

AMD EPYC 7551P CPU

Supermicro H11SSL-I Motherboard (348 GB RAM slotted, 1x 4TB TeamData NVMe in M.2)

Broadcom / LSI SAS3008 PCI-Express Fusion-MPT SAS-3 (rev 02)

Supermicro NVMe Carrier board (4x TeamData 1TB NVMe Drives)

I've ordered a 45Drives HL15 case and plan to populate it with 12x Seagate Exos 2X14 Mach.2 (dual-actuator) drives. I already have the rest of the hardware and the drives, so I figured I'd test and verify the drives first, since they were bought on eBay. I bought an SFF-8643 to 4x SFF-8482 breakout cable with power, expecting to test 4 drives at a time. I connected the first 4 drives and I'm running into some oddities that I didn't expect.

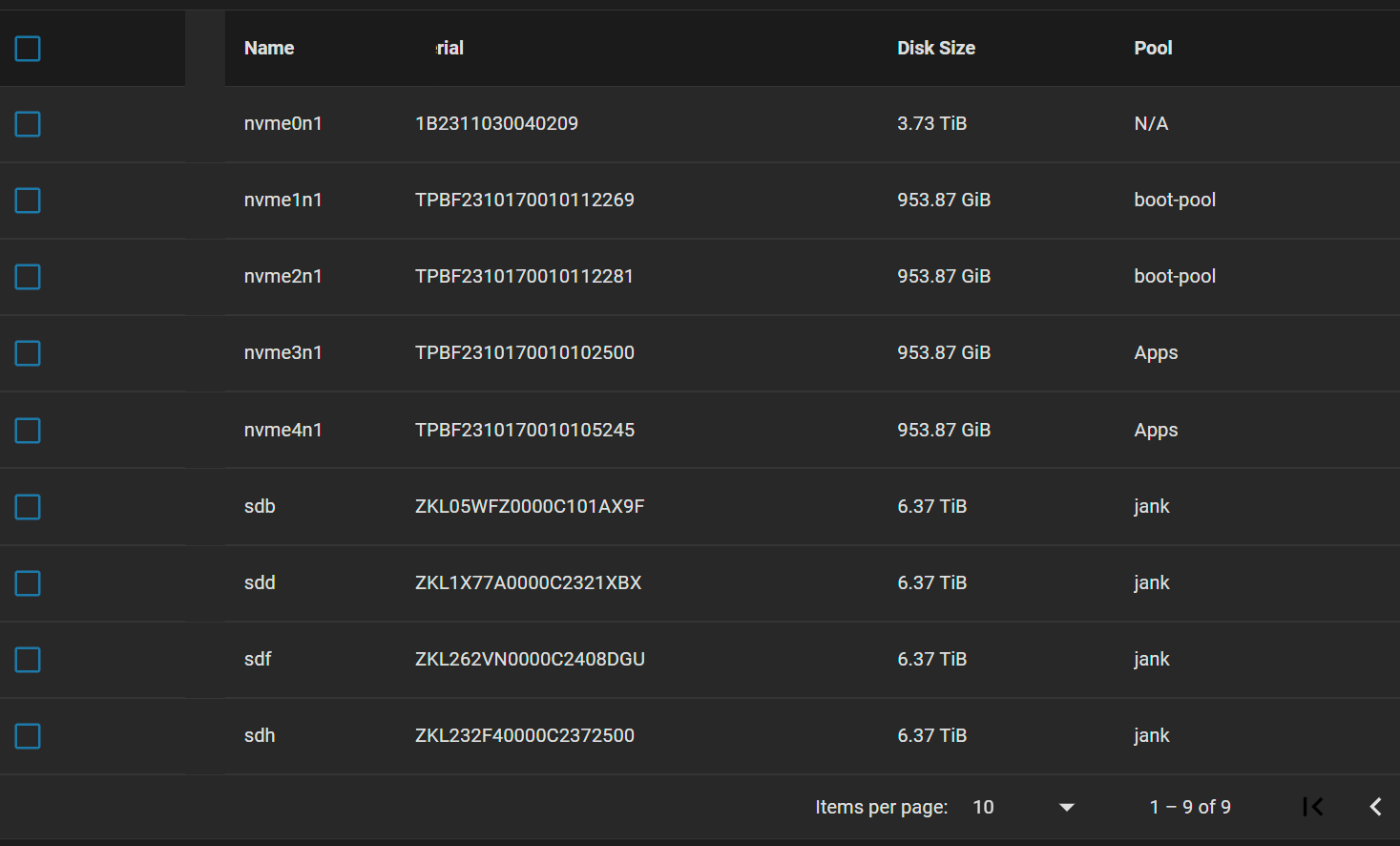

I expected to see 8 new drives in the system, sda through sdh (two per physical drive). In the GUI, after several restarts while troubleshooting, the best I see is below:

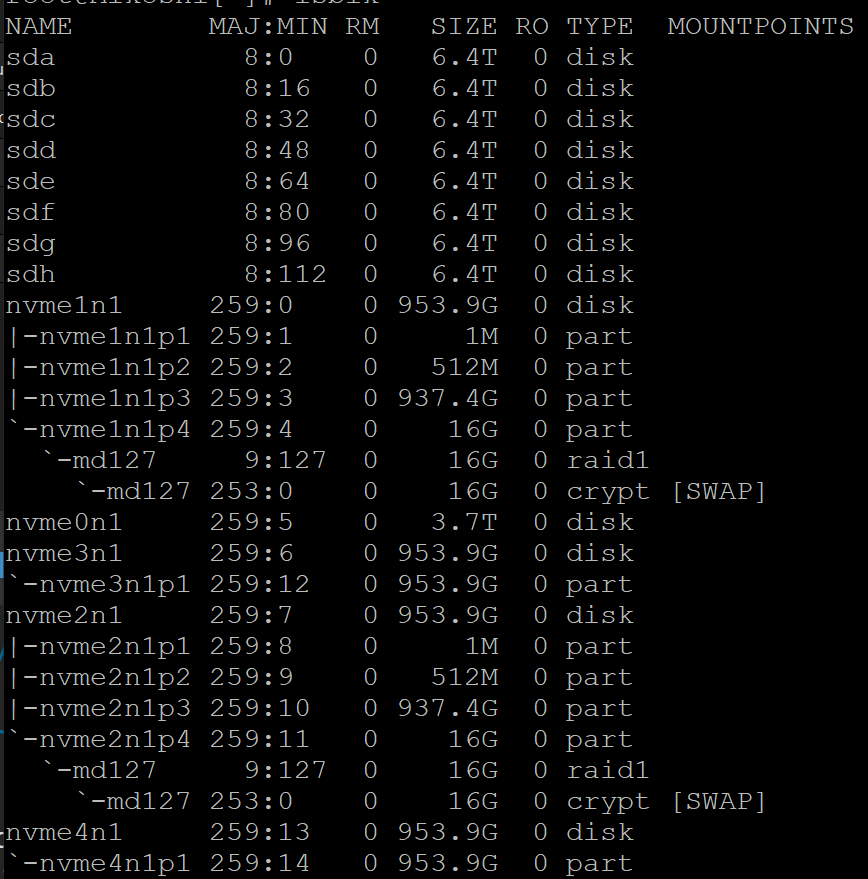

It appears to show only the "bottom" half of each drive. When I check `lsblk`, I do see all 8 drives as I would expect.

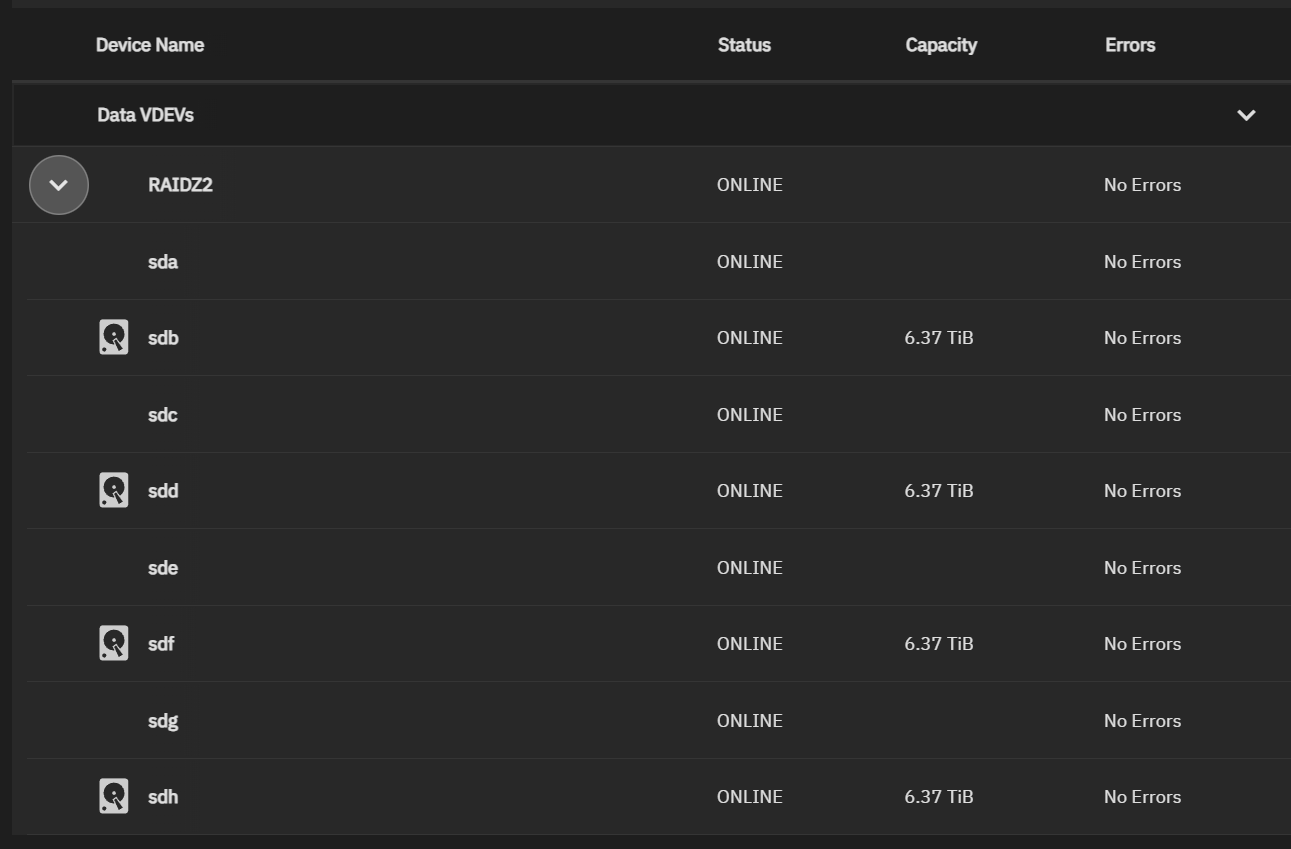
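A minimal sketch of one way to check which device nodes belong to the same physical drive. It assumes that both halves of a dual-actuator drive report the same serial number, which is an assumption and not something confirmed above. The helper names `group_by_serial`, `read_lsblk`, and `main` are made up for illustration; the only external command used is `lsblk` with its `-d`, `-J`, and `-o` options.

```python
import json
import subprocess
from collections import defaultdict


def group_by_serial(lsblk_json: str) -> dict[str, list[str]]:
    """Map each reported serial number to the device names that share it."""
    groups = defaultdict(list)
    for dev in json.loads(lsblk_json).get("blockdevices", []):
        # Devices that report no serial are collected under a placeholder key.
        serial = dev.get("serial") or "(no serial)"
        groups[serial].append(dev["name"])
    return dict(groups)


def read_lsblk() -> str:
    """Return lsblk output as JSON text (-d: whole disks only, -J: JSON)."""
    result = subprocess.run(
        ["lsblk", "-d", "-J", "-o", "NAME,SERIAL,SIZE,HCTL"],
        check=True,
        capture_output=True,
        text=True,
    )
    return result.stdout


def main() -> None:
    """Print one line per serial number with the devices that report it."""
    for serial, names in sorted(group_by_serial(read_lsblk()).items()):
        print(f"{serial}: {', '.join(sorted(names))}")
```

Calling `main()` from a Python shell on the NAS prints one line per serial. If the assumption holds, each physical drive would show up as one serial with two device names (for example `sda` and `sdb`); if every device has its own serial, the grouping has to be done some other way, such as by the `HCTL` column.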

I was able to build them into a RAIDZ2 pool from the CLI, then export it from the CLI so that I could import it in the GUI, and as shown below the results are a bit odd with respect to the members of this pool.

Have I missed something or misconfigured something that I'm not seeing? I *REALLY* do not want to have to build and manage the array from the CLI and only be able to SMART test half the spindles in the array.

I've tried searching for solutions to the GUI not showing all the expected drives, and most of them seem to trace back to questionable HW choices (usually RAID vs HBA, or USB enclosures). As far as I can tell, there should be no reason that the GUI is not picking up these drives as expected, unless there's something funky with the breakout cable. But then why is the OS actually seeing the drives, and why is ZFS happy with an array of them? I even ran a test writing out 1TB of data across that array (honestly, I mostly wanted to test speed) without any issues.
