icecoke


Hello everyone!

We are using four FreeNAS-11.3-U1 nodes (each with 128 GB RAM, 20 Xeon cores, 16 Intel enterprise SSDs and 10 Gbit/s NICs). Each node has a raidz3 pool with mirrored log devices and serves NFSv4 storage to a Xen cluster.

Overall performance is quite good, but ever since we set up the FreeNAS nodes we have seen the following effect:

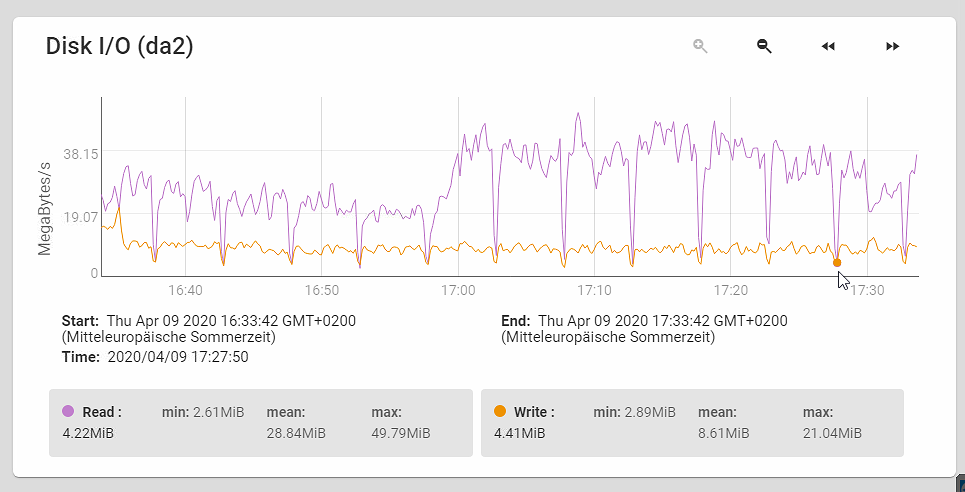

All traffic to and from the nodes drops exactly every 300 seconds. The drops are not aligned to the clock the way a `*/5` cron job would be (:00, :05, :10, ...); instead they recur exactly five minutes after the previous one (in the screenshot: 17:27:50, then 17:32:50, and so on).
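To make the cron-versus-timer distinction concrete, here is a minimal Python sketch that classifies a list of observed drop times. It assumes times are given as `HH:MM:SS` strings from a single day (no midnight rollover), and the 2-second `TOLERANCE` is a hypothetical allowance for graph sampling granularity. The third timestamp in the usage line is simply the "and so on" from the post extrapolated.

```python
from typing import List

PERIOD = 300     # seconds between observed drops (five minutes)
TOLERANCE = 2    # assumption: slack for graph/sampling granularity, in seconds


def to_seconds(hms: str) -> int:
    """Convert 'HH:MM:SS' to seconds since midnight (ignores day rollover)."""
    h, m, s = (int(part) for part in hms.split(":"))
    return h * 3600 + m * 60 + s


def classify_schedule(times: List[str]) -> str:
    """Return 'cron-aligned', 'fixed-interval' or 'irregular'.

    cron-aligned:   drops are 300 s apart AND sit on wall-clock 5-minute
                    boundaries (:00, :05, ...), as a '*/5' cron entry would.
    fixed-interval: drops are 300 s apart but start at an arbitrary offset,
                    as a timer started at some random moment would.
    """
    secs = [to_seconds(t) for t in times]

    def off_grid(x: int) -> int:
        # Distance (in seconds) to the nearest 5-minute wall-clock boundary.
        r = x % PERIOD
        return min(r, PERIOD - r)

    clock_aligned = all(off_grid(x) <= TOLERANCE for x in secs)
    gaps = [b - a for a, b in zip(secs, secs[1:])]
    fixed = bool(gaps) and all(abs(g - PERIOD) <= TOLERANCE for g in gaps)

    if fixed and clock_aligned:
        return "cron-aligned"
    if fixed:
        return "fixed-interval"
    return "irregular"


print(classify_schedule(["17:27:50", "17:32:50", "17:37:50"]))  # fixed-interval
```

A "fixed-interval" result points toward something driven by an internal timer that was started at an arbitrary moment rather than a cron schedule.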

There are no matching cron jobs on the Xen cluster, and we cannot find such a job in FreeNAS either. The effect is that all VMs nearly stop working for those few seconds, and the FreeNAS dashboard shows the bandwidth of the main NIC dropping each time as well (from ~300-500 MB/s down to a few KB/s). After a few seconds everything is back to normal.
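If throughput samples can be exported from the monitoring side, the exact spacing of the drops can be measured rather than read off a graph. This is a minimal sketch under stated assumptions: the samples are hypothetical `(unix_seconds, bytes_per_second)` pairs sorted by time, and the 1 MB/s `threshold` is an assumed cut-off chosen to sit between the normal ~300-500 MB/s and the kilobyte-level rates seen during a drop.

```python
from typing import List, Tuple


def find_drop_starts(samples: List[Tuple[int, float]], threshold: float) -> List[int]:
    """Return the timestamps at which throughput first falls below threshold.

    Consecutive low samples count as one drop; only its first sample is reported.
    """
    starts: List[int] = []
    below = False
    for ts, rate in samples:
        is_low = rate < threshold
        if is_low and not below:
            starts.append(ts)  # transition from normal to low throughput
        below = is_low
    return starts


def intervals(starts: List[int]) -> List[int]:
    """Seconds between consecutive drop starts."""
    return [b - a for a, b in zip(starts, starts[1:])]


# Synthetic (hypothetical) series: one sample every 10 s for 15 minutes,
# ~400 MB/s normally, ~1 KB/s for 20 s starting every 300 s.
samples = [(t, 1e3 if t % 300 in (170, 180) else 4e8) for t in range(0, 900, 10)]
starts = find_drop_starts(samples, threshold=1e6)
print(starts, intervals(starts))  # [170, 470, 770] [300, 300]
```

Intervals that stay at exactly 300 s across many hours would confirm a fixed timer rather than load-dependent stalls.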

We can't believe that this is normal and we would really, really appreciate any help and input regarding this!

Many thanks in advance!
