I am trying to migrate from OpenMediaVault (where I was already using a ZFS pool for storage) to TrueNAS Core, and I'm facing the issue that I can no longer access several terabytes of data after modifying the pool's mountpoint in TrueNAS. By "cannot access" I mean that the data is no longer visible. This is a rather complex issue, with a number of factors that may play a role, so I'll try to provide some context. (I am new to BSD/TrueNAS, so please bear with me.)

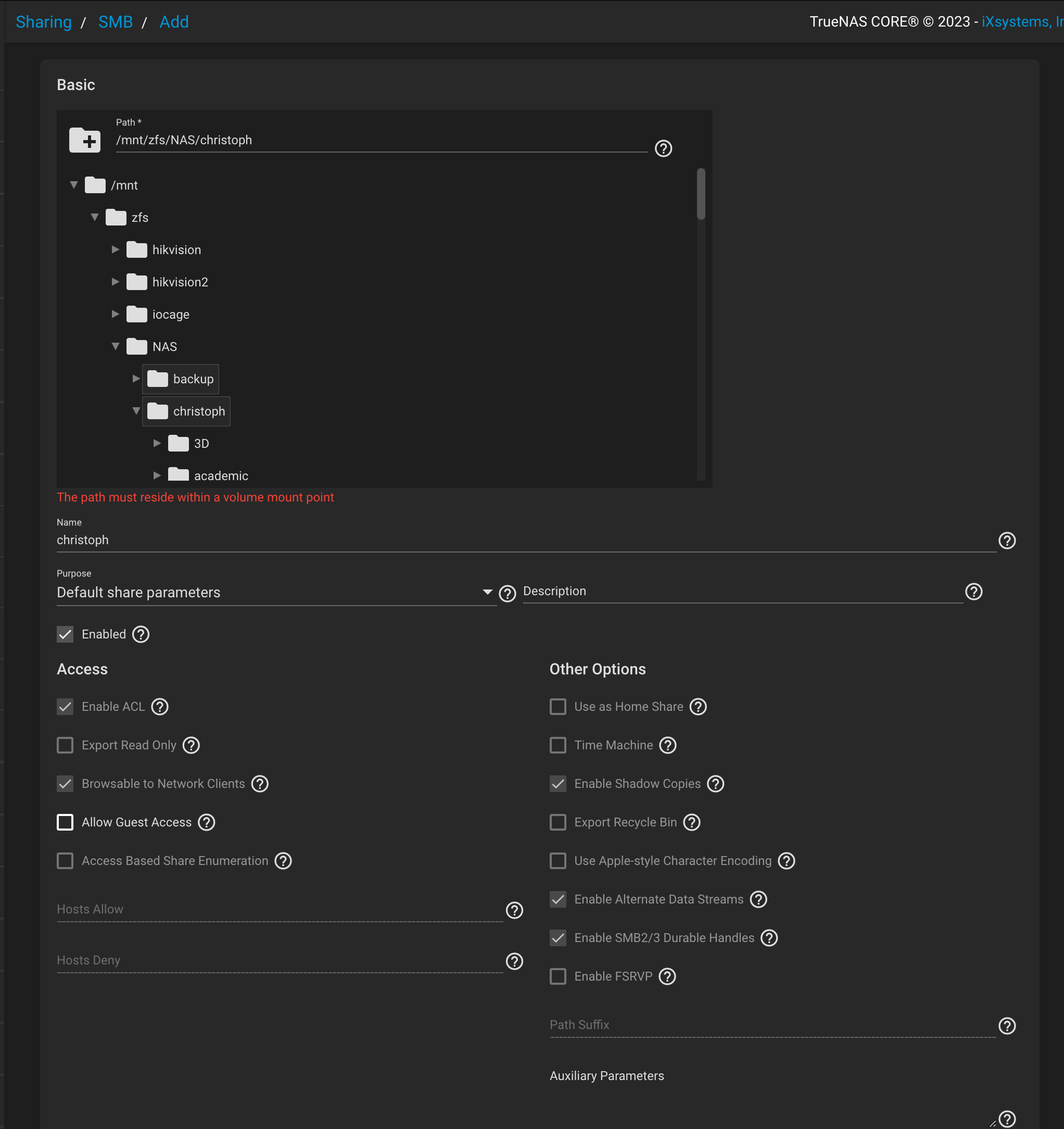

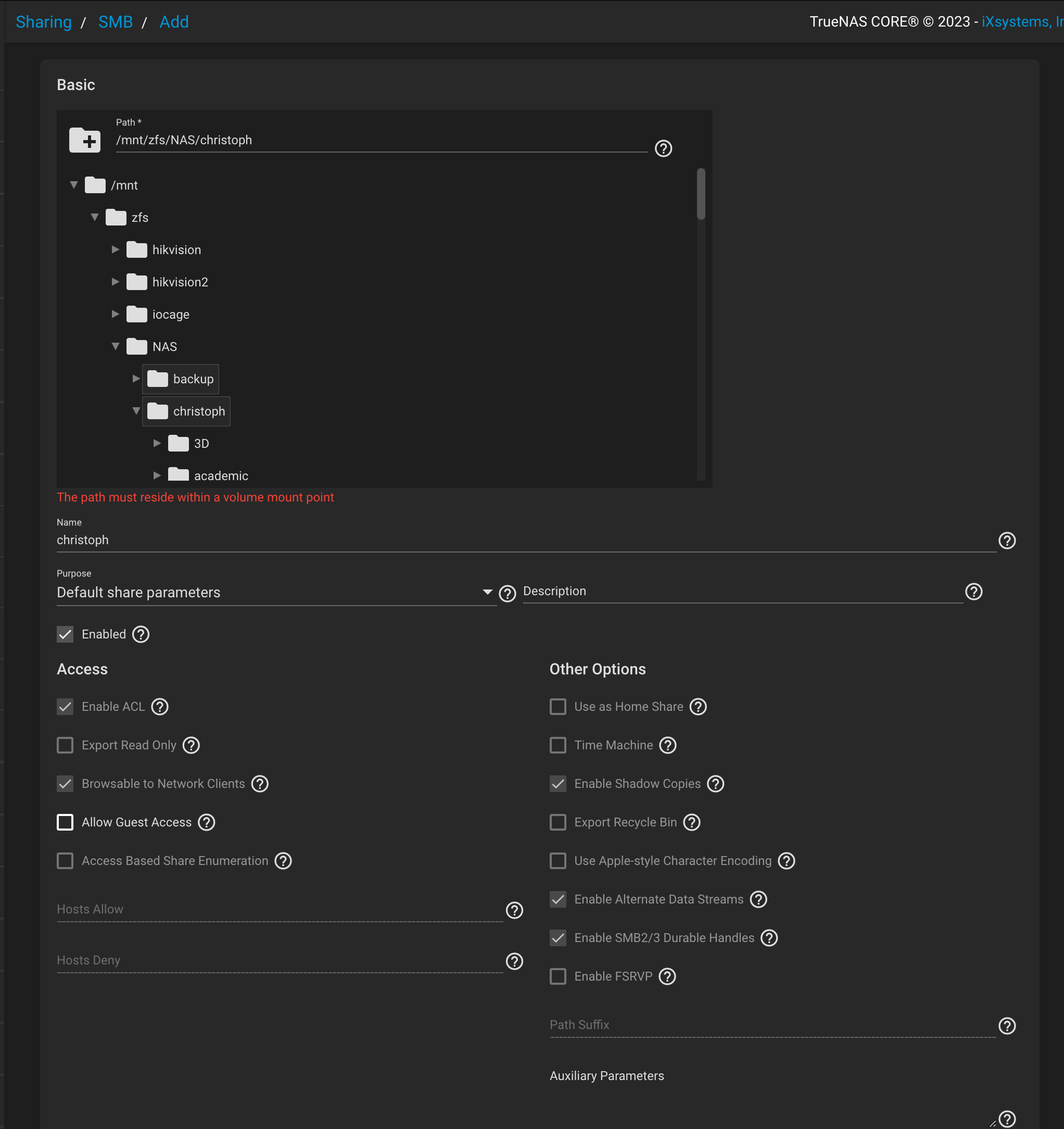

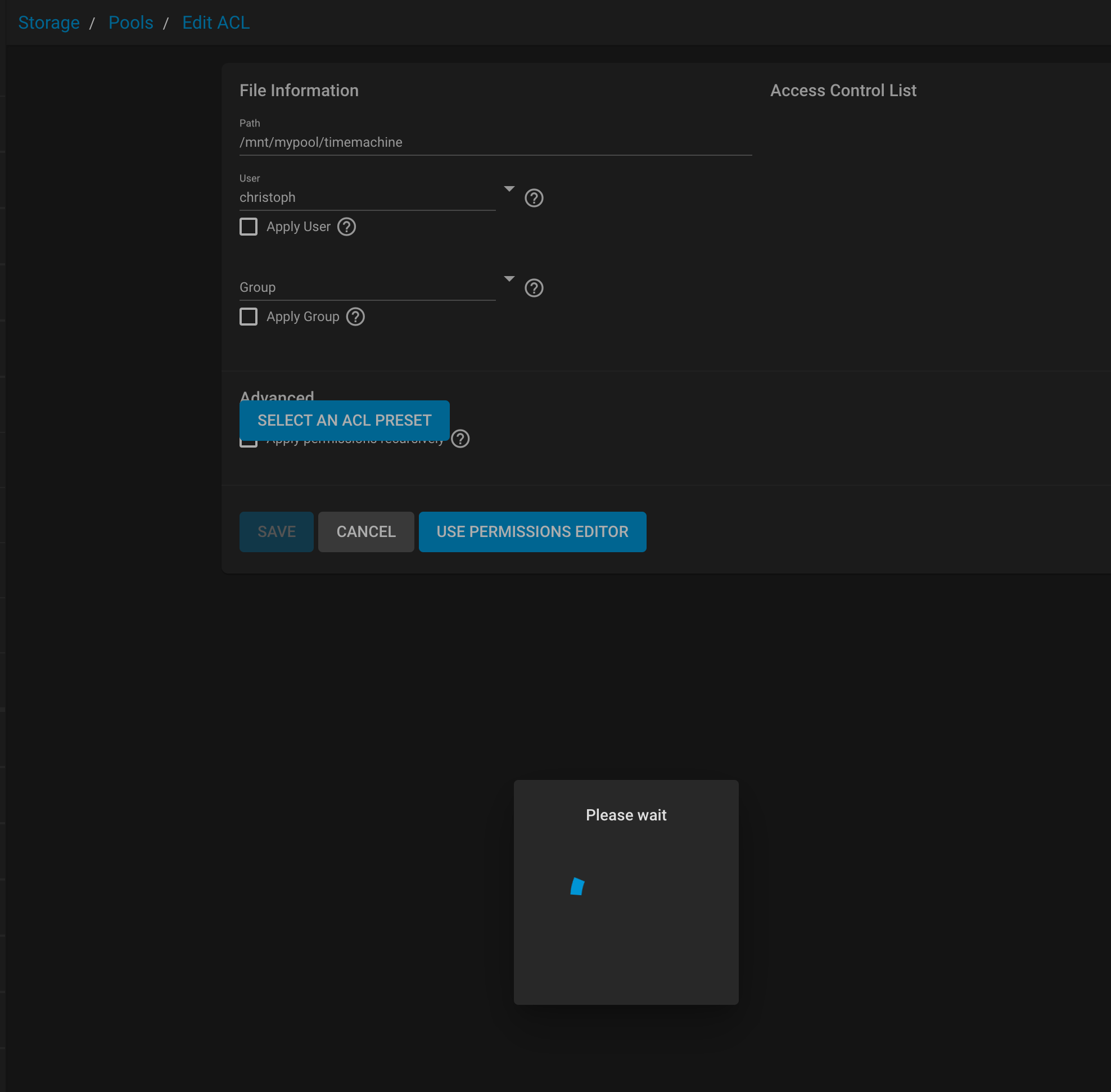

Once I had installed TrueNAS on a new SSD, I went ahead and imported my existing pool (`mypool`) via the TrueNAS UI. It seemed to go smoothly even though I did not export the pool on OMV before shutting it down. All datasets were accessible as expected.

But when I tried to recreate my SMB shares, it would complain that "the path must reside within a volume mount point":

I learned that the reason for this was a problem which has previously been described in this thread, namely that the name of the mount point did not include the name of the pool. As you can infer from the screenshot above, the mount point was `/mnt/zfs/` even though the pool is called `mypool`. (On OMV, the pool was mounted at `/zfs`.)

In order to fix this, I did the following:

Code:

    # zfs unmount mypool
    # zfs get mountpoint mypool
    NAME    PROPERTY    VALUE     SOURCE
    mypool  mountpoint  /mnt/zfs  local
    # zfs set mountpoint /mnt/mypool mypool
    # zfs get mountpoint mypool
    NAME    PROPERTY    VALUE            SOURCE
    mypool  mountpoint  /mnt/mnt/mypool  local
    # zfs set mountpoint=mypool mypool
    cannot set property for 'mypool': 'mountpoint' must be an absolute path, 'none', or 'legacy'
    # zfs set mountpoint=/mypool mypool
    # zfs get mountpoint mypool
    NAME    PROPERTY    VALUE        SOURCE
    mypool  mountpoint  /mnt/mypool  local
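My working assumption (not confirmed by anyone yet) for why `/mnt/mypool` turned into `/mnt/mnt/mypool` is that TrueNAS imports pools with an `altroot` of `/mnt`, and that ZFS prepends this altroot to whatever is stored in the `mountpoint` property. The little helper below only mimics that string logic so the three results in the transcript can be compared side by side. `effective_mountpoint` is a name I made up; it is not a ZFS command.

```shell
#!/bin/sh
# Sketch only: models how I *assume* the displayed mountpoint is derived
# from the pool's altroot plus the stored mountpoint property.
# effective_mountpoint is a hypothetical helper, not part of ZFS.
effective_mountpoint() {
    altroot=$1      # assumed to be /mnt on TrueNAS
    mountpoint=$2   # value passed to "zfs set mountpoint=..."

    # 'none' and 'legacy' are special values, not paths
    case $mountpoint in
        none|legacy) printf '%s\n' "$mountpoint"; return 0 ;;
    esac

    # Drop a trailing slash from the altroot before joining
    altroot=${altroot%/}
    printf '%s%s\n' "$altroot" "$mountpoint"
}

effective_mountpoint /mnt /zfs          # prints /mnt/zfs        (state after import)
effective_mountpoint /mnt /mnt/mypool   # prints /mnt/mnt/mypool (my first attempt)
effective_mountpoint /mnt /mypool       # prints /mnt/mypool     (what finally worked)
```

If this assumption is right, `zpool get altroot mypool` should report `/mnt` on my system.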

I then exported and re-imported the pool via the TrueNAS UI.

I was now able to create SMB shares, but a number of datasets no longer showed up. The data still seems to be there, as it is still taking up several TB of space, but I can't see it.
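To narrow down which datasets are affected, one thing I can check is whether they are simply not mounted. Below is a minimal sketch, assuming the tab-separated output of `zfs list -H -o name,mounted`; the `unmounted_datasets` function name is my own invention, and I have not yet verified that this is actually the cause.

```shell
#!/bin/sh
# Sketch: read "name<TAB>mounted" lines on stdin (the format produced by
# `zfs list -H -o name,mounted`) and print every dataset that is not mounted.
# unmounted_datasets is a hypothetical helper name.
unmounted_datasets() {
    awk -F '\t' '$2 != "yes" { print $1 }'
}

# Intended use on the NAS (left as a comment so the sketch runs anywhere):
#   zfs list -H -r -t filesystem -o name,mounted mypool | unmounted_datasets
```

Any dataset this prints exists in the pool but is not mounted anywhere, which would explain why it takes up space without being visible.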

Based on this post, my hunch is that the problem may be related to an issue I faced on OMV a couple of years ago, where data somehow got written into `/zfs/NAS` while the pool was not mounted (or something like that, I don't remember the details). At the time, I somehow solved the issue by creating `/zfs/NASx` as a temporary directory so that I was able to move the data (which had been written into `/zfs/NAS` and which would become invisible once the pool was mounted "on top of it") into the pool. Sorry, I don't remember more, but if this seems relevant, feel free to ask specific questions, and I may manage to remember.

Hoping that I might regain access to the missing datasets, I tried changing the mountpoint back to what it was, but that doesn't seem to be possible:

Code:

    # zfs set mountpoint=/zfs mypool
    cannot set property for 'mypool': child dataset with inherited mountpoint is used in a non-global zone
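Since the error talks about child datasets with an inherited mountpoint, it may help to see which datasets inherit their mountpoint from `mypool` and which have one set locally. My guess (unverified) is that children with a locally set mountpoint did not follow the change I made on the pool. Here is a rough sketch, assuming the tab-separated output of `zfs get -H -o name,value,source mountpoint`; `local_mountpoints` is a made-up helper name.

```shell
#!/bin/sh
# Sketch: read "name<TAB>value<TAB>source" lines on stdin (the format of
# `zfs get -H -o name,value,source mountpoint`) and print the datasets
# whose mountpoint is set locally rather than inherited.
# local_mountpoints is a hypothetical helper name.
local_mountpoints() {
    awk -F '\t' '$3 == "local" { printf "%s\t%s\n", $1, $2 }'
}

# Intended use on the NAS (left as a comment so the sketch runs anywhere):
#   zfs get -H -r -t filesystem -o name,value,source mountpoint mypool | local_mountpoints
```

As far as I can tell, `zfs get -r -s local mountpoint mypool` should give the same filtering directly, without the helper.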

Is there any way of fixing this?