I used to run FreeNAS, then TrueNAS (now CORE, of course), and several months ago I migrated to SCALE. I really like the Debian base, which allows for Docker integration and better virtualization, so I didn't migrate back... but...

Quick history:

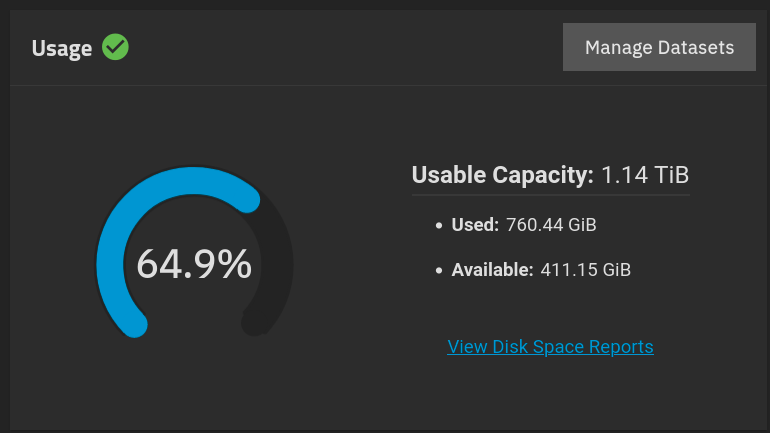

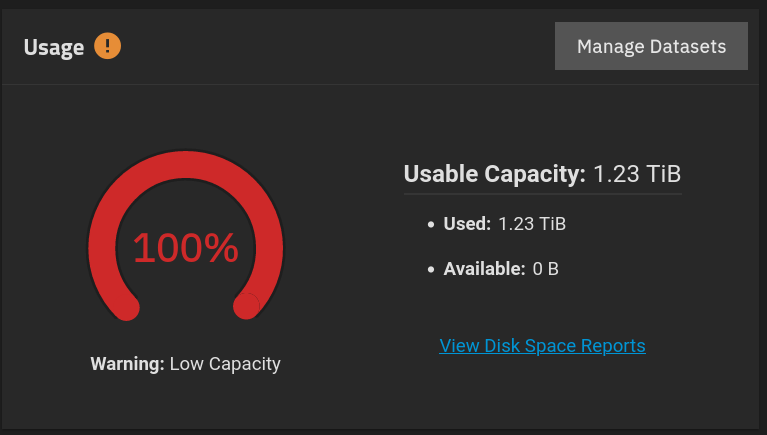


- When I upgraded in place from CORE to SCALE, it bombed: kernel panic on every boot.

- I reinstalled SCALE, imported the pool, and reconfigured all services.

- When I set up snapshots, the pool wedged at 100% full, 0 bytes free.

- I had to 'zfs send' all datasets to a new pool. No way to delete from a full COW filesystem.

- I avoided setting up snapshots so this wouldn't happen again.

I created a snapshot task for the pool, scheduled for midnight (it was 10:30 PM at the time). I then created a replication task to send the snapshots to the second pool on the same system (for now). About 10 minutes later, I saw the pool was at 0 bytes free... The snapshot task wasn't even SCHEDULED to run yet, since it isn't midnight yet... but now the pool is wedged, and I can't delete any snapshots to free it up:

Code:

    root@nas[/mnt/raid1]# zfs destroy sas15k/dsVMs/xcp-ng@auto-2022-12-27_22-28
    internal error: cannot destroy snapshots: Channel number out of range
    zsh: abort (core dumped)  zfs destroy sas15k/dsVMs/xcp-ng@auto-2022-12-27_22-28
    root@nas[/mnt/raid1]#

So, is SCALE still not ready for snapshots? It's quite ironic that snapshots (used for protecting data) are leaving a pool mostly unusable. At least my VMs are still running, but I cannot create or delete anything on the pool.

What options do I have other than backing up, recreating the pool, and restoring?

Thanks!