denis_photographer

Dabbler

- Joined: Nov 4, 2021
- Messages: 18

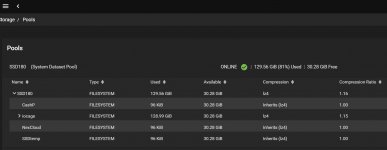

I cannot solve this issue: the system has a single disk holding the system dataset, with a ZFS pool named "SSD180". Using Windows, I cloned it one-to-one onto a larger disk and booted from the new drive. The web interface still shows the same 180 GB.

Nextcloud is installed on this system disk, and I need all the data on it exactly as it is now, so I resorted to cloning.

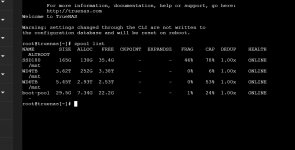

My understanding of this is very limited; when I built the NAS, I did everything step by step, reading the forum. But now I am stuck on a specific problem. How can I add the unallocated space on my new disk to the existing pool and make the web interface show the larger size? If possible, please give the exact commands one by one, because nothing I have read and tried so far has worked. Most likely I am doing something wrong. Even the `zpool status` command only shows the pool SSD180 (that is its name), but it doesn't show any gptids. The disk is not mirrored. I just need to replace it with a larger disk, but I don't know how.
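
For reference, the sequence commonly suggested on TrueNAS CORE (FreeBSD) for growing a single-disk pool after a one-to-one clone can be sketched as a small Python script that only prints the commands and runs nothing. It assumes a FreeBSD-based system, a hypothetical device name `ada0`, and that the ZFS data sits on partition index 2 (after a swap partition); `build_commands` is a hypothetical helper. None of this is verified against this particular system, so the real device and partition names should be checked with `gpart show` and `zpool status` first.

```python
"""Dry-run planner: prints (does not execute) commands often used on
TrueNAS CORE / FreeBSD to grow a single-disk ZFS pool after cloning it
onto a larger disk. Device name and partition index are assumptions."""

from typing import List


def build_commands(disk: str, zfs_partition_index: int, pool: str) -> List[str]:
    """Return the shell commands, in order, for the given disk and pool.

    disk                -- hypothetical device name, e.g. "ada0"
    zfs_partition_index -- assumed index of the ZFS partition (often 2)
    pool                -- pool name, e.g. "SSD180"
    """
    # Assumption: the vdev name in `zpool status` is "<disk>p<index>".
    # If `zpool status` lists a different name, use that name instead.
    partition = f"{disk}p{zfs_partition_index}"
    return [
        # Inspect the layout; free space should show up at the end of the disk.
        f"gpart show {disk}",
        # A one-to-one clone leaves the backup GPT header where the old,
        # smaller disk ended; "recover" rewrites it at the real end.
        f"gpart recover {disk}",
        # Grow the ZFS partition; without -s it takes all free space after it.
        f"gpart resize -i {zfs_partition_index} {disk}",
        # Ask ZFS to expand the vdev to the new partition size.
        f"zpool online -e {pool} {partition}",
        # Check that the SIZE column now reflects the larger disk.
        f"zpool list {pool}",
    ]


if __name__ == "__main__":
    # Print the plan for review; nothing is run automatically.
    for cmd in build_commands("ada0", 2, "SSD180"):
        print(cmd)
```

Printing instead of executing keeps the script harmless: each command can be compared against the actual `gpart show` output before being typed in by hand.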

I would be very grateful for help in resolving this issue.