ctag, Patron. Joined: Jun 16, 2017. Messages: 225.

This thread is a spiritual successor to my FreeNAS build thread.

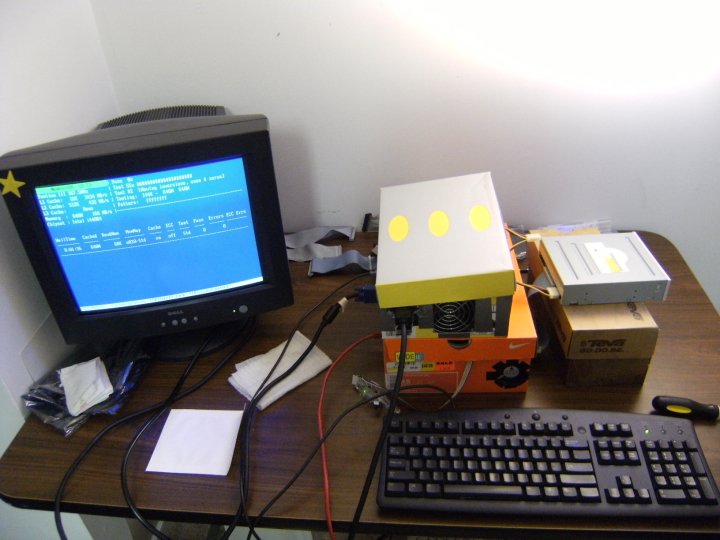

In middle school I would visit my local thrift store and pick apart donated PCs to buy components, eventually assembling my own desktop from the bits. Not knowing any better, I housed it in a shoebox.

I enjoyed the DIY, but it came with some challenges. In high school I started the typical home photo and video collection for school trips and projects I tinkered on. When it was almost entirely lost in a hard drive failure, I felt the unexpected punch in the gut that comes with real loss, and a new door to hobby adventures was opened: how do I keep intact these things that have value only to me?

The solutions varied for a while. But in early 2018 I settled on FreeNAS, and I've been benefiting from the hard work that goes into FreeNAS/TrueNAS and the support of this community ever since. So as I start this next chapter of my not-quite-a-NAS-blog, I'd like to highlight how grateful I am to the various forum members and iX contributors that have made my DIY data backup journey possible.

Ever since the scrounging around in middle school, my computer hostnames have been tributes to fictional spacecraft. And while it seems almost too corny to continue now, I'm still sticking with it. My TrueNAS-powered machine is called Citadel, after the fictional hub world in Mass Effect.

Citadel started out as a repurposed Dell T7500 tower server with FreeNAS installed and four 8TB hard drives for 20TB of usable space. Today it's the same Dell server running TrueNAS Cobia, with eight 8TB drives in a 41TB pool, along with an SSD pool and a secondary CPU card for virtualized services.
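For anyone wondering how a stack of 8TB drives turns into those usable figures, here is a rough back-of-the-envelope sketch. The parity counts are assumptions, since the pool layout isn't stated above: one drive's worth of parity for the original four-drive pool and two drives' worth for the eight-drive pool. The function name is made up for illustration. The sketch only converts vendor terabytes (10^12 bytes) into binary tebibytes (2^40 bytes) after subtracting parity. The rest of the gap down to the stated 20TB and 41TB would plausibly be ZFS overhead, which this does not model.

```python
TB = 10**12   # vendor terabyte, in bytes
TIB = 2**40   # binary tebibyte, in bytes


def raw_data_capacity_tib(total_drives, parity_drives, drive_size_tb):
    """Capacity of the non-parity drives in TiB, before any ZFS overhead."""
    data_drives = total_drives - parity_drives
    return data_drives * drive_size_tb * TB / TIB


# Assumed layouts (not confirmed by the post): 1 parity drive, then 2.
original = raw_data_capacity_tib(total_drives=4, parity_drives=1, drive_size_tb=8)
current = raw_data_capacity_tib(total_drives=8, parity_drives=2, drive_size_tb=8)

print(f"4 x 8TB, 1 parity: {original:.1f} TiB before ZFS overhead")
print(f"8 x 8TB, 2 parity: {current:.1f} TiB before ZFS overhead")
```

Under those assumptions both results land a little above the usable sizes quoted above, which is the direction you'd expect once filesystem overhead is taken off the top.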
