We have a backup system consisting of a Supermicro server and two Supermicro JBODs. Here is some system info:

FreeNAS version: FreeNAS-11.3-U3.2

Server:

Manufacturer: Supermicro

Product Name: SYS-5019P-WTR

Bios Vendor: American Megatrends Inc.

Bios Version: 3.2

Bios Release: 10/17/2019

JBOD1:

Vendor: Supermicro

JBOD2:

Vendor: Supermicro

My system keeps crashing, and I suspect a faulty drive is the cause. Here is part of the log related to the error:

Sep 27 14:30:40 backupserverMTP syslog-ng[2659]: syslog-ng starting up; version='3.23.1'

Sep 27 14:30:40 backupserverMTP kernel: pid 1461 (syslog-ng), jid 0, uid 0: exited on signal 6 (core dumped)

Sep 27 14:32:46 backupserverMTP smartd[1705]: Device: /dev/da104, SMART Failure: DATA CHANNEL IMPENDING FAILURE DATA ERROR RATE TOO HIGH

Sep 27 14:34:39 backupserverMTP collectd[1194]: Traceback (most recent call last):

File "/usr/local/lib/collectd_pyplugins/disktemp.py", line 46, in init

self.powermode = c.call('smart.config')['powermode']

File "/usr/local/lib/collectd_pyplugins/disktemp.py", line 45, in init

self.smartctl_args = c.call('disk.smartctl_args_for_devices', self.disks)

File "/usr/local/lib/python3.7/site-packages/middlewared/client/client.py", line 386, in call

So the faulty disk is da104.
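For anyone who wants to check their own log the same way, here is a minimal sketch of how the device name can be pulled out of smartd failure lines. It assumes the line format shown in the log above; the function name `failing_devices` and the regular expression are my own illustration, not part of FreeNAS or smartmontools.

```python
import re

# Assumed shape of a smartd failure line, based on the log excerpt above.
SMART_FAILURE = re.compile(
    r"smartd\[\d+\]: Device: /dev/(\w+), SMART Failure: (.+)"
)


def failing_devices(lines):
    """Return {device: [failure messages]} for every smartd failure line."""
    failures = {}
    for line in lines:
        match = SMART_FAILURE.search(line)
        if match:
            device, message = match.groups()
            failures.setdefault(device, []).append(message.strip())
    return failures


# Two lines copied from the log excerpt above.
sample = [
    "Sep 27 14:30:40 backupserverMTP syslog-ng[2659]: syslog-ng starting up; version='3.23.1'",
    "Sep 27 14:32:46 backupserverMTP smartd[1705]: Device: /dev/da104, "
    "SMART Failure: DATA CHANNEL IMPENDING FAILURE DATA ERROR RATE TOO HIGH",
]

print(failing_devices(sample))
```

On a real system the lines would come from the system log file instead of the `sample` list; the exact path depends on the syslog configuration.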

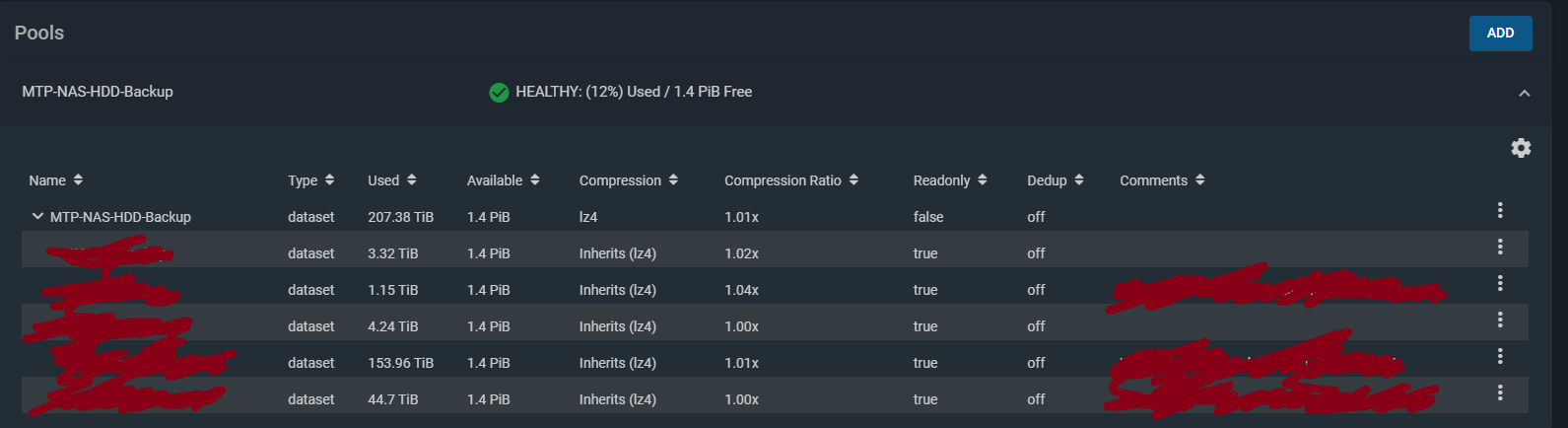

All the disks in the two JBODs are part of a RAIDZ2 pool. Here is the high-level structure:

The detailed structure is as follows:

Every RAIDZ2 above contains 30 HDDs (Seagate Exos X16 14TB).

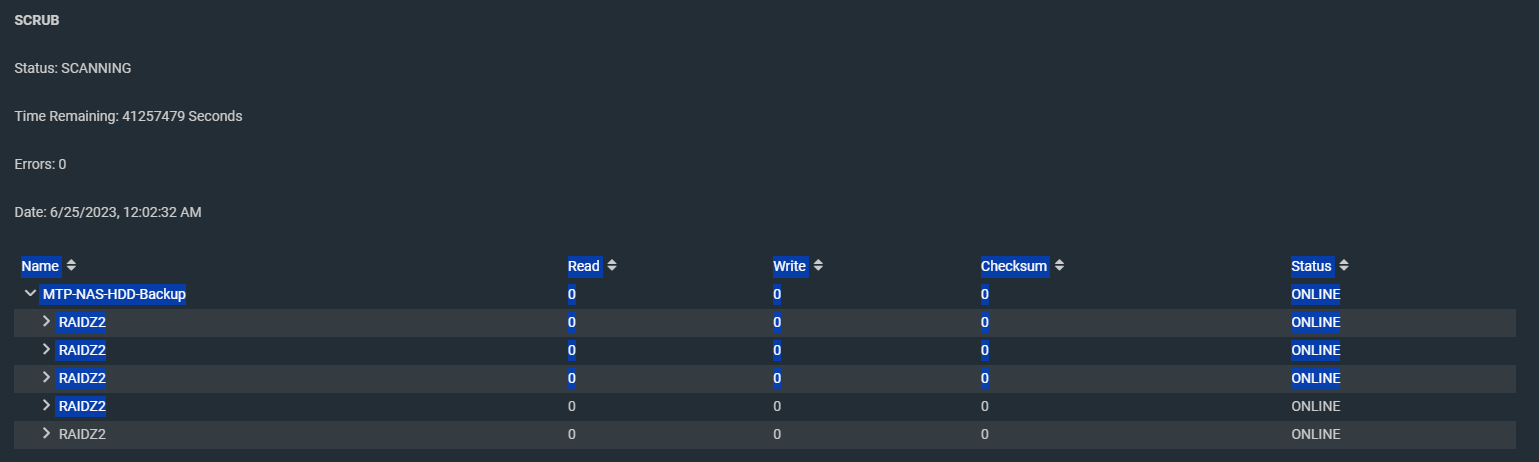

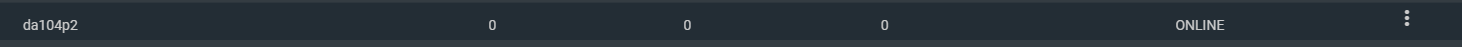

The faulty disk (da104) does not show an error in the FreeNAS GUI:

Obviously, I want to replace disk da104. However, when I try to take the disk offline via the FreeNAS GUI, I am not successful: the operation takes a long time and the system crashes before it completes. At least, this is what I understand to be happening.

What additional info do you need, and what is your recommendation for replacing the disk properly if I cannot offline it? Or how might I be able to offline it?
