Johan Palsson (Cadet, joined Jan 28, 2016, 4 messages)

Hello,

I am a bit confused. I have been migrating data from a physical server with 4 physical data disks, one 2TB volume per disk (a total of 8TB of data, formatted as NTFS).

My target is a ProLiant MicroServer with 4 physical 4TB disks, no hardware RAID configured (redundancy is best handled by ZFS), and 10 GB RAM.

On this machine I have a running installation of "FreeNAS-9.3-CURRENT-201503161938".

The 4 disks are visible in the FreeNAS GUI and are set up as a RAIDZ1.

After that, 4 zvols were created, each with a size of 2T.

These volumes are shared via iSCSI, 4 volumes in all.

There was no problem mounting them on the source server, partitioning them, and formatting them at 2TB each.

Writing to them was no problem either: performance was good and everything was going smoothly, I thought.

But when I had copied around 7.8TB from the source server, all the iSCSI disks suddenly dropped.

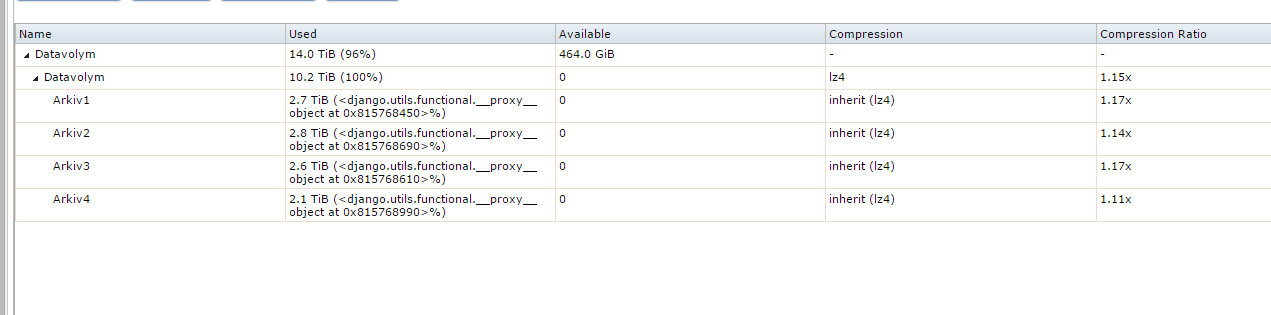

When I look in the FreeNAS GUI, I see "CRITICAL: The capacity for the volume 'Datavolym' is currently at 96%, while the recommended value is below 80%."

But why?

And how is "Used" calculated in the volume view? (see below)

I had thought that four 4TB disks in RAIDZ1 would be sufficient to store 8TB of data, but apparently (with my configuration) that was not enough.
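
The back-of-the-envelope arithmetic behind that expectation can be sketched as below. This is only a naive estimate: it assumes RAIDZ1 costs exactly one disk of parity, that the 4TB disks are decimal terabytes, and that the "2T" zvol size means 2 TiB. It ignores ZFS metadata and any other allocation overhead, so it does not explain the 96% figure. The function name is made up for illustration.

```python
TB = 10**12   # decimal terabyte, as printed on disk labels (assumption)
TIB = 2**40   # binary tebibyte, assumed meaning of "2T" for the zvols


def raidz_naive_usable_bytes(disks, disk_bytes, parity=1):
    """Naive usable capacity: total space minus `parity` disks' worth.

    Hypothetical helper; ignores metadata and allocation overhead.
    """
    return (disks - parity) * disk_bytes


usable = raidz_naive_usable_bytes(disks=4, disk_bytes=4 * TB)
zvol_total = 4 * 2 * TIB            # four zvols of 2T each
ratio = zvol_total / usable         # share of the pool the zvols would fill

print(f"naive usable capacity: {usable / TIB:.2f} TiB")
print(f"total zvol size:       {zvol_total / TIB:.2f} TiB")
print(f"naive fill ratio:      {ratio:.1%}")
```

By this estimate the four zvols would occupy roughly 73% of the pool, under the recommended 80%, which is why the 96% warning was unexpected.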

With best regards

Johan
