Yeah, you want a number that's appropriately sized. In the old days (~2008), a decent Solaris system had 16GB of RAM, and an SSD like the Intel X25-M 80GB had a sequential write speed of about 70MBytes/sec. You don't really want to push your L2ARC device to the point of being completely busy, so the defaults are quite reasonable for an old SSD like the X25-M.
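For a rough sense of scale, here is the arithmetic for that old setup. This is a sketch under assumptions not stated above: the OpenZFS defaults of 8 MiB for both `l2arc_write_max` and `l2arc_write_boost`, a 1-second `l2arc_feed_secs` interval, and boost being added on top of write_max only while the L2ARC is still cold. Check your platform's documentation for its actual defaults.

```python
MIB = 1024 * 1024
MB = 1000 * 1000

# ASSUMED OpenZFS defaults (bytes per feed interval); not taken from the post.
L2ARC_WRITE_MAX = 8 * MIB
L2ARC_WRITE_BOOST = 8 * MIB
FEED_SECS = 1  # assumed l2arc_feed_secs

X25M_SEQ_WRITE = 70 * MB  # bytes/sec, the X25-M figure from the post


def l2arc_fill_rate(warming_up: bool) -> float:
    """Bytes/sec the L2ARC feed may write under the assumed defaults."""
    per_interval = L2ARC_WRITE_MAX + (L2ARC_WRITE_BOOST if warming_up else 0)
    return per_interval / FEED_SECS


def device_utilization(warming_up: bool) -> float:
    """Fraction of the X25-M's rated sequential write speed used by the feed."""
    return l2arc_fill_rate(warming_up) / X25M_SEQ_WRITE


for warm in (False, True):
    print(f"warming_up={warm}: {device_utilization(warm):.0%} of X25-M write bandwidth")
```

Under these assumptions the feed uses roughly 12% of the drive's rated write speed normally and roughly 24% while warming up, which leaves the device far from completely busy.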

One of the things you really need to realize is that the goal here isn't to maximize these values. You don't want or need to evict every possible thing from the ARC to the L2ARC; rather, you'd like the most USEFUL stuff to be what lands there. That means a somewhat-too-small window is actually fine: the L2ARC will still warm up, it will just take longer. What you don't want is a window so stupid-small that the L2ARC cannot function correctly.

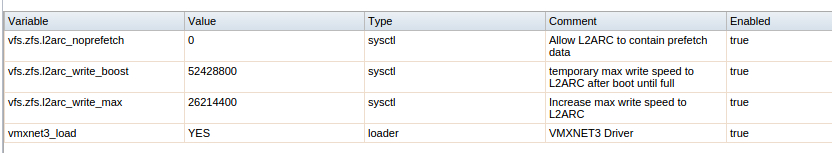

When you look at the scale of a modern system, 128GB of RAM is 8x the 16GB of old, a 512GB SSD is 6.4x the 80GB of old, and the 1500MBytes/sec write speed of a modern NVMe SSD is about 21x the 70MBytes/sec of old. But you'll notice that I'm not jacking up the L2ARC rate by the strict ratio of new SSD speed to old SSD speed. It's probably more useful to look at the overall scale of things, so my numbers are just a factor of 8x.
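The ratios above can be checked quickly. This sketch only reproduces the post's figures; treating the RAM ratio as the "overall scale" is an assumption that happens to match the 8x the post uses.

```python
# Old (~2008) vs. modern figures, as given in the post.
OLD = {"ram_gb": 16, "ssd_gb": 80, "write_mb_s": 70}
NEW = {"ram_gb": 128, "ssd_gb": 512, "write_mb_s": 1500}


def scale_factors(old: dict, new: dict) -> dict:
    """Ratio of new to old for each metric."""
    return {key: new[key] / old[key] for key in old}


factors = scale_factors(OLD, NEW)
for name, factor in factors.items():
    print(f"{name}: {factor:.1f}x")

# ASSUMPTION: use the RAM ratio as the overall scale, rather than the
# much larger SSD write-speed ratio.
overall_scale = factors["ram_gb"]
print(f"overall scale: {overall_scale:.0f}x vs write-speed ratio {factors['write_mb_s']:.1f}x")
```

Scaling by the write-speed ratio instead would be about 2.7x more aggressive than the 8x overall scale.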

Keep your write_max lowish. I would propose making write_max half of write_boost, with the sum of the two no more than half the sequential write speed of the L2ARC device; that seems to work pretty well. You're trying not to flood the device with writes. Under normal circumstances that leaves lots of headroom for simultaneous reads, and even under write_boost conditions there is still margin, since a manufacturer's spec is optimistic and doesn't allow for things like garbage collection. But also consider the size of your RAM, and don't make the numbers unrealistically large: a system with less RAM should favor somewhat smaller numbers than a system with more RAM.
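The rule of thumb above can be written as a small helper. This is a minimal sketch, assuming a 1-second feed interval so that bytes per interval equals bytes per second; the function names are hypothetical, and the result is a ceiling rather than a target, since the RAM-size advice may call for smaller values.

```python
def suggest_l2arc_rates(seq_write_bytes_per_sec: int) -> tuple[int, int]:
    """Return the largest (write_max, write_boost) allowed by the guideline.

    Guideline: write_max = write_boost / 2, and
    write_max + write_boost <= half the device's sequential write speed.
    """
    budget = seq_write_bytes_per_sec // 2  # never more than half the device speed
    write_max = budget // 3                # write_max + 2 * write_max = 3 parts
    write_boost = 2 * write_max
    return write_max, write_boost


def within_guideline(write_max: int, write_boost: int, seq_write_bytes_per_sec: int) -> bool:
    """Check a pair of values against the same guideline."""
    return (write_boost == 2 * write_max
            and write_max + write_boost <= seq_write_bytes_per_sec // 2)


# A 1500MBytes/sec NVMe device, using decimal megabytes.
print(suggest_l2arc_rates(1_500_000_000))  # (250000000, 500000000)
```

For a 1500MBytes/sec device this caps write_max at 250MBytes and write_boost at 500MBytes per second; on a smaller-RAM system you would pick values well under that ceiling.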