Soarin

Cadet

- Joined: Mar 23, 2019
- Messages: 4

I recently ordered a RAID controller (an M1015) and was going to flash it to IT mode. However, it turns out the eBay seller sent me an M5015 instead, so until they respond to my refund request I'm just testing it out.

Without the RAID controller, with a drive plugged directly into a port on my motherboard, I get 110MB/s read and 53MB/s write. I test this by cd'ing into my pool and running the following command to test write speed:

# dd if=/dev/zero of=testfile bs=1024 count=50000

Then I run the following command to test read speed:

# dd if=testfile of=/dev/zero bs=1024 count=50000
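For scale, the two commands above move only about 51 MB each (1024-byte blocks, 50000 of them). On a machine with 8GB of RAM, a file that small may be served largely from cache, and zeros written to a pool with compression enabled may barely touch the disks, so the numbers might not reflect the drives or the controller. Below is a minimal sketch of the arithmetic plus a larger run. The helper names `dd_total_bytes` and `throughput_mbs` and the 8 GiB test size are assumptions chosen for illustration, not part of the original test.

```shell
#!/bin/sh
# Total bytes moved by a dd run: block size in bytes times block count.
dd_total_bytes() {
    echo $(( $1 * $2 ))
}

# Whole MB/s (1 MB = 1,000,000 bytes) from a byte count and elapsed seconds.
throughput_mbs() {
    echo $(( $1 / $2 / 1000000 ))
}

# Size of the original test: bs=1024 count=50000.
dd_total_bytes 1024 50000

# Hypothetical larger run with 1 MiB blocks (8192 blocks = 8 GiB).
# Uncomment and run from inside the pool directory:
# dd if=/dev/zero of=testfile bs=1048576 count=8192
# dd if=testfile of=/dev/null bs=1048576
```

The block size is written out in bytes so the same command line should work whether or not the local dd accepts size suffixes. Reading back to /dev/null discards the data, which is the usual sink for a read test.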

I transfer files over the network and get 50MB/s, which is right. However, I then threw this temporary RAID controller into the mix and set up hardware RAID10 to see what the speeds would be like on my 4-drive configuration. I booted into FreeNAS, set up the pool, and tested the network transfer speeds, and they're exactly the same as single-drive performance. I ran the two commands again in the shell to see if the performance increased there, and it's the same!

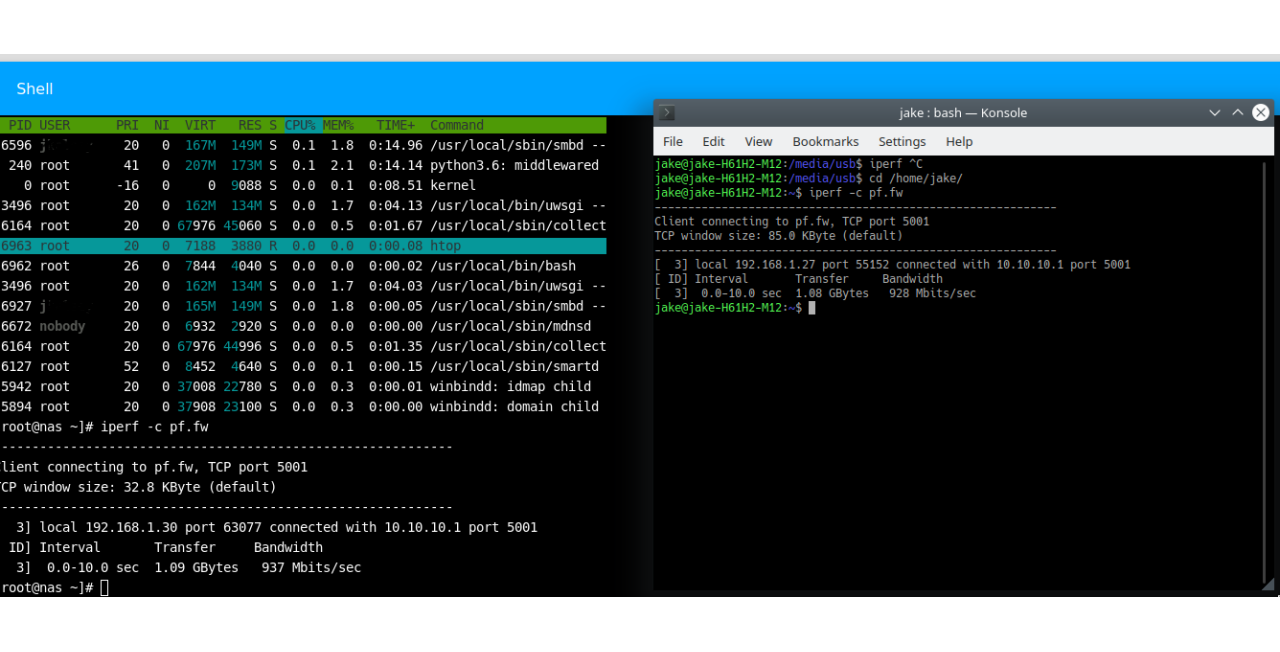

I then set up RAID0 and re-created the pool, and got the same result: 50MB/s! I turned off atime and compression on the pool to see if that would do anything, and still no dice. I ran iperf between my PC and my NAS, and here are the results:

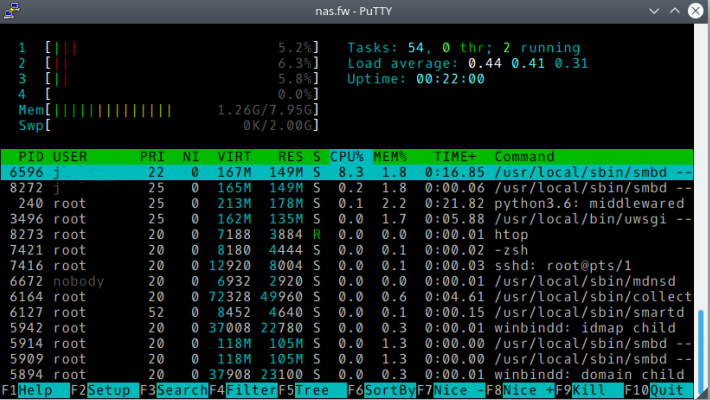

I also checked whether my CPU or RAM was being maxed out (Xeon X3430 and 8GB ECC RAM), and here are the results during a file transfer:

My FreeNAS install is mostly stock, since I installed it only a few days ago and haven't really gotten to use it while waiting for my M1015 to come in the mail. Now I have to send the card back since they sent me the wrong one.

If you need any more info, let me know. I hope this can be resolved! I still plan to get the M1015 even if the M5015 isn't the problem here.

OS Version:

FreeNAS-11.2-U2.1

(Build Date: Feb 27, 2019 20:59)

