I've been very happy with my FN setup (which is large). It serves quite a bit of NFS (VMware, so the writes are sync) and SMB, both over 10 Gbit, every day.

No jails, no FN-hosted VMs (no bhyve), and no plugins.

The full specs are in my sig. I don't use an L2ARC, but I do have a single 280 GB Optane as SLOG.

Build FreeNAS-11.2-U5

Platform Intel(R) Xeon(R) CPU E5-2637 v2 @ 3.50GHz

Memory 262067MB

System Time Mon, 10 Aug 2020 16:21:44 -0500

Uptime 4:21PM up 312 days, 12:12, 5 users

Load Average 1.60, 1.67, 1.73

(I have been testing 11.3 extensively on a separate physical box; I'm not ready to upgrade yet.)

My two questions, given 256 GB of RAM (ECC DDR3):

1- Should I consider setting a static size for my ARC (i.e. an ARC reservation for metadata)?

I assume NO, but the idea comes from these great threads I've read, where the author also has 256 GB and seems to be in a similar scenario to mine:

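Ways to leverage Optane in 12.0? (given 4k speed+latency, could Metadata+SLOG share devices, and is L2ARC less useful)

and

SLOG benchmarking and finding the best SLOG

and

What block size is used for servicing VMs over NFS? Trying to understand what size is most important.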

2- From the arc_summary.py output below, which entries stand out to you? ("You" being the very knowledgeable and generous users and admins on this forum.)

For me:

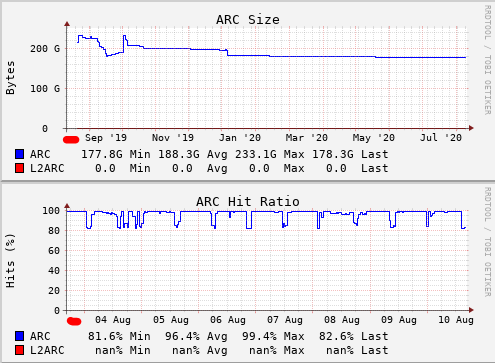

ARC Size: 71.58% 177.88 GiB

Actual Hit Ratio: 94.07% 670.64b

- This tells me FN/FreeBSD/ZFS is correctly allocating a large part of my 256 GB to ARC (but should the 177 GiB be higher, given that I will never run any FN "extras" such as bhyve?).

- This tells me that, on average, ZFS serves 94 out of 100 ARC requests (overwhelmingly metadata, going by the hit breakdown in the output) from the 177 GiB of RAM, as opposed to pulling them from my slow HDDs.

(See the image of hit ratio and size over time. I'm also using the "Remote Graphite Server" FN setting, so I have access to just about all of the arc_summary.py stats over time as well.)
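As a sanity check on the two figures above, here is a minimal Python sketch that recomputes the ratios from the raw counters in the arc_summary.py output in this post. The variable names are made up for illustration. The demand data rate should match the "Data Demand Efficiency" line; the metadata rate is not printed directly, so it is derived here as hits / (hits + misses), which is an assumption about how the two breakdown lists relate.

```python
# Counters copied from the arc_summary.py output in this post (in billions).
total_accesses = 712.94
cache_hits = 672.31
actual_hits = 670.64

# Hits and misses by data type, taken from the two breakdown lists.
demand_data_hits, demand_data_misses = 21.37, 17.68
demand_meta_hits, demand_meta_misses = 649.06, 16.35


def pct(part, whole):
    """Return part/whole as a percentage rounded to two decimals."""
    return round(100.0 * part / whole, 2)


overall_hit = pct(cache_hits, total_accesses)
actual_hit = pct(actual_hits, total_accesses)
meta_share_of_hits = pct(demand_meta_hits, cache_hits)

# Per-type hit rates: hits / (hits + misses) for each demand type.
data_hit = pct(demand_data_hits, demand_data_hits + demand_data_misses)
meta_hit = pct(demand_meta_hits, demand_meta_hits + demand_meta_misses)

print(f"Cache hit ratio:            {overall_hit}%")
print(f"Actual hit ratio:           {actual_hit}%")
print(f"Demand metadata share/hits: {meta_share_of_hits}%")
print(f"Demand data hit rate:       {data_hit}%")
print(f"Demand metadata hit rate:   {meta_hit}%")
```

Read this way, the 94% overall figure is dominated by metadata lookups, while demand data reads are served from ARC only a little over half the time.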

Code:

System Memory:

0.01% 13.95 MiB Active, 0.56% 1.40 GiB Inact

95.96% 239.43 GiB Wired, 0.00% 0 Bytes Cache

2.33% 5.82 GiB Free, 1.14% 2.85 GiB Gap

Real Installed: 256.00 GiB

Real Available: 99.97% 255.93 GiB

Real Managed: 97.49% 249.51 GiB

Logical Total: 256.00 GiB

Logical Used: 97.18% 248.78 GiB

Logical Free: 2.82% 7.22 GiB

Kernel Memory: 3.67 GiB

Data: 98.87% 3.63 GiB

Text: 1.13% 42.54 MiB

Kernel Memory Map: 249.51 GiB

Size: 6.39% 15.95 GiB

Free: 93.61% 233.56 GiB

Page: 1

------------------------------------------------------------------------

ARC Summary: (HEALTHY)

Storage pool Version: 5000

Filesystem Version: 5

Memory Throttle Count: 0

ARC Misc:

Deleted: 11.87b

Mutex Misses: 11.02m

Evict Skips: 11.02m

ARC Size: 71.58% 177.88 GiB

Target Size: (Adaptive) 71.48% 177.63 GiB

Min Size (Hard Limit): 12.50% 31.06 GiB

Max Size (High Water): 8:1 248.51 GiB

ARC Size Breakdown:

Recently Used Cache Size: 90.44% 160.88 GiB

Frequently Used Cache Size: 9.56% 17.00 GiB

ARC Hash Breakdown:

Elements Max: 9.81m

Elements Current: 80.97% 7.94m

Collisions: 4.76b

Chain Max: 8

Chains: 804.95k

Page: 2

------------------------------------------------------------------------

ARC Total accesses: 712.94b

Cache Hit Ratio: 94.30% 672.31b

Cache Miss Ratio: 5.70% 40.63b

Actual Hit Ratio: 94.07% 670.64b

Data Demand Efficiency: 54.72% 39.05b

Data Prefetch Efficiency: 20.94% 8.17b

CACHE HITS BY CACHE LIST:

Anonymously Used: 0.20% 1.37b

Most Recently Used: 3.95% 26.58b

Most Frequently Used: 95.80% 644.06b

Most Recently Used Ghost: 0.01% 72.13m

Most Frequently Used Ghost: 0.03% 224.49m

CACHE HITS BY DATA TYPE:

Demand Data: 3.18% 21.37b

Prefetch Data: 0.25% 1.71b

Demand Metadata: 96.54% 649.06b

Prefetch Metadata: 0.03% 171.82m

CACHE MISSES BY DATA TYPE:

Demand Data: 43.52% 17.68b

Prefetch Data: 15.89% 6.46b

Demand Metadata: 40.24% 16.35b

Prefetch Metadata: 0.35% 142.13m

Page: 3

------------------------------------------------------------------------

Page: 4

------------------------------------------------------------------------

DMU Prefetch Efficiency: 220.59b

Hit Ratio: 2.28% 5.03b

Miss Ratio: 97.72% 215.57b

Page: 5

------------------------------------------------------------------------

Page: 6

------------------------------------------------------------------------

ZFS Tunable (sysctl):

kern.maxusers 16715

vm.kmem_size 267912790016

vm.kmem_size_scale 1

vm.kmem_size_min 0

vm.kmem_size_max 1319413950874

vfs.zfs.vol.immediate_write_sz 32768

vfs.zfs.vol.unmap_sync_enabled 0

vfs.zfs.vol.unmap_enabled 1

vfs.zfs.vol.recursive 0

vfs.zfs.vol.mode 2

vfs.zfs.sync_pass_rewrite 2

vfs.zfs.sync_pass_dont_compress 5

vfs.zfs.sync_pass_deferred_free 2

vfs.zfs.zio.dva_throttle_enabled 1

vfs.zfs.zio.exclude_metadata 0

vfs.zfs.zio.use_uma 1

vfs.zfs.zil_slog_bulk 786432

vfs.zfs.cache_flush_disable 0

vfs.zfs.zil_replay_disable 0

vfs.zfs.version.zpl 5

vfs.zfs.version.spa 5000

vfs.zfs.version.acl 1

vfs.zfs.version.ioctl 7

vfs.zfs.debug 0

vfs.zfs.super_owner 0

vfs.zfs.immediate_write_sz 32768

vfs.zfs.standard_sm_blksz 131072

vfs.zfs.dtl_sm_blksz 4096

vfs.zfs.min_auto_ashift 12

vfs.zfs.max_auto_ashift 13

vfs.zfs.vdev.queue_depth_pct 1000

vfs.zfs.vdev.write_gap_limit 4096

vfs.zfs.vdev.read_gap_limit 32768

vfs.zfs.vdev.aggregation_limit_non_rotating 131072

vfs.zfs.vdev.aggregation_limit 1048576

vfs.zfs.vdev.trim_max_active 64

vfs.zfs.vdev.trim_min_active 1

vfs.zfs.vdev.scrub_max_active 2

vfs.zfs.vdev.scrub_min_active 1

vfs.zfs.vdev.async_write_max_active 10

vfs.zfs.vdev.async_write_min_active 1

vfs.zfs.vdev.async_read_max_active 3

vfs.zfs.vdev.async_read_min_active 1

vfs.zfs.vdev.sync_write_max_active 10

vfs.zfs.vdev.sync_write_min_active 10

vfs.zfs.vdev.sync_read_max_active 10

vfs.zfs.vdev.sync_read_min_active 10

vfs.zfs.vdev.max_active 1000

vfs.zfs.vdev.async_write_active_max_dirty_percent 60

vfs.zfs.vdev.async_write_active_min_dirty_percent 30

vfs.zfs.vdev.mirror.non_rotating_seek_inc 1

vfs.zfs.vdev.mirror.non_rotating_inc 0

vfs.zfs.vdev.mirror.rotating_seek_offset 1048576

vfs.zfs.vdev.mirror.rotating_seek_inc 5

vfs.zfs.vdev.mirror.rotating_inc 0

vfs.zfs.vdev.trim_on_init 1

vfs.zfs.vdev.bio_delete_disable 0

vfs.zfs.vdev.bio_flush_disable 0

vfs.zfs.vdev.cache.bshift 16

vfs.zfs.vdev.cache.size 0

vfs.zfs.vdev.cache.max 16384

vfs.zfs.vdev.default_ms_shift 29

vfs.zfs.vdev.min_ms_count 16

vfs.zfs.vdev.max_ms_count 200

vfs.zfs.vdev.trim_max_pending 10000

vfs.zfs.txg.timeout 5

vfs.zfs.trim.enabled 1

vfs.zfs.trim.max_interval 1

vfs.zfs.trim.timeout 30

vfs.zfs.trim.txg_delay 32

vfs.zfs.spa_min_slop 134217728

vfs.zfs.spa_slop_shift 5

vfs.zfs.spa_asize_inflation 24

vfs.zfs.deadman_enabled 1

vfs.zfs.deadman_checktime_ms 5000

vfs.zfs.deadman_synctime_ms 1000000

vfs.zfs.debug_flags 0

vfs.zfs.debugflags 0

vfs.zfs.recover 0

vfs.zfs.spa_load_verify_data 1

vfs.zfs.spa_load_verify_metadata 1

vfs.zfs.spa_load_verify_maxinflight 10000

vfs.zfs.max_missing_tvds_scan 0

vfs.zfs.max_missing_tvds_cachefile 2

vfs.zfs.max_missing_tvds 0

vfs.zfs.spa_load_print_vdev_tree 0

vfs.zfs.ccw_retry_interval 300

vfs.zfs.check_hostid 1

vfs.zfs.mg_fragmentation_threshold 85

vfs.zfs.mg_noalloc_threshold 0

vfs.zfs.condense_pct 200

vfs.zfs.metaslab_sm_blksz 4096

vfs.zfs.metaslab.bias_enabled 1

vfs.zfs.metaslab.lba_weighting_enabled 1

vfs.zfs.metaslab.fragmentation_factor_enabled 1

vfs.zfs.metaslab.preload_enabled 1

vfs.zfs.metaslab.preload_limit 3

vfs.zfs.metaslab.unload_delay 8

vfs.zfs.metaslab.load_pct 50

vfs.zfs.metaslab.min_alloc_size 33554432

vfs.zfs.metaslab.df_free_pct 4

vfs.zfs.metaslab.df_alloc_threshold 131072

vfs.zfs.metaslab.debug_unload 0

vfs.zfs.metaslab.debug_load 0

vfs.zfs.metaslab.fragmentation_threshold 70

vfs.zfs.metaslab.force_ganging 16777217

vfs.zfs.free_bpobj_enabled 1

vfs.zfs.free_max_blocks 18446744073709551615

vfs.zfs.zfs_scan_checkpoint_interval 7200

vfs.zfs.zfs_scan_legacy 0

vfs.zfs.no_scrub_prefetch 0

vfs.zfs.no_scrub_io 0

vfs.zfs.resilver_min_time_ms 3000

vfs.zfs.free_min_time_ms 1000

vfs.zfs.scan_min_time_ms 1000

vfs.zfs.scan_idle 50

vfs.zfs.scrub_delay 4

vfs.zfs.resilver_delay 2

vfs.zfs.top_maxinflight 32

vfs.zfs.delay_scale 500000

vfs.zfs.delay_min_dirty_percent 60

vfs.zfs.dirty_data_sync 67108864

vfs.zfs.dirty_data_max_percent 10

vfs.zfs.dirty_data_max_max 4294967296

vfs.zfs.dirty_data_max 4294967296

vfs.zfs.max_recordsize 1048576

vfs.zfs.default_ibs 15

vfs.zfs.default_bs 9

vfs.zfs.zfetch.array_rd_sz 1048576

vfs.zfs.zfetch.max_idistance 67108864

vfs.zfs.zfetch.max_distance 8388608

vfs.zfs.zfetch.min_sec_reap 2

vfs.zfs.zfetch.max_streams 8

vfs.zfs.prefetch_disable 0

vfs.zfs.send_holes_without_birth_time 1

vfs.zfs.mdcomp_disable 0

vfs.zfs.per_txg_dirty_frees_percent 30

vfs.zfs.nopwrite_enabled 1

vfs.zfs.dedup.prefetch 1

vfs.zfs.dbuf_cache_lowater_pct 10

vfs.zfs.dbuf_cache_hiwater_pct 10

vfs.zfs.dbuf_cache_shift 5

vfs.zfs.dbuf_cache_max_bytes 8338720256

vfs.zfs.arc_min_prescient_prefetch_ms 6

vfs.zfs.arc_min_prefetch_ms 1

vfs.zfs.l2c_only_size 0

vfs.zfs.mfu_ghost_data_esize 7915449344

vfs.zfs.mfu_ghost_metadata_esize 148436202496

vfs.zfs.mfu_ghost_size 156351651840

vfs.zfs.mfu_data_esize 29638873088

vfs.zfs.mfu_metadata_esize 1056493056

vfs.zfs.mfu_size 36613960192

vfs.zfs.mru_ghost_data_esize 5438178816

vfs.zfs.mru_ghost_metadata_esize 28938361856

vfs.zfs.mru_ghost_size 34376540672

vfs.zfs.mru_data_esize 131708334592

vfs.zfs.mru_metadata_esize 461664768

vfs.zfs.mru_size 144175309824

vfs.zfs.anon_data_esize 0

vfs.zfs.anon_metadata_esize 0

vfs.zfs.anon_size 95007232

vfs.zfs.l2arc_norw 1

vfs.zfs.l2arc_feed_again 1

vfs.zfs.l2arc_noprefetch 1

vfs.zfs.l2arc_feed_min_ms 200

vfs.zfs.l2arc_feed_secs 1

vfs.zfs.l2arc_headroom 2

vfs.zfs.l2arc_write_boost 8388608

vfs.zfs.l2arc_write_max 8388608

vfs.zfs.arc_meta_limit 66709762048

vfs.zfs.arc_free_target 1393393

vfs.zfs.arc_kmem_cache_reap_retry_ms 1000

vfs.zfs.compressed_arc_enabled 1

vfs.zfs.arc_grow_retry 60

vfs.zfs.arc_shrink_shift 7

vfs.zfs.arc_average_blocksize 8192

vfs.zfs.arc_no_grow_shift 5

vfs.zfs.arc_min 33354881024

vfs.zfs.arc_max 266839048192

vfs.zfs.abd_chunk_size 4096

Page: 7

------------------------------------------------------------------------
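Relating back to question 1, here is a minimal Python sketch that puts the ARC limits from the sysctl page into GiB and shows each as a share of `vfs.zfs.arc_max`. The three values are copied from the output above; `parse_tunables` and the other names are hypothetical helpers written for this post, not part of arc_summary.py.

```python
import re

# A few lines copied from the "ZFS Tunable (sysctl)" page of this post.
SYSCTL_TEXT = """\
vfs.zfs.arc_meta_limit 66709762048
vfs.zfs.arc_min 33354881024
vfs.zfs.arc_max 266839048192
"""

GIB = 2 ** 30


def parse_tunables(text):
    """Map each '<name> <integer>' line to {name: int}; skip anything else."""
    tunables = {}
    for line in text.splitlines():
        match = re.fullmatch(r"\s*(\S+)\s+(\d+)\s*", line)
        if match:
            tunables[match.group(1)] = int(match.group(2))
    return tunables


tunables = parse_tunables(SYSCTL_TEXT)
arc_max = tunables["vfs.zfs.arc_max"]

for name in ("vfs.zfs.arc_min", "vfs.zfs.arc_meta_limit", "vfs.zfs.arc_max"):
    value = tunables[name]
    share = 100.0 * value / arc_max
    print(f"{name}: {value / GIB:.2f} GiB ({share:.2f}% of arc_max)")
```

On this box the metadata ceiling works out to exactly a quarter of `arc_max` (about 62 GiB), and `arc_min` to an eighth, which matches the 12.50% "Min Size" line. That quarter is the number a metadata reservation would be changing; whether changing it helps is not something these figures alone settle.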